Introduction to regression

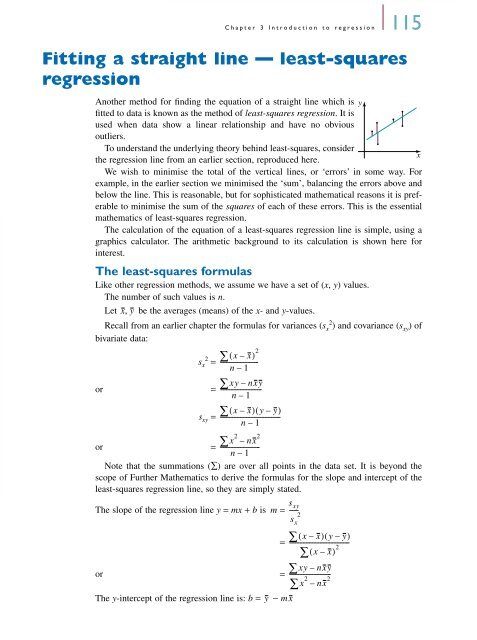

Chapter 3 Introduction to regression

Fitting a straight line: least-squares regression

Another method for finding the equation of a straight line fitted to data is known as the method of least-squares regression. It is used when the data show a linear relationship and have no obvious outliers.

To understand the underlying theory behind least-squares, consider the regression line from an earlier section, reproduced here.

[Figure: the regression line from an earlier section, plotted on x and y axes.]

We wish to minimise, in some way, the total length of the vertical lines drawn from each data point to the regression line, the ‘errors’. For example, in the earlier section we minimised the ‘sum’, balancing the errors above and below the line. This is reasonable, but for sophisticated mathematical reasons it is preferable to minimise the sum of the squares of these errors. This is the essential mathematics of least-squares regression.
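The difference between balancing the errors and minimising their squares can be sketched in a few lines of Python. The data set and the two candidate lines below are hypothetical, invented only for illustration, and the function names are not from the text. Both lines pass through the point of means, so their signed errors cancel to zero, yet the sums of squared errors still tell them apart.

```python
def signed_error_sum(xs, ys, m, b):
    """Sum of the signed vertical errors y - (m*x + b)."""
    return sum(y - (m * x + b) for x, y in zip(xs, ys))


def sum_of_squared_errors(xs, ys, m, b):
    """Sum of the squared vertical errors from each point to y = m*x + b."""
    return sum((y - (m * x + b)) ** 2 for x, y in zip(xs, ys))


# Hypothetical data, invented for illustration only.
xs = [1, 2, 3, 4, 5]
ys = [2, 4, 5, 4, 5]

# Two candidate lines, given as (slope, intercept).
line_a = (0.6, 2.2)
line_b = (1.0, 1.0)

signed_a = signed_error_sum(xs, ys, *line_a)
signed_b = signed_error_sum(xs, ys, *line_b)
sse_a = sum_of_squared_errors(xs, ys, *line_a)
sse_b = sum_of_squared_errors(xs, ys, *line_b)

print("signed sums:", round(signed_a, 6), round(signed_b, 6))
print("squared sums:", round(sse_a, 6), round(sse_b, 6))
```

On this data the signed sums are both zero (up to rounding), while the squared sums are about 2.4 for the first line and 4 for the second, so the squared criterion prefers the first line.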

The calculation of the equation of a least-squares regression line is simple using a graphics calculator. The arithmetic background to its calculation is shown here for interest.

The least-squares formulas<br />

As with other regression methods, we assume we have a set of (x, y) values. The number of such values is n.

Let $\bar{x}$ and $\bar{y}$ be the averages (means) of the x- and y-values.

Recall from an earlier chapter the formulas for the variance ($s_x^2$) and covariance ($s_{xy}$) of bivariate data:

$$s_x^2 = \frac{\sum (x - \bar{x})^2}{n - 1} \quad \text{or} \quad s_x^2 = \frac{\sum x^2 - n\bar{x}^2}{n - 1}$$

$$s_{xy} = \frac{\sum (x - \bar{x})(y - \bar{y})}{n - 1} \quad \text{or} \quad s_{xy} = \frac{\sum xy - n\bar{x}\bar{y}}{n - 1}$$

Note that the summations (∑) are over all points in the data set. It is beyond the scope of Further Mathematics to derive the formulas for the slope and intercept of the least-squares regression line, so they are simply stated.

The slope of the regression line $y = mx + b$ is:

$$m = \frac{s_{xy}}{s_x^2} = \frac{\sum (x - \bar{x})(y - \bar{y})}{\sum (x - \bar{x})^2} \quad \text{or} \quad m = \frac{\sum xy - n\bar{x}\bar{y}}{\sum x^2 - n\bar{x}^2}$$

The y-intercept of the regression line is:

$$b = \bar{y} - m\bar{x}$$
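As a rough check on the arithmetic, the slope and intercept formulas can be worked through in Python. This is a minimal sketch, not a replacement for the calculator method: the function names and the small data set are hypothetical, chosen only for illustration. The first function uses the ratio of covariance to variance, and the second uses the alternative form built from ∑xy and ∑x², so the two slopes can be compared.

```python
def least_squares_line(xs, ys):
    """Return (m, b) for y = m*x + b, using m = s_xy / s_x^2 and b = y_bar - m*x_bar."""
    n = len(xs)
    if n < 2 or n != len(ys):
        raise ValueError("need at least two (x, y) pairs, with equal-length lists")
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    # Covariance and variance, each with the n - 1 divisor.
    s_xy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys)) / (n - 1)
    s_x2 = sum((x - x_bar) ** 2 for x in xs) / (n - 1)
    if s_x2 == 0:
        raise ValueError("all x-values are equal, so the slope is undefined")
    m = s_xy / s_x2
    b = y_bar - m * x_bar
    return m, b


def slope_alternative(xs, ys):
    """Slope from the alternative form (sum xy - n*x_bar*y_bar) / (sum x^2 - n*x_bar^2)."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    numerator = sum(x * y for x, y in zip(xs, ys)) - n * x_bar * y_bar
    denominator = sum(x * x for x in xs) - n * x_bar ** 2
    return numerator / denominator


# Hypothetical data, invented for illustration only.
xs = [1, 2, 3, 4, 5]
ys = [2, 4, 5, 4, 5]

m, b = least_squares_line(xs, ys)
m_alt = slope_alternative(xs, ys)
print("slope:", round(m, 4), "intercept:", round(b, 4), "alternative slope:", round(m_alt, 4))
```

For this data the means are 3 and 4, the covariance is 1.5 and the variance of x is 2.5, giving a slope of about 0.6 and an intercept of about 2.2. Both slope formulas agree, as expected, because the n − 1 divisors cancel in the ratio.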