Nonparametric Bayesian Discrete Latent Variable Models for ...

Nonparametric Bayesian Discrete Latent Variable Models for ...

Nonparametric Bayesian Discrete Latent Variable Models for ...

You also want an ePaper? Increase the reach of your titles

YUMPU automatically turns print PDFs into web optimized ePapers that Google loves.

4 Indian Buffet Process <strong>Models</strong><br />

mixing time<br />

10 2<br />

10 0<br />

mixing times <strong>for</strong> α<br />

Stick−BreakingSemi−Ordered Gibbs Sampling<br />

mixing time<br />

10 2<br />

10 0<br />

mixing times of active features<br />

Stick−BreakingSemi−Ordered Gibbs Sampling<br />

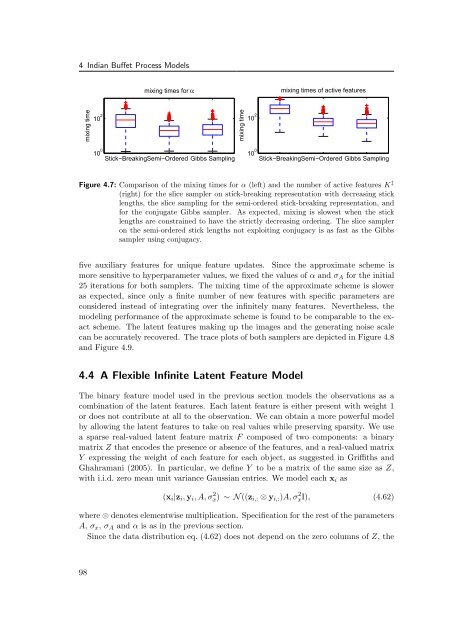

Figure 4.7: Comparison of the mixing times <strong>for</strong> α (left) and the number of active features K ‡<br />

(right) <strong>for</strong> the slice sampler on stick-breaking representation with decreasing stick<br />

lengths, the slice sampling <strong>for</strong> the semi-ordered stick-breaking representation, and<br />

<strong>for</strong> the conjugate Gibbs sampler. As expected, mixing is slowest when the stick<br />

lengths are constrained to have the strictly decreasing ordering. The slice sampler<br />

on the semi-ordered stick lengths not exploiting conjugacy is as fast as the Gibbs<br />

sampler using conjugacy.<br />

five auxiliary features <strong>for</strong> unique feature updates. Since the approximate scheme is<br />

more sensitive to hyperparameter values, we fixed the values of α and σA <strong>for</strong> the initial<br />

25 iterations <strong>for</strong> both samplers. The mixing time of the approximate scheme is slower<br />

as expected, since only a finite number of new features with specific parameters are<br />

considered instead of integrating over the infinitely many features. Nevertheless, the<br />

modeling per<strong>for</strong>mance of the approximate scheme is found to be comparable to the exact<br />

scheme. The latent features making up the images and the generating noise scale<br />

can be accurately recovered. The trace plots of both samplers are depicted in Figure 4.8<br />

and Figure 4.9.<br />

4.4 A Flexible Infinite <strong>Latent</strong> Feature Model<br />

The binary feature model used in the previous section models the observations as a<br />

combination of the latent features. Each latent feature is either present with weight 1<br />

or does not contribute at all to the observation. We can obtain a more powerful model<br />

by allowing the latent features to take on real values while preserving sparsity. We use<br />

a sparse real-valued latent feature matrix F composed of two components: a binary<br />

matrix Z that encodes the presence or absence of the features, and a real-valued matrix<br />

Y expressing the weight of each feature <strong>for</strong> each object, as suggested in Griffiths and<br />

Ghahramani (2005). In particular, we define Y to be a matrix of the same size as Z,<br />

with i.i.d. zero mean unit variance Gaussian entries. We model each xi as<br />

(xi|zi, yi, A, σ 2 x) ∼ N ((zi,: ⊗ yi,:)A, σ 2 xI), (4.62)<br />

where ⊗ denotes elementwise multiplication. Specification <strong>for</strong> the rest of the parameters<br />

A, σx, σA and α is as in the previous section.<br />

Since the data distribution eq. (4.62) does not depend on the zero columns of Z, the<br />

98