Chapter 14 - Bootstrap Methods and Permutation Tests - WH Freeman




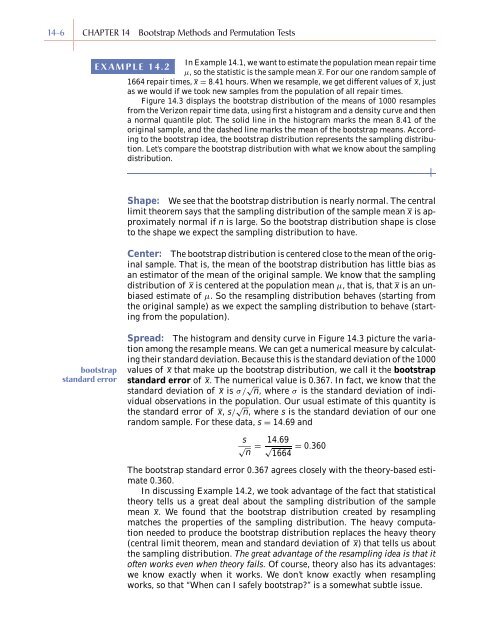

EXAMPLE 14.2

In Example 14.1, we want to estimate the population mean repair time µ, so the statistic is the sample mean x̄. For our one random sample of 1664 repair times, x̄ = 8.41 hours. When we resample, we get different values of x̄, just as we would if we took new samples from the population of all repair times.
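To make the resampling step concrete, here is a minimal Python sketch of drawing a few resamples. The Verizon repair times are not reproduced in this section, so the sketch assumes a hypothetical stand-in: 1664 simulated, right-skewed (exponential) times with mean near 8.41 hours. The helper name `resample` is illustrative and does not come from the text.

```python
import random
import statistics

# ASSUMPTION: simulated stand-in for the 1664 Verizon repair times.
# Exponential values are right-skewed like repair times; these are NOT the real data.
rng = random.Random(14)
sample = [rng.expovariate(1 / 8.41) for _ in range(1664)]

def resample(data, rng):
    """Hypothetical helper: draw len(data) values from data, with replacement."""
    return rng.choices(data, k=len(data))

x_bar = statistics.fmean(sample)
print(f"original sample: x-bar = {x_bar:.2f}")

# Each resample has the same size as the original sample. Because values are
# drawn with replacement, some times repeat and others are left out, so x-bar varies.
for i in range(3):
    r = resample(sample, rng)
    print(f"resample {i + 1}: x-bar = {statistics.fmean(r):.2f}")
```

Every resample contains only values from the original sample; the variation in its mean comes entirely from which values happen to be drawn.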

Figure 14.3 displays the bootstrap distribution of the means of 1000 resamples from the Verizon repair time data, using first a histogram and a density curve and then a normal quantile plot. The solid line in the histogram marks the mean 8.41 of the original sample, and the dashed line marks the mean of the bootstrap means. According to the bootstrap idea, the bootstrap distribution represents the sampling distribution.
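The bootstrap distribution in Figure 14.3 is simply the collection of 1000 resample means. A minimal sketch of building it in plain Python, again assuming simulated stand-in data rather than the actual Verizon sample; `bootstrap_means` is a hypothetical helper name.

```python
import random
import statistics

def bootstrap_means(data, n_resamples=1000, seed=None):
    """Return the means of n_resamples resamples of data (drawn with replacement)."""
    rng = random.Random(seed)
    n = len(data)
    return [statistics.fmean(rng.choices(data, k=n)) for _ in range(n_resamples)]

# ASSUMPTION: simulated, right-skewed stand-in data (not the Verizon sample).
gen = random.Random(1)
sample = [gen.expovariate(1 / 8.41) for _ in range(1664)]

boot = bootstrap_means(sample, n_resamples=1000, seed=2)
# Analogues of the two vertical lines in Figure 14.3, for the simulated data.
print(f"mean of original sample: {statistics.fmean(sample):.3f}")  # solid line
print(f"mean of bootstrap means: {statistics.fmean(boot):.3f}")    # dashed line
```

Passing `boot` to any histogram or normal quantile plot routine would give a picture analogous to Figure 14.3; the plotting step is left out to keep the sketch dependency-free.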

Let’s compare the bootstrap distribution with what we know about the sampling distribution.

Shape: We see that the bootstrap distribution is nearly normal. The central limit theorem says that the sampling distribution of the sample mean x̄ is approximately normal if n is large, and here n = 1664. So the shape of the bootstrap distribution is close to the shape we expect the sampling distribution to have.
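The shape judgment above rests on a normal quantile plot. One way to summarize such a plot with a single number is the correlation between the sorted values and standard normal quantiles: values near 1 mean the points lie close to a straight line. A minimal sketch under the same simulated-data assumption; `normal_plot_correlation` is a hypothetical helper, and the plotting positions (i + 0.5)/n are one common choice among several.

```python
import random
import statistics
from statistics import NormalDist

def normal_plot_correlation(values):
    """Correlation between sorted values and standard normal quantiles.

    Values near 1 mean the normal quantile plot is close to a straight line.
    """
    xs = sorted(values)
    n = len(xs)
    std_normal = NormalDist()
    # Plotting positions (i + 0.5) / n for i = 0..n-1 (an assumed, common choice).
    zs = [std_normal.inv_cdf((i + 0.5) / n) for i in range(n)]
    mx, mz = statistics.fmean(xs), statistics.fmean(zs)
    sxz = sum((x - mx) * (z - mz) for x, z in zip(xs, zs))
    sxx = sum((x - mx) ** 2 for x in xs)
    szz = sum((z - mz) ** 2 for z in zs)
    return sxz / (sxx * szz) ** 0.5

# ASSUMPTION: simulated, right-skewed stand-in data (not the Verizon sample).
gen = random.Random(1)
sample = [gen.expovariate(1 / 8.41) for _ in range(1664)]
boot = [statistics.fmean(gen.choices(sample, k=len(sample))) for _ in range(1000)]

print(f"raw data:        r = {normal_plot_correlation(sample):.4f}")
print(f"bootstrap means: r = {normal_plot_correlation(boot):.4f}")
```

For skewed stand-in data, the raw values give a noticeably lower correlation than the resample means, which is the central limit theorem at work on x̄.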

Center: The bootstrap distribution is centered close to the mean of the original sample. That is, the mean of the bootstrap distribution has little bias as an estimator of the mean of the original sample. We know that the sampling distribution of x̄ is centered at the population mean µ, that is, that x̄ is an unbiased estimate of µ. So the resampling distribution behaves (starting from the original sample) as we expect the sampling distribution to behave (starting from the population).
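The center comparison amounts to the usual bootstrap estimate of bias: the mean of the bootstrap distribution minus the statistic's value on the original sample. A minimal sketch under the same simulated-data assumption; `bootstrap_bias` is a hypothetical name, and passing the statistic in as a function is a design choice not taken from the text.

```python
import random
import statistics

def bootstrap_bias(data, statistic, n_resamples=1000, seed=None):
    """Bootstrap bias estimate: mean of the resampled statistic minus
    the statistic computed on the original sample."""
    rng = random.Random(seed)
    n = len(data)
    observed = statistic(data)
    replicates = [statistic(rng.choices(data, k=n)) for _ in range(n_resamples)]
    return statistics.fmean(replicates) - observed

# ASSUMPTION: simulated, right-skewed stand-in data (not the Verizon sample).
gen = random.Random(1)
sample = [gen.expovariate(1 / 8.41) for _ in range(1664)]

bias = bootstrap_bias(sample, statistics.fmean, seed=2)
print(f"estimated bias of x-bar: {bias:+.4f} hours")
```

For x̄ this estimate should be small compared with the standard error, which is what "little bias" means here.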


Spread: The histogram and density curve in Figure 14.3 picture the variation among the resample means. We can get a numerical measure by calculating their standard deviation. Because this is the standard deviation of the 1000 values of x̄ that make up the bootstrap distribution, we call it the bootstrap standard error of x̄. The numerical value is 0.367. In fact, we know that the standard deviation of x̄ is σ/√n, where σ is the standard deviation of individual observations in the population. Our usual estimate of this quantity is the standard error of x̄, s/√n, where s is the standard deviation of our one random sample. For these data, s = 14.69 and

$$\frac{s}{\sqrt{n}} = \frac{14.69}{\sqrt{1664}} = 0.360$$

The bootstrap standard error 0.367 agrees closely with the theory-based estimate 0.360.
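The comparison above can be reproduced in code: the bootstrap standard error is the standard deviation of the resample means, and the theory-based value is s/√n. A minimal sketch; the first line checks the text's arithmetic, while the rest uses simulated stand-in data (an assumption, not the Verizon sample), so its two numbers will differ from 0.367 and 0.360 but should agree closely with each other. `bootstrap_se` and `formula_se` are hypothetical helper names.

```python
import math
import random
import statistics

def bootstrap_se(data, n_resamples=1000, seed=None):
    """Bootstrap standard error of x-bar: the standard deviation of the resample means."""
    rng = random.Random(seed)
    n = len(data)
    means = [statistics.fmean(rng.choices(data, k=n)) for _ in range(n_resamples)]
    return statistics.stdev(means)

def formula_se(data):
    """Usual theory-based standard error of x-bar: s / sqrt(n)."""
    return statistics.stdev(data) / math.sqrt(len(data))

# The text's arithmetic with s = 14.69 and n = 1664.
print(f"{14.69 / math.sqrt(1664):.3f}")  # prints 0.360

# ASSUMPTION: simulated, right-skewed stand-in data (not the Verizon sample).
gen = random.Random(1)
sample = [gen.expovariate(1 / 8.41) for _ in range(1664)]
print(f"bootstrap SE: {bootstrap_se(sample, seed=3):.3f}")
print(f"s / sqrt(n):  {formula_se(sample):.3f}")
```

With 1000 resamples the bootstrap standard error itself has some Monte Carlo variability, so small disagreements like 0.367 versus 0.360 are expected.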

In discussing Example 14.2, we took advantage of the fact that statistical theory tells us a great deal about the sampling distribution of the sample mean x̄. We found that the bootstrap distribution created by resampling matches the properties of the sampling distribution. The heavy computation needed to produce the bootstrap distribution replaces the heavy theory (central limit theorem, mean and standard deviation of x̄) that tells us about the sampling distribution. The great advantage of the resampling idea is that it often works even when theory fails. Of course, theory also has its advantages: we know exactly when it works. We don’t know exactly when resampling works, so “When can I safely bootstrap?” is a somewhat subtle question.