Performance Tuning Siebel Software on the Sun Platform

designed such that one thread of a Siebel OM services one user session or task. The ratio of threads (that is, users) per process is configured using the following Siebel parameters:

• MinMTServers
• MaxMTServers
• MaxTasks

Several tests on the Solaris platform with Siebel 7.5 found that the following users/OM ratios provided optimal performance:

• Call Center: 80 users/OM
• eChannel: 40 users/OM
• eSales: 50 users/OM
• eService: 60 users/OM

As these figures show, the optimal ratio of threads per process varies with the Siebel OM and with the type of Siebel workload per user. MaxTasks divided by MaxMTServers determines the number of users per process. For example, for 300 users the settings would be MinMTServers=6, MaxMTServers=6, MaxTasks=300. This directs Siebel to distribute the users evenly across the 6 processes, with 50 users on each (300 / 6 = 50). Anonymous users must also be considered in this calculation, as discussed in the Call Center section, the eChannel section, and so on. The prstat -v or top command shows how many threads, or users, are being serviced by a single multithreaded Siebel process.

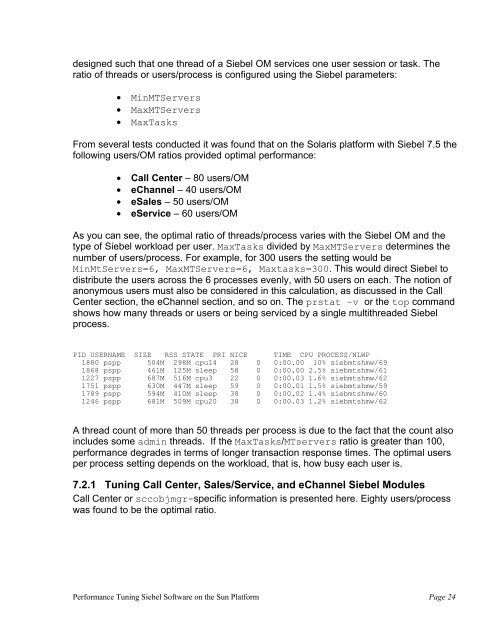

 PID USERNAME  SIZE   RSS STATE  PRI NICE     TIME  CPU PROCESS/NLWP
1880 pspp      504M  298M cpu14   28    0  0:00.00  10% siebmtshmw/69
1868 pspp      461M  125M sleep   58    0  0:00.00 2.5% siebmtshmw/61
1227 pspp      687M  516M cpu3    22    0  0:00.03 1.6% siebmtshmw/62
1751 pspp      630M  447M sleep   59    0  0:00.01 1.5% siebmtshmw/59
1789 pspp      594M  410M sleep   38    0  0:00.02 1.4% siebmtshmw/60
1246 pspp      681M  509M cpu20   38    0  0:00.03 1.2% siebmtshmw/62

Each process shows more than 50 threads because the NLWP count also includes some administrative threads. If the MaxTasks/MaxMTServers ratio is greater than 100, performance degrades and transaction response times grow longer. The optimal users-per-process setting depends on the workload, that is, on how busy each user is.
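You can compare the observed thread counts with the configured ratio by extracting the NLWP field from prstat output like the sample above. NLWP is the number of lightweight processes, that is, threads. The Python sketch below makes two assumptions:

- The output uses the default column layout shown, with PROCESS/NLWP as the last field.
- The configuration is 50 users per process (MaxTasks=300, MaxMTServers=6).

The function names `thread_counts` and `ratio_ok` are hypothetical helpers, not Siebel or Solaris tools. So is the `MAX_USERS_PER_PROCESS` constant, which is set to the 100 users/process threshold stated in the text.

```python
# Sample rows copied from the prstat output shown above.
SAMPLE = """\
PID USERNAME SIZE RSS STATE PRI NICE TIME CPU PROCESS/NLWP
1880 pspp 504M 298M cpu14 28 0 0:00.00 10% siebmtshmw/69
1868 pspp 461M 125M sleep 58 0 0:00.00 2.5% siebmtshmw/61
1227 pspp 687M 516M cpu3 22 0 0:00.03 1.6% siebmtshmw/62
1751 pspp 630M 447M sleep 59 0 0:00.01 1.5% siebmtshmw/59
1789 pspp 594M 410M sleep 38 0 0:00.02 1.4% siebmtshmw/60
1246 pspp 681M 509M cpu20 38 0 0:00.03 1.2% siebmtshmw/62
"""

# Hypothetical constant: above this MaxTasks/MaxMTServers ratio the text
# reports longer transaction response times.
MAX_USERS_PER_PROCESS = 100


def thread_counts(prstat_text: str, process_name: str = "siebmtshmw") -> dict:
    """Map PID -> NLWP for rows whose PROCESS/NLWP field names process_name."""
    counts = {}
    for line in prstat_text.splitlines()[1:]:  # skip the header row
        fields = line.split()
        if not fields:
            continue
        # Last field looks like "siebmtshmw/69": split on the final "/".
        name, _, nlwp = fields[-1].rpartition("/")
        if name == process_name:
            counts[int(fields[0])] = int(nlwp)
    return counts


def ratio_ok(max_tasks: int, max_mt_servers: int) -> bool:
    """True if users per process stays at or below the degradation threshold."""
    if max_mt_servers <= 0:
        raise ValueError("max_mt_servers must be positive")
    return max_tasks / max_mt_servers <= MAX_USERS_PER_PROCESS


if __name__ == "__main__":
    users_per_process = 50  # assumed configuration: MaxTasks=300, MaxMTServers=6
    for pid, nlwp in thread_counts(SAMPLE).items():
        # Threads beyond the user sessions are attributed to admin threads.
        print(pid, nlwp, "extra threads:", nlwp - users_per_process)
    print(ratio_ok(300, 6))   # 50 users/process
    print(ratio_ok(1200, 6))  # 200 users/process
```

On the sample, every siebmtshmw process reports between 59 and 69 threads. That is 9 to 19 more than the 50 configured user sessions, which is consistent with the administrative threads mentioned above.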

7.2.1 Tuning Call Center, Sales/Service, and eChannel Siebel Modules

Information specific to Call Center (sccobjmgr) is presented here. Eighty users per process was found to be the optimal ratio.
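You can derive the settings for a target user count by combining the 80 users/process ratio with the rule that MaxTasks divided by MaxMTServers gives the users per process. The Python sketch below is a minimal illustration that makes two assumptions:

- MinMTServers is set equal to MaxMTServers, as in the 300-user example earlier.
- The process count is rounded up so that no process exceeds the target ratio.

The function name `om_settings` and the `OPTIMAL_USERS_PER_OM` table are hypothetical; the ratios are the Siebel 7.5 figures listed earlier. Anonymous users, which must also be counted, are not modeled.

```python
import math

# Hypothetical lookup table holding the Siebel 7.5 users/OM ratios from the text.
OPTIMAL_USERS_PER_OM = {
    "Call Center": 80,
    "eChannel": 40,
    "eSales": 50,
    "eService": 60,
}


def om_settings(users: int, users_per_om: int) -> dict:
    """Return hypothetical MinMTServers/MaxMTServers/MaxTasks values.

    MaxTasks is the total number of users. The number of multithreaded
    OM processes is rounded up so that MaxTasks / MaxMTServers never
    exceeds users_per_om. Anonymous users are NOT accounted for here.
    """
    if users <= 0 or users_per_om <= 0:
        raise ValueError("users and users_per_om must be positive")
    servers = math.ceil(users / users_per_om)
    return {
        "MinMTServers": servers,
        "MaxMTServers": servers,
        "MaxTasks": users,
    }


if __name__ == "__main__":
    # The 300-user example from the text at 50 users/OM.
    print(om_settings(300, 50))
    # 300 Call Center users at the 80 users/OM ratio.
    print(om_settings(300, OPTIMAL_USERS_PER_OM["Call Center"]))
```

For 300 Call Center users this yields 4 processes (MaxTasks=300, MaxMTServers=4), or 75 users per process. Rounding up keeps each process at or below 80 users rather than overshooting the ratio.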
