The Evaluation of 'Behavioural Additionality' - IWT

CHAPTER 3 > Conceptual and Empirical Challenges of Evaluating the Effectiveness of Innovation Policies with ‘Behavioural Additionality’

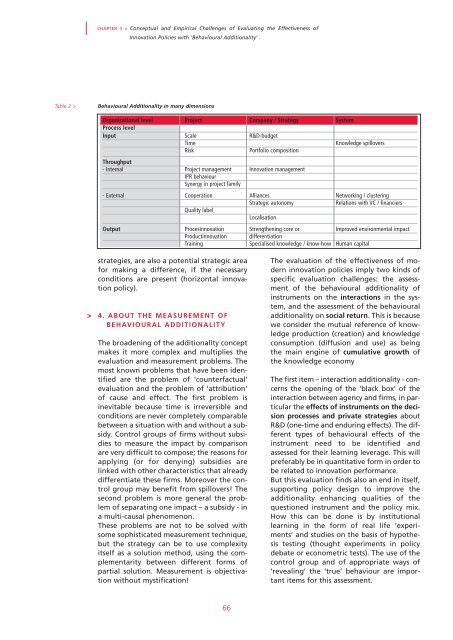

Table 2 > Behavioural Additionality in many dimensions

Organisational levels: Project, Company / Strategy, System

| Process level | Dimensions |
|---|---|
| Input | Scale; R&D-budget; Time; Knowledge spillovers; Risk; Portfolio composition |
| Throughput: internal | Project management; Innovation management; IPR behaviour; Synergy in project family |
| Throughput: external | Cooperation; Alliances; Networking / clustering; Strategic autonomy; Relations with VC / financiers; Quality label; Localisation |
| Output | Process innovation; Product innovation; Strengthening core or differentiation; Improved environmental impact; Training; Specialised knowledge / know-how; Human capital |

strategies, are also a potential strategic area for making a difference, if the necessary conditions are present (horizontal innovation policy).

4. ABOUT THE MEASUREMENT OF BEHAVIOURAL ADDITIONALITY

The broadening of the additionality concept makes it more complex and multiplies the evaluation and measurement problems. The best-known problems identified so far are the problem of ‘counterfactual’ evaluation and the problem of ‘attribution’ of cause and effect. The first problem is inevitable because time is irreversible and conditions are never completely comparable between the situation with a subsidy and the situation without it. Control groups of unsubsidised firms, which would allow the impact to be measured by comparison, are very difficult to compose: the reasons for applying for (or for denying) a subsidy are linked to other characteristics that already differentiate these firms. Moreover, the control group may itself benefit from spillovers. The second problem is, more generally, that of isolating the effect of one cause (a subsidy) within a multi-causal phenomenon.
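To make the two sources of bias in a naive with/without comparison concrete, here is a small Python simulation. It is a hypothetical sketch: the unobserved firm "capability" variable, the selection rule, the effect size and the spillover share are all assumptions chosen for illustration, not figures from this study. Because more capable firms are assumed to be more likely to obtain a subsidy, the naive difference in mean outcomes overstates the true effect. Letting non-subsidised firms capture part of the effect through spillovers pulls the estimate back down, so the two biases can partly mask each other.

```python
import random
import statistics

# Hypothetical illustration: all parameters are assumptions, not data from the report.
TRUE_EFFECT = 1.0      # assumed true effect of the subsidy on a firm's R&D outcome
SPILLOVER_SHARE = 0.3  # assumed share of that effect leaking to non-subsidised firms


def naive_estimate(n_firms: int = 10_000, spillover: float = 0.0, seed: int = 42) -> float:
    """Mean outcome of subsidised firms minus mean outcome of non-subsidised firms."""
    rng = random.Random(seed)
    treated, control = [], []
    for _ in range(n_firms):
        capability = rng.gauss(0.0, 1.0)  # unobserved firm characteristic
        # Selection: more capable firms are more likely to obtain a subsidy.
        subsidised = rng.random() < (0.8 if capability > 0 else 0.2)
        # Capability also raises the outcome, independently of any subsidy.
        baseline = 2.0 * capability + rng.gauss(0.0, 1.0)
        if subsidised:
            treated.append(baseline + TRUE_EFFECT)
        else:
            # Spillover: the "control" firms also capture part of the effect.
            control.append(baseline + spillover * TRUE_EFFECT)
    return statistics.mean(treated) - statistics.mean(control)


if __name__ == "__main__":
    print(f"true effect:                          {TRUE_EFFECT:.2f}")
    print(f"naive estimate, selection only:       {naive_estimate():.2f}")
    print(f"naive estimate, selection + spillover: "
          f"{naive_estimate(spillover=SPILLOVER_SHARE):.2f}")
```

Both runs reuse the same random draws, so the spillover run is lower than the selection-only run by exactly `SPILLOVER_SHARE * TRUE_EFFECT` (up to floating-point rounding). Under these assumed parameters, both estimates stay well above `TRUE_EFFECT`.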

These problems cannot be solved by some sophisticated measurement technique. Instead, the strategy can be to use complexity itself as a solution method, exploiting the complementarity between different forms of partial solution. Measurement is objectivation without mystification!

The evaluation of the effectiveness of modern innovation policies implies two kinds of specific evaluation challenges. The first is the assessment of the behavioural additionality of instruments with respect to the interactions in the system. The second is the assessment of their behavioural additionality with respect to social return. This is because we consider the mutual interdependence of knowledge production (creation) and knowledge consumption (diffusion and use) to be the main engine of cumulative growth in the knowledge economy.

The first item, interaction additionality, concerns opening the ‘black box’ of the interaction between the agency and firms, in particular the effects of instruments on firms’ decision processes and private R&D strategies (both one-time and enduring effects). The different types of behavioural effects of an instrument need to be identified and assessed for their learning leverage, preferably in quantitative form, so that they can be related to innovation performance.

But this evaluation is also an end in itself. It supports policy design by helping to improve the additionality-enhancing qualities of the instrument in question and of the policy mix. This can be done through institutional learning in the form of real-life ‘experiments’ and of studies based on hypothesis testing (thought experiments in policy debate, or econometric tests). Control groups and appropriate ways of ‘revealing’ the ‘true’ behaviour are important elements of this assessment.
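When outcomes can be observed both before and after support, one partial solution of this kind is a difference-in-differences comparison with a control group. This technique is our illustrative choice and is not one prescribed by the text. The hypothetical Python sketch below rests on several assumptions: a time-invariant, unobserved firm capability that drives both selection and outcomes, a common trend affecting all firms, and a fixed subsidy effect. Comparing each group's before/after change removes the capability gap that inflates a naive comparison of post-period outcomes.

```python
import random
import statistics

# Hypothetical illustration: parameters are assumptions, not figures from the report.
TRUE_EFFECT = 1.0   # assumed effect of the subsidy on the R&D outcome
COMMON_TREND = 0.5  # assumed change affecting all firms between the two periods


def did_estimate(n_firms: int = 10_000, seed: int = 7) -> tuple[float, float]:
    """Return (naive post-period gap, difference-in-differences estimate)."""
    rng = random.Random(seed)
    changes = {True: [], False: []}  # per-firm change (after - before), keyed by subsidy status
    post = {True: [], False: []}     # post-period outcome levels, keyed by subsidy status
    for _ in range(n_firms):
        capability = rng.gauss(0.0, 1.0)  # unobserved and constant over time
        # Selection: more capable firms are more likely to obtain a subsidy.
        subsidised = rng.random() < (0.8 if capability > 0 else 0.2)
        before = 2.0 * capability + rng.gauss(0.0, 1.0)
        after = 2.0 * capability + COMMON_TREND + rng.gauss(0.0, 1.0)
        if subsidised:
            after += TRUE_EFFECT  # the subsidy only acts in the second period
        changes[subsidised].append(after - before)
        post[subsidised].append(after)
    naive = statistics.mean(post[True]) - statistics.mean(post[False])
    did = statistics.mean(changes[True]) - statistics.mean(changes[False])
    return naive, did


if __name__ == "__main__":
    naive, did = did_estimate()
    print(f"true effect: {TRUE_EFFECT:.2f}  naive: {naive:.2f}  diff-in-diff: {did:.2f}")
```

Difference-in-differences only removes differences that stay constant over time. It does not correct for selection on time-varying characteristics or for spillovers into the control group, so it remains one partial solution among several.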
