Introduction to Krylov subspace methods - IMAGe

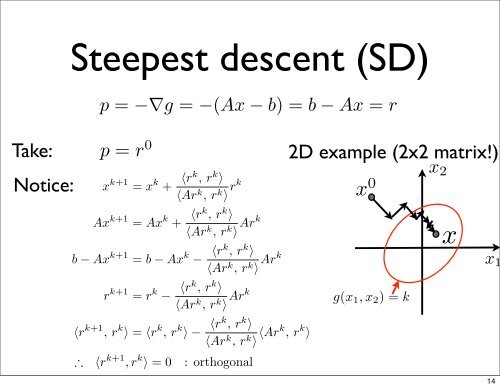

Gradient Method, also known as (AKA) "Steepest Descent (SD)"

What is $p$?

$$p = -\nabla g = -(Ax - b) = b - Ax = r$$

From (5.4), taking $p = r_0$ at $x_0$, and likewise $p = r_k$ at each $x_k$:

$$x_{k+1} = x_k + \frac{\langle r_k, r_k \rangle}{\langle Ar_k, r_k \rangle}\, r_k$$

$$Ax_{k+1} = Ax_k + \frac{\langle r_k, r_k \rangle}{\langle Ar_k, r_k \rangle}\, Ar_k$$

$$b - Ax_{k+1} = b - Ax_k - \frac{\langle r_k, r_k \rangle}{\langle Ar_k, r_k \rangle}\, Ar_k$$

$$r_{k+1} = r_k - \frac{\langle r_k, r_k \rangle}{\langle Ar_k, r_k \rangle}\, Ar_k$$

Notice:

$$\langle r_{k+1}, r_k \rangle = \langle r_k, r_k \rangle - \frac{\langle r_k, r_k \rangle}{\langle Ar_k, r_k \rangle}\, \langle Ar_k, r_k \rangle$$

$$\therefore\ \langle r_{k+1}, r_k \rangle = 0 \;:\; \text{orthogonal}$$

2D example (2x2 matrix!): [figure showing $g(x_1, x_2)$ and the starting point $x_0$]
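
The steepest descent update and the orthogonality of consecutive residuals can be checked numerically. The following is a minimal sketch in plain Python, assuming $A$ is symmetric positive definite; the 2x2 matrix `A`, the right-hand side `b`, the starting point, and the helper names `matvec`, `dot`, and `steepest_descent` are illustrative assumptions, not values or names taken from the slides.

```python
def matvec(A, v):
    """Multiply a dense matrix (list of rows) by a vector."""
    return [sum(a * x for a, x in zip(row, v)) for row in A]


def dot(u, v):
    """Euclidean inner product <u, v>."""
    return sum(a * b for a, b in zip(u, v))


def steepest_descent(A, b, x0, tol=1e-10, max_iter=1000):
    """Steepest descent for Ax = b, assuming A is symmetric positive definite.

    Returns the final iterate and the list of residuals r_0, r_1, ...
    """
    x = list(x0)
    r = [bi - ai for bi, ai in zip(b, matvec(A, x))]  # r_0 = b - A x_0
    residuals = [r]
    for _ in range(max_iter):
        rr = dot(r, r)
        if rr ** 0.5 < tol:  # stop once the residual norm is small
            break
        Ar = matvec(A, r)
        alpha = rr / dot(Ar, r)  # <r_k, r_k> / <A r_k, r_k>
        x = [xi + alpha * ri for xi, ri in zip(x, r)]     # x_{k+1} = x_k + alpha r_k
        r = [ri - alpha * ari for ri, ari in zip(r, Ar)]  # r_{k+1} = r_k - alpha A r_k
        residuals.append(r)
    return x, residuals


# Hypothetical 2x2 example (values chosen for illustration only).
A = [[3.0, 1.0], [1.0, 2.0]]
b = [1.0, 1.0]
x, residuals = steepest_descent(A, b, [0.0, 0.0])

# Inner products of consecutive residuals; each should be zero up to rounding.
consecutive = [dot(residuals[k + 1], residuals[k]) for k in range(len(residuals) - 1)]
```

For this choice of `A` and `b` the exact solution is $(0.2, 0.4)$, and the first step gives $r_0 = (1, 1)$, $\alpha_0 = 2/7$, $r_1 = (-1/7, 1/7)$, so $\langle r_1, r_0 \rangle = 0$ as the derivation predicts.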