Introduction to Krylov subspace methods - IMAGe

Introduction to Krylov subspace methods - IMAGe

Introduction to Krylov subspace methods - IMAGe

Create successful ePaper yourself

Turn your PDF publications into a flip-book with our unique Google optimized e-Paper software.

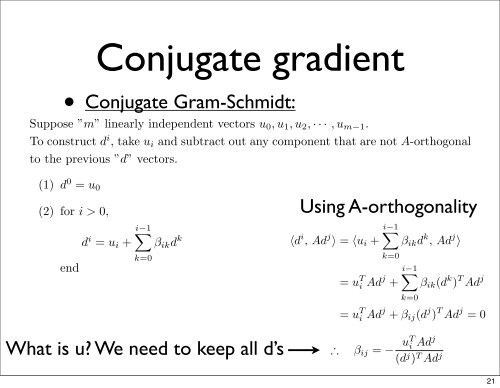

5 KRYLOV SUBSPACE METHODS

Conjugate gradient

With $r_{k+1} = r_k - \alpha_k A p_k$ and step length $\alpha_k = \langle r_k, p_k\rangle / \langle A p_k, p_k\rangle$:

$$\langle r_{k+1}, p_k\rangle = \langle r_k, p_k\rangle - \alpha_k \langle A p_k, p_k\rangle = 0$$

$$\therefore\; r_{k+1} \perp p_k$$

The new residual is orthogonal to the search direction.

• Conjugate Gram-Schmidt procedure:

Suppose we have $m$ linearly independent vectors $u_0, u_1, u_2, \dots, u_{m-1}$. To construct $d_i$, take $u_i$ and subtract out any components that are not A-orthogonal to the previous $d$ vectors.

(1) $d_0 = u_0$
(2) for $i > 0$,
      $d_i = u_i + \sum_{k=0}^{i-1} \beta_{ik} d_k$
    end

Using A-orthogonality: the previous directions satisfy $d_k^T A d_j = 0$ for $k \neq j$, so for each $j < i$ only the $k = j$ term of the sum survives:

$$\langle d_i, A d_j\rangle = \Big\langle u_i + \sum_{k=0}^{i-1} \beta_{ik} d_k,\; A d_j \Big\rangle = u_i^T A d_j + \sum_{k=0}^{i-1} \beta_{ik}\, d_k^T A d_j = u_i^T A d_j + \beta_{ij}\, d_j^T A d_j = 0$$

$$\therefore\; \beta_{ij} = -\frac{u_i^T A d_j}{d_j^T A d_j}$$

What is $u$? We need to keep all $d$'s.

This is not cheap, since we need to keep the vectors $d$ to
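A minimal pure-Python sketch of the conjugate Gram-Schmidt procedure, assuming $A$ is symmetric positive definite and stored as a dense list of rows. The helper names `matvec`, `dot`, and `conjugate_gram_schmidt` are hypothetical, chosen only for illustration. Each new $d_i$ starts from $u_i$ and adds $\beta_{ij} d_j$ for every stored $d_j$, with $\beta_{ij} = -u_i^T A d_j / d_j^T A d_j$ as in the derivation.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(A: Matrix, x: Vector) -> Vector:
    """Dense matrix-vector product A x."""
    return [sum(a_ij * x_j for a_ij, x_j in zip(row, x)) for row in A]


def dot(x: Vector, y: Vector) -> float:
    """Euclidean inner product x^T y."""
    return sum(x_i * y_i for x_i, y_i in zip(x, y))


def conjugate_gram_schmidt(A: Matrix, us: List[Vector]) -> List[Vector]:
    """Build A-orthogonal directions d_0, ..., d_{m-1} from linearly
    independent vectors u_0, ..., u_{m-1} (A assumed symmetric positive definite)."""
    ds: List[Vector] = []   # every previous direction d_j must be kept
    Ads: List[Vector] = []  # cached products A d_j, one per stored d_j
    for u in us:
        d = list(u)  # step (1) for i = 0; otherwise the starting point u_i
        for d_j, Ad_j in zip(ds, Ads):
            # beta_ij = -(u_i^T A d_j) / (d_j^T A d_j)
            beta = -dot(u, Ad_j) / dot(d_j, Ad_j)
            d = [d_k + beta * dj_k for d_k, dj_k in zip(d, d_j)]
        ds.append(d)
        Ads.append(matvec(A, d))
    return ds


if __name__ == "__main__":
    # Small SPD example matrix; the u_i are the standard basis vectors.
    A = [[4.0, 1.0, 0.0],
         [1.0, 3.0, 1.0],
         [0.0, 1.0, 2.0]]
    us = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    ds = conjugate_gram_schmidt(A, us)
    # Largest |d_i^T A d_j| over i != j; should be at rounding level.
    off = max(abs(dot(ds[i], matvec(A, ds[j])))
              for i in range(len(ds)) for j in range(i))
    print(f"max |d_i^T A d_j| for i != j: {off:.2e}")
```

The inner loop runs over every stored direction and its cached product $A d_j$, so memory and work grow with $i$. This is the cost the notes point out: all the $d$ vectors have to be kept.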