Introduction to Krylov subspace methods - IMAGe

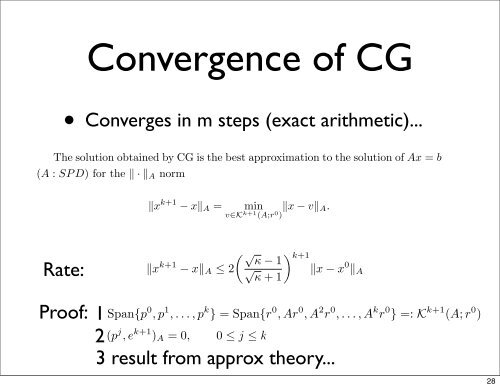

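CG as a projection method

From the CG recurrences

$$p_0 = r_0, \qquad r_{k+1} = r_k - \alpha_k A p_k, \qquad p_{k+1} = r_{k+1} + \beta_{k+1} p_k$$

we get $p_1 = r_1 + \beta_1 r_0$, $p_2 = r_2 + \beta_2 p_1 = r_2 + \beta_2 (r_1 + \beta_1 r_0)$, and so on, so that

$$\operatorname{Span}\{p_0, p_1, \dots, p_k\} = \operatorname{Span}\{r_0, r_1, \dots, r_k\}.$$

We want to show that

$$\operatorname{Span}\{p_0, p_1, \dots, p_k\} = \operatorname{Span}\{r_0, A r_0, A^2 r_0, \dots, A^k r_0\} =: K_{k+1}(A; r_0).$$

The space $K_{k+1}(A; r_0)$ is called the Krylov space.

Proof, by induction. For $k = 0$, $p_0 = r_0 \in K_1(A; r_0)$. Suppose the claim is true for $k$, that is, assume that $r_k \in K_{k+1}(A; r_0)$ and $p_k \in K_{k+1}(A; r_0)$, say $r_k = \sum_{m=0}^{k} \theta_m A^m r_0$ and $p_k = \sum_{m=0}^{k} \tilde\theta_m A^m r_0$. Then

$$r_{k+1} = r_k - \alpha_k A p_k = \sum_{m=0}^{k} \theta_m A^m r_0 - \alpha_k \sum_{m=0}^{k} \tilde\theta_m A^{m+1} r_0 \in K_{k+2}(A; r_0),$$

and hence also $p_{k+1} = r_{k+1} + \beta_{k+1} p_k \in K_{k+2}(A; r_0)$.

We have shown that $(p_j, x_{k+1} - x_0)_A = (p_j, x - x_0)_A$, so that, with $e_{k+1} := x_{k+1} - x$,

$$(p_j, e_{k+1})_A = 0, \qquad 0 \le j \le k.$$

So $x_{k+1} - x_0$ is the orthogonal projection of $x - x_0$ onto $\operatorname{Span}\{p_0, p_1, \dots, p_k\} = K_{k+1}(A; r_0)$ with respect to $(\cdot, \cdot)_A$. Equivalently, for every $v \in K_{k+1}(A; r_0)$,

$$0 = (v, e_{k+1})_A = (v, A e_{k+1}) = (v, A(x_{k+1} - x)) = -(v, r_{k+1}),$$

that is, $r_{k+1} \perp K_{k+1}(A; r_0)$ (Galerkin condition).

Consequently, the solution obtained by CG is the best approximation to the solution of $Ax = b$ in the $\|\cdot\|_A$ norm:

$$\|x_{k+1} - x\|_A = \min_{v \in x_0 + K_{k+1}(A; r_0)} \|x - v\|_A.$$

Consider the A-norm of the error. Since $r_0 = A(x - x_0)$, every $\omega \in K_{k+1}(A; r_0)$ has the form $\omega = \sum_{i=1}^{k+1} \theta_i A^i (x - x_0)$, so

$$\|x_{k+1} - x\|_A = \min_{\omega \in K_{k+1}(A; r_0)} \|(x - x_0) - \omega\|_A = \min_{\theta} \Big\| \Big(I - \sum_{i=1}^{k+1} \theta_i A^i\Big)(x - x_0) \Big\|_A = \min_{p \in P^*_{k+1}} \|p(A)(x - x_0)\|_A,$$

where $P^*_{k+1} := \{p \in P_{k+1} \mid p(0) = 1\}$. Since $A$ is SPD, $\|p(A)(x - x_0)\|_A \le \max_{\lambda \in [\lambda_{\min}, \lambda_{\max}]} |p(\lambda)| \, \|x - x_0\|_A$ for every such $p$.

Convergence of CG (p.86 in [2])

1) CG converges in m steps (exact arithmetic); the proof is left as homework.

2) A practical error bound is useful in applications. It follows from a result from approximation theory (p.48 in [8]) using the shifted and scaled Chebyshev polynomial

$$\hat T_k(t) = \frac{T_k\!\left(1 + \frac{2(t - \beta)}{\beta - \alpha}\right)}{T_k\!\left(1 + \frac{2(\gamma - \beta)}{\beta - \alpha}\right)},$$

with $\alpha = \lambda_{\min}$, $\beta = \lambda_{\max}$ and $\gamma = 0$, so that $\hat T_{k+1} \in P^*_{k+1}$.

Theorem. Let $A$ be SPD and $\eta = \dfrac{\lambda_{\min}}{\lambda_{\max} - \lambda_{\min}}$. Then

$$\|x_{k+1} - x\|_A \le \frac{\|x - x_0\|_A}{T_{k+1}(1 + 2\eta)} \le 2 \left( \frac{\sqrt{\kappa(A)} - 1}{\sqrt{\kappa(A)} + 1} \right)^{k+1} \|x - x_0\|_A,$$

since

$$T_{k+1}(1 + 2\eta) \ge \frac{1}{2} \left( \frac{\sqrt{\lambda_{\max}} + \sqrt{\lambda_{\min}}}{\sqrt{\lambda_{\max}} - \sqrt{\lambda_{\min}}} \right)^{k+1} = \frac{1}{2} \left( \frac{\sqrt{\kappa} + 1}{\sqrt{\kappa} - 1} \right)^{k+1}, \qquad \kappa = \kappa(A) = \frac{\lambda_{\max}}{\lambda_{\min}}.$$

Rate: $\dfrac{\sqrt{\kappa} - 1}{\sqrt{\kappa} + 1}$.

5.3 Projection methods

We now understand that CG, steepest descent and Richardson are projection methods onto a Krylov subspace. In general, let $V = [v_1, \dots, v_m]$ be an $n \times m$ matrix whose columns $\{v_i\}_{i=1}^m$ are a basis of the subspace $K$, and let $W = [w_1, \dots, w_m]$ be an (A-orthogonal) basis of …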
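The chain of results above (Krylov span of the search directions, Galerkin condition, A-norm optimality, Chebyshev bound) can be checked numerically on a small example. Below is a minimal sketch in pure Python, assuming a small dense SPD matrix stored as a list of rows; all helper names (`cg`, `krylov_basis`, `projection_step`, `cg_bounds`, and the others) and the test matrix are hypothetical illustrations, not taken from the lecture. It uses the standard projection formula $x = x_0 + V y$ with $(W^T A V)\, y = W^T r_0$; with $W = V$ spanning $K_k(A; r_0)$ this is the Galerkin condition, and the result should coincide with the $k$-th CG iterate. Note the index shift: here $x_k \in x_0 + K_k(A; r_0)$, which corresponds to $x_{k+1} \in x_0 + K_{k+1}(A; r_0)$ in the text.

```python
import math
# Illustrative sketch: all helper names below are hypothetical, not from the lecture.

def matvec(A, x):
    """Dense matrix-vector product; A is a list of rows."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def a_norm(A, v):
    """Energy norm ||v||_A = sqrt(v^T A v) for SPD A (clamped at 0 against roundoff)."""
    return math.sqrt(max(dot(v, matvec(A, v)), 0.0))

def solve(M, rhs):
    """Small dense solve: Gaussian elimination with partial pivoting."""
    n = len(M)
    aug = [list(row) + [r] for row, r in zip(M, rhs)]
    for c in range(n):
        piv = max(range(c, n), key=lambda i: abs(aug[i][c]))
        aug[c], aug[piv] = aug[piv], aug[c]
        for i in range(c + 1, n):
            f = aug[i][c] / aug[c][c]
            for j in range(c, n + 1):
                aug[i][j] -= f * aug[c][j]
    y = [0.0] * n
    for i in reversed(range(n)):
        y[i] = (aug[i][n] - sum(aug[i][j] * y[j] for j in range(i + 1, n))) / aug[i][i]
    return y

def cg(A, b, x0, steps):
    """Plain CG; returns [x_0, ..., x_steps]. No breakdown guard: assumes steps <= grade of r_0."""
    x = list(x0)
    r = [bi - axi for bi, axi in zip(b, matvec(A, x))]
    p = list(r)                                           # p_0 = r_0
    iterates = [list(x)]
    for _ in range(steps):
        rr = dot(r, r)
        Ap = matvec(A, p)
        alpha = rr / dot(p, Ap)
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * api for ri, api in zip(r, Ap)]  # r_{k+1} = r_k - alpha_k A p_k
        beta = dot(r, r) / rr
        p = [ri + beta * pi for ri, pi in zip(r, p)]      # p_{k+1} = r_{k+1} + beta_{k+1} p_k
        iterates.append(list(x))
    return iterates

def krylov_basis(A, r0, k):
    """Orthonormal basis of K_k(A; r_0), built Arnoldi-style with Gram-Schmidt."""
    basis = []
    for j in range(k):
        v = list(r0) if j == 0 else matvec(A, basis[-1])
        for q in basis:                                   # orthogonalize against earlier vectors
            c = dot(q, v)
            v = [vi - c * qi for vi, qi in zip(v, q)]
        nv = math.sqrt(dot(v, v))
        basis.append([vi / nv for vi in v])
    return basis

def projection_step(A, b, x0, V, W):
    """Generic projection: x = x0 + V y with W^T (b - A x) = 0, i.e. (W^T A V) y = W^T r_0."""
    r0 = [bi - axi for bi, axi in zip(b, matvec(A, x0))]
    AV = [matvec(A, v) for v in V]
    M = [[dot(w, av) for av in AV] for w in W]            # M = W^T A V
    y = solve(M, [dot(w, r0) for w in W])
    return [x0[i] + sum(yj * v[i] for yj, v in zip(y, V)) for i in range(len(x0))]

def cg_bounds(lam_min, lam_max, k):
    """(1 / T_k(1 + 2 eta), 2 q^k) with q = (sqrt(kappa) - 1) / (sqrt(kappa) + 1)."""
    eta = lam_min / (lam_max - lam_min)
    cheb = math.cosh(k * math.acosh(1 + 2 * eta))         # T_k(z) = cosh(k arccosh z) for z >= 1
    kappa = lam_max / lam_min
    q = (math.sqrt(kappa) - 1) / (math.sqrt(kappa) + 1)
    return 1 / cheb, 2 * q ** k

# Test problem (an assumption): 1D Laplacian tridiag(-1, 2, -1) of size n,
# whose eigenvalues are 2 - 2 cos(j pi / (n + 1)), j = 1, ..., n.
n = 5
A = [[2.0 if i == j else (-1.0 if abs(i - j) == 1 else 0.0) for j in range(n)] for i in range(n)]
b = [1.0] + [0.0] * (n - 1)
x0 = [0.0] * n
x_exact = solve(A, b)
lam_min = 2 - 2 * math.cos(math.pi / (n + 1))
lam_max = 2 - 2 * math.cos(n * math.pi / (n + 1))

iterates = cg(A, b, x0, n)
r0 = [bi - axi for bi, axi in zip(b, matvec(A, x0))]
e0 = a_norm(A, [xi - xe for xi, xe in zip(x0, x_exact)])
rows = []
for k in range(1, n + 1):
    V = krylov_basis(A, r0, k)
    x_gal = projection_step(A, b, x0, V, V)               # Galerkin: W = V spans K_k(A; r_0)
    diff = max(abs(u - v) for u, v in zip(iterates[k], x_gal))
    ratio = a_norm(A, [xi - xe for xi, xe in zip(iterates[k], x_exact)]) / e0
    rows.append((k, diff, ratio) + cg_bounds(lam_min, lam_max, k))
    print(*rows[-1])
```

Each printed row lists $k$, the gap between the CG iterate and the Galerkin projection (expected at roundoff level), the ratio $\|x_k - x\|_A / \|x - x_0\|_A$, and the two bounds $1/T_k(1 + 2\eta)$ and $2q^k$. The Krylov basis is orthonormalized rather than kept as $r_0, A r_0, \dots$ because the monomial basis quickly becomes ill-conditioned; both span the same space.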