Project Proposal (PDF) - Oxford Brookes University

Project Proposal (PDF) - Oxford Brookes University

Project Proposal (PDF) - Oxford Brookes University

You also want an ePaper? Increase the reach of your titles

YUMPU automatically turns print PDFs into web optimized ePapers that Google loves.

FP7-ICT-2011-9 STREP proposal<br />

18/01/12 v1 [Dynact]<br />

A first sensible option is to consider imprecise graphical models, and iHMMs in particular, as convex sets of<br />

probability distributions themselves. A distance/dissimilarity between two imprecise models is then just a<br />

special case of distance between two sets of distributions. We can then compute the latter as the vector of the<br />

distances between all the possible pairs of distributions in the two sets, and take the minimum or the<br />

maximum distance, or we can identify a single representative element in the credal set (e.g., the maximum<br />

entropy element or the center of mass), to which distance measures for single distributions can be applied.<br />

These ideas have already been formalised by OBU and IDSIA: yet the evaluation of such distances require to<br />

define an optimisation problem whose efficient solution still needs to be developed.<br />

SubTask 2.1.2 - Specialisation to the class of imprecise hidden Markov models (iHMMs)<br />

The results achieved in the previous task should later be specialised to the sets of (joint) probability<br />

distributions associated with an iHMM. The challenge, here, is to perform the distance evaluation efficiently,<br />

by exploiting the algorithms developed in Task 1.2.<br />

SubTask 2.1.3 - Supervised learning of similarity measures for precise generative models<br />

A second option is to learn in a supervised way the “best” metric for a given training set of models [3, 4, 48].<br />

A natural optimisation criterion consists on maximising the classification performance achieved by the learnt<br />

metric, a problem which has elegant solutions in the case of linear mappings [50, 57]. An interesting tool is<br />

provided by the formalism of pullback metrics: if the models belong to a Riemannian manifold M, any<br />

diffeomorphism of itself (or “automorphism”) induces such a metric on M (see Figure 5). By designing a<br />

suitable parameterized family of automorphisms we obtain a family of pullback metrics we can optimise on.<br />

OBU already has relevant experience in this sense [13]. The second goal of Task 2.1 will be the formulation<br />

of a general manifold learning framework for the classification of generative (precise) models, starting from<br />

hidden Markov models, later to be extended to more powerful classes of dynamical models (such as, for<br />

instance, semi-Markov [51], hierarchical or variable length [26] Markov models) able to describe complex<br />

activities rather than elementary actions.<br />

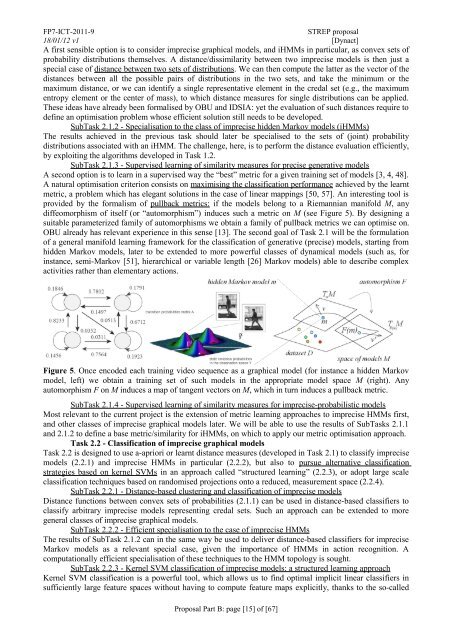

Figure 5. Once encoded each training video sequence as a graphical model (for instance a hidden Markov<br />

model, left) we obtain a training set of such models in the appropriate model space M (right). Any<br />

automorphism F on M induces a map of tangent vectors on M, which in turn induces a pullback metric.<br />

SubTask 2.1.4 - Supervised learning of similarity measures for imprecise-probabilistic models<br />

Most relevant to the current project is the extension of metric learning approaches to imprecise HMMs first,<br />

and other classes of imprecise graphical models later. We will be able to use the results of SubTasks 2.1.1<br />

and 2.1.2 to define a base metric/similarity for iHMMs, on which to apply our metric optimisation approach.<br />

Task 2.2 - Classification of imprecise graphical models<br />

Task 2.2 is designed to use a-apriori or learnt distance measures (developed in Task 2.1) to classify imprecise<br />

models (2.2.1) and imprecise HMMs in particular (2.2.2), but also to pursue alternative classification<br />

strategies based on kernel SVMs in an approach called “structured learning” (2.2.3), or adopt large scale<br />

classification techniques based on randomised projections onto a reduced, measurement space (2.2.4).<br />

SubTask 2.2.1 - Distance-based clustering and classification of imprecise models<br />

Distance functions between convex sets of probabilities (2.1.1) can be used in distance-based classifiers to<br />

classify arbitrary imprecise models representing credal sets. Such an approach can be extended to more<br />

general classes of imprecise graphical models.<br />

SubTask 2.2.2 - Efficient specialisation to the case of imprecise HMMs<br />

The results of SubTask 2.1.2 can in the same way be used to deliver distance-based classifiers for imprecise<br />

Markov models as a relevant special case, given the importance of HMMs in action recognition. A<br />

computationally efficient specialisation of these techniques to the HMM topology is sought.<br />

SubTask 2.2.3 - Kernel SVM classification of imprecise models: a structured learning approach<br />

Kernel SVM classification is a powerful tool, which allows us to find optimal implicit linear classifiers in<br />

sufficiently large feature spaces without having to compute feature maps explicitly, thanks to the so-called<br />

<strong>Proposal</strong> Part B: page [15] of [67]