Project Proposal (PDF) - Oxford Brookes University

FP7-ICT-2011-9 STREP proposal

18/01/12 v1 [Dynact]

Work package description

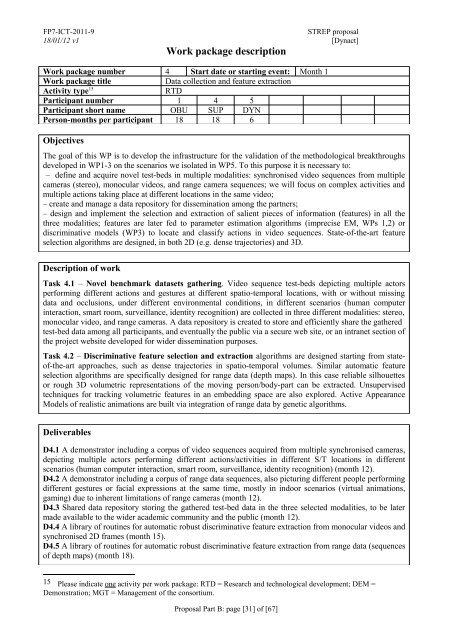

Work package number: 4

Start date or starting event: Month 1

Work package title: Data collection and feature extraction

Activity type [15]: RTD

| Participant number | 1 | 4 | 5 |
|---|---|---|---|
| Participant short name | OBU | SUP | DYN |
| Person-months per participant | 18 | 18 | 6 |

Objectives

The goal of this WP is to develop the infrastructure needed to validate the methodological breakthroughs of WP1-3 on the scenarios identified in WP5. To this end, it is necessary to:

– define and acquire novel test-beds in multiple modalities: synchronised video sequences from multiple cameras (stereo), monocular videos, and range-camera sequences, with a focus on complex activities and on multiple actions taking place at different locations in the same video;

– create and manage a data repository for dissemination among the partners;

– design and implement the selection and extraction of salient pieces of information (features) in all three modalities. These features are then fed either to parameter estimation algorithms (imprecise EM, WP1 and WP2) or to discriminative models (WP3) in order to locate and classify actions in video sequences. State-of-the-art feature selection algorithms are designed for both 2D (e.g. dense trajectories) and 3D data.
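Dense trajectories are named above as a 2D starting point. The sketch below is illustrative only and is not the project's feature library. It computes the trajectory-shape descriptor commonly used with dense trajectories: the frame-to-frame displacements of one tracked point are concatenated and then divided by the sum of their magnitudes. The sketch assumes that the point has already been tracked (e.g. by dense optical flow) and that positions are (x, y) pixel tuples. The function name `trajectory_shape` is hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def trajectory_shape(points: Sequence[Point]) -> List[float]:
    """Normalised displacement descriptor for one tracked point (illustrative sketch).

    Given positions P_t, ..., P_{t+L} of a point tracked over L frames, return the
    flattened displacements (dx, dy) divided by the sum of their magnitudes, so the
    descriptor does not depend on the overall amount of motion.
    """
    if len(points) < 2:
        raise ValueError("need at least two positions to form a displacement")
    # Frame-to-frame displacement vectors Delta P_t = P_{t+1} - P_t.
    disps = [(x1 - x0, y1 - y0) for (x0, y0), (x1, y1) in zip(points, points[1:])]
    total = sum(math.hypot(dx, dy) for dx, dy in disps)
    if total == 0.0:
        # Static point: nothing to normalise; such trajectories are typically discarded.
        return [0.0] * (2 * len(disps))
    return [c / total for d in disps for c in d]
```

For example, the positions (0, 0), (3, 4), (3, 4), (6, 8) give displacement magnitudes 5, 0 and 5, so the descriptor is approximately [0.3, 0.4, 0.0, 0.0, 0.3, 0.4]. Doubling every coordinate leaves the result unchanged, which is the purpose of the normalisation.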

Description of work

Task 4.1 – Gathering of novel benchmark datasets. Video test-beds are collected in three modalities: stereo, monocular video, and range cameras. They depict multiple actors performing different actions and gestures at different spatio-temporal locations, with or without missing data and occlusions, under different environmental conditions and in different scenarios (human-computer interaction, smart room, surveillance, identity recognition). A data repository is created to store the gathered test-bed data and share it efficiently among all participants. The data will eventually also be shared with the public, via either a secure website or an intranet section of the project website developed for wider dissemination purposes.
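The following is a minimal sketch of how entries in the shared repository could be catalogued. It assumes a hypothetical per-sequence record; the field names, the `Modality` values and the helper functions are illustrative and do not form a project specification. Each record carries a SHA-256 checksum so that partners can verify the integrity of downloaded files, and sequences can be filtered by modality and scenario.

```python
import hashlib
from dataclasses import dataclass
from enum import Enum
from typing import Iterable, List


class Modality(Enum):
    # The three acquisition modalities listed in Task 4.1.
    STEREO = "stereo"
    MONOCULAR = "monocular"
    RANGE = "range"


@dataclass(frozen=True)
class Recording:
    """One test-bed sequence in the shared repository (hypothetical schema)."""
    sequence_id: str
    modality: Modality
    scenario: str          # e.g. "smart room", "surveillance"
    num_actors: int
    has_occlusions: bool
    sha256: str            # hex digest of the stored file


def checksum(data: bytes) -> str:
    """Hex SHA-256 digest of a file's contents."""
    return hashlib.sha256(data).hexdigest()


def verify(rec: Recording, data: bytes) -> bool:
    """True if downloaded bytes match the checksum stored in the record."""
    return checksum(data) == rec.sha256


def select(records: Iterable[Recording], modality: Modality, scenario: str) -> List[Recording]:
    """All records acquired in the given modality for the given scenario."""
    return [r for r in records if r.modality is modality and r.scenario == scenario]
```

Storing a checksum with each record means a corrupted or partial transfer is detected on the partner's side without contacting the host. The choice of SHA-256 here is an assumption, not a project decision.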

Task 4.2 – Discriminative feature selection and extraction algorithms are designed starting from state-of-the-art approaches, such as dense trajectories in spatio-temporal volumes. Similar automatic feature selection algorithms are designed specifically for range data (depth maps). From such data, reliable silhouettes or rough 3D volumetric representations of the moving person or body part can be extracted. Unsupervised techniques for tracking volumetric features in an embedding space are also explored. Active Appearance Models of realistic animations are built by integrating range data via genetic algorithms.
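For range data, one simple way to obtain the silhouettes and rough 3D representations mentioned above is depth background subtraction followed by pinhole back-projection. This sketch rests on several assumptions:

- depth maps are in millimetres and stored as nested lists;
- a value of 0 marks a missing reading;
- a pre-recorded depth map of the empty scene serves as the background;
- the threshold is 100 mm;
- the camera intrinsics `fx`, `fy`, `cx` and `cy` are known.

All of these, together with the function names, are illustrative choices rather than the method the project will adopt.

```python
from typing import List, Tuple

# Depth in millimetres; 0 marks a missing reading (assumed sensor convention).
DepthMap = List[List[int]]
Mask = List[List[bool]]


def silhouette(depth: DepthMap, background: DepthMap, threshold_mm: int = 100) -> Mask:
    """Binary foreground mask by depth background subtraction (illustrative sketch).

    A pixel is foreground when both maps hold a valid reading and the current
    depth is closer to the camera than the empty-scene background by more than
    threshold_mm. Pixels with a missing reading are left as background.
    """
    return [
        [d > 0 and b > 0 and (b - d) > threshold_mm for d, b in zip(row_d, row_b)]
        for row_d, row_b in zip(depth, background)
    ]


def back_project(depth: DepthMap, mask: Mask,
                 fx: float, fy: float, cx: float, cy: float) -> List[Tuple[float, float, float]]:
    """Lift foreground pixels to 3D camera coordinates (metres) with a pinhole model.

    X = (u - cx) * Z / fx,  Y = (v - cy) * Z / fy,  Z = depth / 1000.
    """
    points = []
    for v, (row_d, row_m) in enumerate(zip(depth, mask)):
        for u, (d, m) in enumerate(zip(row_d, row_m)):
            if m:
                z = d / 1000.0  # millimetres -> metres
                points.append(((u - cx) * z / fx, (v - cy) * z / fy, z))
    return points
```

Leaving pixels with no valid reading as background is a conservative choice. A practical pipeline would usually add morphological clean-up and temporal filtering before building volumetric representations from the resulting points.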

Deliverables

D4.1 A demonstrator including a corpus of video sequences acquired from multiple synchronised cameras. The sequences depict multiple actors performing different actions/activities at different spatio-temporal (S/T) locations in different scenarios (human-computer interaction, smart room, surveillance, identity recognition) (month 12).

D4.2 A demonstrator including a corpus of range data sequences, likewise depicting different people performing different gestures or facial expressions at the same time. Owing to the inherent limitations of range cameras, these are mostly indoor scenarios (virtual animations, gaming) (month 12).

D4.3 A shared data repository storing the gathered test-bed data in the three selected modalities, to be made available later to the wider academic community and the public (month 12).

D4.4 A library of routines for automatic, robust, discriminative feature extraction from monocular videos and synchronised 2D frames (month 15).

D4.5 A library of routines for automatic, robust, discriminative feature extraction from range data (sequences of depth maps) (month 18).

[15] Please indicate one activity per work package: RTD = Research and technological development; DEM = Demonstration; MGT = Management of the consortium.

Proposal Part B: page 31 of 67