Project Proposal (PDF) - Oxford Brookes University


FP7-ICT-2011-9 STREP proposal<br />

18/01/12 v1 [Dynact]<br />

Task 4.2 – Discriminative feature selection and extraction<br />

Prior to modelling actions, the video streams have to be processed to extract the most discriminative<br />

information: such “features” can be extracted frame by frame, or from the entire spatio-temporal volume<br />

which contains the action(s) of interest. Their selection and extraction are crucial stages of this project.<br />

SubTask 4.2.1 - Feature extraction from conventional video<br />

Although silhouettes have historically often been used to encode the shape of the moving person for<br />

action recognition, their sensitivity to noise and the fact that they require (on conventional 2D cameras)<br />

solving the (inherently ill-defined) background subtraction problem have led to their replacement by feature<br />

selection frameworks in which a large number of low-level operators or filters are applied to images (or, for<br />

actions, spatio-temporal volumes), and the most discriminative ones are retained at a later stage for<br />

recognition purposes. Interest point detectors [107] such as the Harris3D [70], the Cuboid detector [119], and<br />

the Hessian detector [120] may be employed to extract salient video regions, around which a local descriptor<br />

may be calculated. A plethora of local video descriptors have been proposed for space-time volumes, mainly<br />

derived from their 2D counterparts: Cuboid [119], 3D-SIFT [121], HoGHoF [87], HOG3D [122], extended<br />

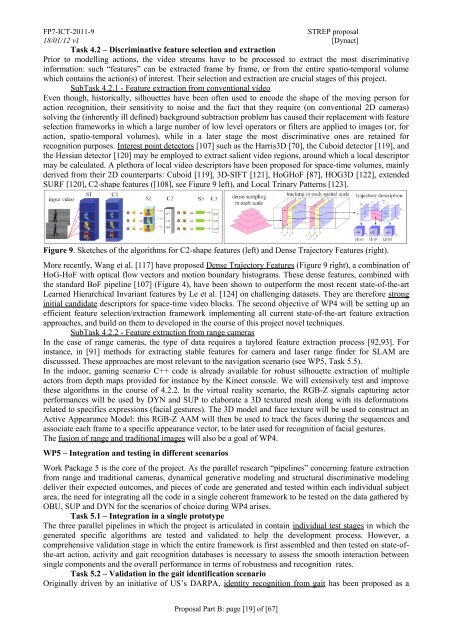

SURF [120], C2-shape features ([108], see Figure 9 left), and Local Trinary Patterns [123].<br />

Figure 9. Sketches of the algorithms for C2-shape features (left) and Dense Trajectory Features (right).<br />
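
Several of the local descriptors listed above (for instance the HoG and HoF families) are built from histograms of oriented vectors, either image gradients or optical flow, computed inside cells of a space-time block. The following is a minimal sketch of that building block only, under simplifying assumptions: hard binning, signed orientations, and no dedicated zero-motion bin. The function name `orientation_histogram` and its parameters are illustrative and are not taken from any of the cited works.

```python
import math


def orientation_histogram(vectors, n_bins=8):
    """Magnitude-weighted histogram of vector orientations, L1-normalised.

    `vectors` is a list of (dx, dy) pairs, e.g. optical-flow vectors or image
    gradients sampled inside one cell of a space-time block (assumed input).
    """
    hist = [0.0] * n_bins
    bin_width = 2.0 * math.pi / n_bins
    for dx, dy in vectors:
        magnitude = math.hypot(dx, dy)
        if magnitude == 0.0:
            continue  # zero vectors carry no orientation
        angle = math.atan2(dy, dx) % (2.0 * math.pi)  # map to [0, 2*pi)
        index = min(int(angle / bin_width), n_bins - 1)  # guard rounding at 2*pi
        hist[index] += magnitude
    total = sum(hist)
    return [h / total for h in hist] if total > 0 else hist


# Four-bin example: two vectors point up-right, one up-left, one down-left.
example = orientation_histogram([(1, 1), (1, 1), (-1, 1), (-1, -1)], n_bins=4)
```

A full descriptor would concatenate such histograms over a grid of cells in space and time; the grid layout and normalisation differ between the cited methods.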

More recently, Wang et al. [117] have proposed Dense Trajectory Features (Figure 9 right), a combination of<br />

HoG-HoF with optical flow vectors and motion boundary histograms. These dense features, combined with<br />

the standard BoF pipeline [107] (Figure 4), have been shown to outperform the most recent state-of-the-art<br />

Learned Hierarchical Invariant features by Le et al. [124] on challenging datasets. They are therefore strong<br />

initial candidate descriptors for space-time video blocks. The second objective of WP4 will be setting up an<br />

efficient feature selection/extraction framework implementing all current state-of-the-art feature extraction<br />

approaches, and building on them to develop novel techniques in the course of this project.<br />
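
The BoF pipeline mentioned above can be illustrated with a small, dependency-free sketch: a codebook is learned by clustering training descriptors, and each video is then represented by a normalised histogram of its nearest codewords. This is an assumption-laden illustration rather than the project's implementation: plain k-means is used for the codebook, descriptors are short lists of numbers, and all function names (`learn_codebook`, `bof_histogram`, and so on) are hypothetical.

```python
import random


def squared_distance(a, b):
    """Squared Euclidean distance between two descriptors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def nearest(codebook, descriptor):
    """Index of the codeword closest to `descriptor`."""
    return min(range(len(codebook)),
               key=lambda k: squared_distance(codebook[k], descriptor))


def learn_codebook(descriptors, k, iterations=20, seed=0):
    """Plain k-means (Lloyd's algorithm) over training descriptors."""
    rng = random.Random(seed)
    codebook = [list(d) for d in rng.sample(descriptors, k)]
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for d in descriptors:
            clusters[nearest(codebook, d)].append(d)
        for j, members in enumerate(clusters):
            if members:  # keep the old centre if a cluster empties
                codebook[j] = [sum(col) / len(members) for col in zip(*members)]
    return codebook


def bof_histogram(codebook, descriptors):
    """L1-normalised histogram of codeword occurrences for one video."""
    counts = [0] * len(codebook)
    for d in descriptors:
        counts[nearest(codebook, d)] += 1
    total = sum(counts)
    return [c / total for c in counts] if total else [0.0] * len(codebook)


# Toy usage: 2-D "descriptors" stand in for real space-time descriptors.
training = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
codebook = learn_codebook(training, k=2)
video_signature = bof_histogram(codebook, [(0, 1), (9, 9), (1, 1)])
```

The resulting fixed-length histogram is what a classifier would consume; the choice of classifier and codebook size is left open here.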

SubTask 4.2.2 - Feature extraction from range cameras<br />

In the case of range cameras, the type of data requires a tailored feature extraction process [92,93]. For<br />

instance, in [91] methods for extracting stable features for camera and laser range finder for SLAM are<br />

discussed. These approaches are most relevant to the navigation scenario (see WP5, Task 5.5).<br />

In the indoor gaming scenario, C++ code is already available for robust silhouette extraction of multiple<br />

actors from depth maps provided, for instance, by the Kinect console. We will extensively test and improve<br />

these algorithms in the course of SubTask 4.2.2. In the virtual reality scenario, the RGB-Z signals capturing actor<br />

performances will be used by DYN and SUP to elaborate a 3D textured mesh along with its deformations<br />

related to specific expressions (facial gestures). The 3D model and face texture will be used to construct an<br />

Active Appearance Model: this RGB-Z AAM will then be used to track the faces during the sequences and<br />

associate each frame to a specific appearance vector, to be later used for recognition of facial gestures.<br />
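
To make the depth-map silhouette extraction for the gaming scenario more concrete, the sketch below shows one very simple way of separating multiple actors: pixels inside a depth band are treated as foreground, and 4-connected foreground regions are labelled as separate silhouettes. This is not the existing C++ code referred to above. It is an illustrative baseline under stated assumptions: the depth map is a list of rows of numeric readings, 0 means "no reading", and the names `extract_silhouettes`, `near`, `far` and `min_pixels` are hypothetical.

```python
from collections import deque


def extract_silhouettes(depth, near, far, min_pixels=1):
    """Label connected foreground regions of a depth map.

    Pixels with near <= depth <= far count as foreground. Returns
    (labels, count), where labels[y][x] is 0 for background or 1..count
    for each retained region. Regions smaller than `min_pixels` are dropped.
    """
    height, width = len(depth), len(depth[0])
    labels = [[0] * width for _ in range(height)]
    visited = [[False] * width for _ in range(height)]

    def is_foreground(y, x):
        return near <= depth[y][x] <= far

    count = 0
    for y0 in range(height):
        for x0 in range(width):
            if visited[y0][x0] or not is_foreground(y0, x0):
                continue
            # Breadth-first flood fill over 4-connected neighbours.
            visited[y0][x0] = True
            queue = deque([(y0, x0)])
            region = [(y0, x0)]
            while queue:
                y, x = queue.popleft()
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < height and 0 <= nx < width
                            and not visited[ny][nx] and is_foreground(ny, nx)):
                        visited[ny][nx] = True
                        queue.append((ny, nx))
                        region.append((ny, nx))
            if len(region) >= min_pixels:  # discard speckle noise
                count += 1
                for y, x in region:
                    labels[y][x] = count
    return labels, count


# Toy depth map in millimetres (assumed unit): two actors and one noisy pixel.
toy_depth = [
    [0, 1200, 1200, 0, 0],
    [0, 1250, 0, 0, 2000],
    [0, 0, 0, 0, 2050],
    [900, 0, 0, 0, 4000],
]
toy_labels, toy_count = extract_silhouettes(toy_depth, near=800, far=2500, min_pixels=2)
```

A robust extractor would also need to handle actors touching or occluding each other, which a fixed depth band and connectivity alone cannot resolve.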

The fusion of range and traditional images will also be a goal of WP4.<br />

WP5 – Integration and testing in different scenarios<br />

Work Package 5 is the core of the project. As the parallel research “pipelines” concerning feature extraction<br />

from range and traditional cameras, dynamical generative modeling and structural discriminative modeling<br />

deliver their expected outcomes, and pieces of code are generated and tested within each individual subject<br />

area, all the code needs to be integrated into a single coherent framework and tested on the data gathered by<br />

OBU, SUP and DYN during WP4 for the scenarios of choice.<br />

Task 5.1 – Integration in a single prototype<br />

The three parallel pipelines into which the project is articulated contain individual test stages in which the<br />

specific algorithms generated are tested and validated to support the development process. However, a<br />

comprehensive validation stage in which the entire framework is first assembled and then tested on state-of-the-art<br />

action, activity and gait recognition databases is necessary to assess the smooth interaction between<br />

single components and the overall performance in terms of robustness and recognition rates.<br />

Task 5.2 – Validation in the gait identification scenario<br />

Originally driven by an initiative of the US agency DARPA, identity recognition from gait has been proposed as a<br />

<strong>Proposal</strong> Part B: page [19] of [67]