Project Proposal (PDF) - Oxford Brookes University

Project Proposal (PDF) - Oxford Brookes University

Project Proposal (PDF) - Oxford Brookes University

Create successful ePaper yourself

Turn your PDF publications into a flip-book with our unique Google optimized e-Paper software.

FP7-ICT-2011-9 STREP proposal<br />

18/01/12 v1 [Dynact]<br />

conducted in small, controlled environments, even though attempts have been recently made to go towards<br />

action recognition “in the wild” [37]. If we remove the assumption that a single motion of interest is present<br />

in our video(s), locality emerges too as a critical factor: a person can walk and wave at the same time;<br />

different actors may perform different activities in the same room. Indeed, action localization (in both space<br />

and time) within a given video sequence is a necessary first step in any recognition framework. However,<br />

most current research normally assumes that the motion of interest has already been segmented, and focuses<br />

on the classification of the given segment. This is reflected in the inadequate number of action<br />

localization/detection (versus recognition) test-beds currently available.<br />

Current research tends as well to focus on single-actor videos, as the presence of multiple actors greatly<br />

complicates both localization and recognition.<br />

In addition, learning a large, realistic number of action categories requires the collection of huge training<br />

sets, and triggers the problem of how to classify large amounts of complex data. Most importantly, learning<br />

action models from a limited training set poses serious overfitting issues: if very few instances of each action<br />

category are available the model describes well the available examples, but has limited generalisation power,<br />

i.e., it performs badly on new data.<br />

Finally, a serious challenge arises when we move from simple, “atomic” actions to more complex,<br />

sophisticated “activities”, seen as series of elementary actions structured in a meaningful way.<br />

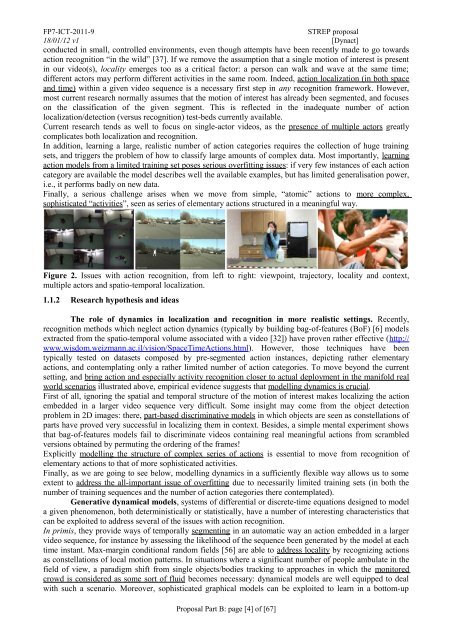

Figure 2. Issues with action recognition, from left to right: viewpoint, trajectory, locality and context,<br />

multiple actors and spatio-temporal localization.<br />

1.1.2 Research hypothesis and ideas<br />

The role of dynamics in localization and recognition in more realistic settings. Recently,<br />

recognition methods which neglect action dynamics (typically by building bag-of-features (BoF) [6] models<br />

extracted from the spatio-temporal volume associated with a video [32]) have proven rather effective (http://<br />

www.wisdom.weizmann.ac.il/vision/SpaceTimeActions.html). However, those techniques have been<br />

typically tested on datasets composed by pre-segmented action instances, depicting rather elementary<br />

actions, and contemplating only a rather limited number of action categories. To move beyond the current<br />

setting, and bring action and especially activity recognition closer to actual deployment in the manifold real<br />

world scenarios illustrated above, empirical evidence suggests that modelling dynamics is crucial.<br />

First of all, ignoring the spatial and temporal structure of the motion of interest makes localizing the action<br />

embedded in a larger video sequence very difficult. Some insight may come from the object detection<br />

problem in 2D images: there, part-based discriminative models in which objects are seen as constellations of<br />

parts have proved very successful in localizing them in context. Besides, a simple mental experiment shows<br />

that bag-of-features models fail to discriminate videos containing real meaningful actions from scrambled<br />

versions obtained by permuting the ordering of the frames!<br />

Explicitly modelling the structure of complex series of actions is essential to move from recognition of<br />

elementary actions to that of more sophisticated activities.<br />

Finally, as we are going to see below, modelling dynamics in a sufficiently flexible way allows us to some<br />

extent to address the all-important issue of overfitting due to necessarily limited training sets (in both the<br />

number of training sequences and the number of action categories there contemplated).<br />

Generative dynamical models, systems of differential or discrete-time equations designed to model<br />

a given phenomenon, both deterministically or statistically, have a number of interesting characteristics that<br />

can be exploited to address several of the issues with action recognition.<br />

In primis, they provide ways of temporally segmenting in an automatic way an action embedded in a larger<br />

video sequence, for instance by assessing the likelihood of the sequence been generated by the model at each<br />

time instant. Max-margin conditional random fields [56] are able to address locality by recognizing actions<br />

as constellations of local motion patterns. In situations where a significant number of people ambulate in the<br />

field of view, a paradigm shift from single objects/bodies tracking to approaches in which the monitored<br />

crowd is considered as some sort of fluid becomes necessary: dynamical models are well equipped to deal<br />

with such a scenario. Moreover, sophisticated graphical models can be exploited to learn in a bottom-up<br />

<strong>Proposal</strong> Part B: page [4] of [67]