Project Proposal (PDF) - Oxford Brookes University

FP7-ICT-2011-9 STREP proposal

18/01/12 v1 [Dynact]

Work package description

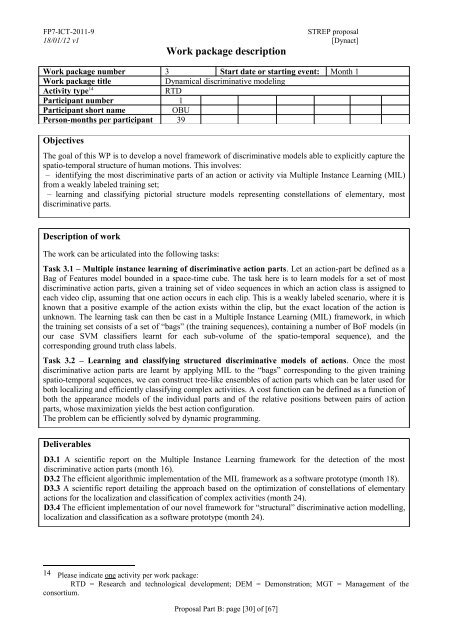

Work package number: 3

Start date or starting event: Month 1

Work package title: Dynamical discriminative modeling

Activity type [14]: RTD

Participant number: 1

Participant short name: OBU

Person-months per participant: 39

Objectives

The goal of this WP is to develop a novel framework of discriminative models able to explicitly capture the spatio-temporal structure of human motions. This involves:

– identifying the most discriminative parts of an action or activity via Multiple Instance Learning (MIL) from a weakly labeled training set;

– learning and classifying pictorial structure models representing constellations of the most discriminative elementary parts.

Description of work

The work can be articulated into the following tasks:

Task 3.1 – Multiple instance learning of discriminative action parts. Let an action part be defined as a Bag of Features (BoF) model bounded in a space-time cube. The task here is to learn models for the most discriminative action parts, given a training set of video sequences in which an action class is assigned to each video clip, assuming that one action occurs in each clip. This is a weakly labeled scenario: it is known that a positive example of the action exists within the clip, but the exact location of the action is unknown. The learning task can then be cast in a Multiple Instance Learning (MIL) framework, in which the training set consists of a set of “bags” (the training sequences), each containing a number of BoF models (in our case SVM classifiers learnt for each sub-volume of the spatio-temporal sequence), together with the corresponding ground truth class labels.
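As an illustration only, the alternating scheme that typically underlies such an MIL formulation can be sketched as follows. The sketch rests on several assumptions that are not part of the proposal: each sub-volume is summarised by a small fixed-length histogram, a centroid-difference linear scorer stands in for the SVM, and all function names (`fit_linear`, `train_mil`, `classify_bag`) are hypothetical. In each round the highest-scoring instance of every positive bag is selected as its "witness", and the scorer is refitted on the witnesses against all instances of the negative bags.

```python
from typing import List, Sequence, Tuple

Vector = Sequence[float]
Bag = List[Vector]  # one video clip = a bag of sub-volume descriptors


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def mean(vectors: List[Vector]) -> List[float]:
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]


def fit_linear(pos: List[Vector], neg: List[Vector]) -> Tuple[List[float], float]:
    """Centroid-difference linear scorer (an assumed stand-in for an SVM)."""
    mu_p, mu_n = mean(pos), mean(neg)
    w = [p - n for p, n in zip(mu_p, mu_n)]
    midpoint = [(p + n) / 2 for p, n in zip(mu_p, mu_n)]
    b = -dot(w, midpoint)  # decision boundary passes through the midpoint
    return w, b


def train_mil(pos_bags: List[Bag], neg_bags: List[Bag], max_iter: int = 20):
    """Alternate between fitting the scorer and re-selecting one witness per positive bag."""
    neg = [x for bag in neg_bags for x in bag]
    # Initialisation: treat every instance of a positive bag as positive.
    pos = [x for bag in pos_bags for x in bag]
    witnesses = None
    for _ in range(max_iter):
        w, b = fit_linear(pos, neg)
        # Index of the highest-scoring instance in each positive bag.
        selected = [max(range(len(bag)), key=lambda i: dot(w, bag[i]))
                    for bag in pos_bags]
        if selected == witnesses:
            break  # the witness set is stable, so the scorer is too
        witnesses = selected
        pos = [bag[i] for bag, i in zip(pos_bags, witnesses)]
    return w, b, witnesses


def classify_bag(bag: Bag, w: Vector, b: float) -> bool:
    """A bag is positive if at least one of its instances scores above zero."""
    return max(dot(w, x) + b for x in bag) > 0


# Toy data: 2-bin histograms; the "action" instances lie near (1, 0).
pos_bags = [[(0.1, 0.9), (0.9, 0.1), (0.0, 1.0)], [(1.0, 0.0), (0.2, 0.9)]]
neg_bags = [[(0.1, 0.9), (0.0, 1.0)], [(0.2, 0.8), (0.1, 1.0)]]
w, b, witnesses = train_mil(pos_bags, neg_bags)
```

On this toy data the selected witnesses are the instances near (1, 0), which is the localisation information that the clip-level labels alone do not provide.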

Task 3.2 – Learning and classifying structured discriminative models of actions. Once the most discriminative action parts are learnt by applying MIL to the “bags” corresponding to the given training spatio-temporal sequences, we can construct tree-like ensembles of action parts which can later be used for both localizing and efficiently classifying complex activities. An objective function can be defined in terms of both the appearance models of the individual parts and the relative positions between pairs of action parts; its maximization yields the best action configuration.

Because the parts are arranged in a tree, the problem can be efficiently solved by dynamic programming.
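A minimal sketch of such a dynamic program is given below, under assumptions that go beyond the text: each part has a finite list of candidate space-time locations with precomputed appearance scores, the pairwise term is a quadratic penalty on the deviation from an expected parent-to-child offset, and the objective is the sum of appearance scores minus these penalties. The names `deformation` and `best_configuration` are hypothetical.

```python
from typing import Dict, List, Tuple

Location = Tuple[float, float, float]  # (x, y, t) centre of a space-time cube


def deformation(parent_loc, child_loc, offset, weight=1.0):
    """Quadratic penalty for deviating from the expected parent-to-child offset."""
    return weight * sum((c - p - o) ** 2
                        for p, c, o in zip(parent_loc, child_loc, offset))


def best_configuration(children: Dict[int, List[int]],
                       candidates: Dict[int, List[Location]],
                       appearance: Dict[int, List[float]],
                       offsets: Dict[Tuple[int, int], Location],
                       root: int = 0):
    """Max-sum dynamic programming over a tree of parts.

    children[i]      : ids of the children of part i
    candidates[i]    : candidate locations of part i
    appearance[i][k] : appearance score of part i at candidates[i][k]
    offsets[(i, j)]  : expected displacement from parent i to child j
    """
    best_child = {}  # (child id, parent candidate index) -> child candidate index

    def upward(i):
        # score[k]: best total score of the subtree rooted at i, with i at candidate k
        score = list(appearance[i])
        for j in children.get(i, []):
            sub = upward(j)
            for k, loc_i in enumerate(candidates[i]):
                vals = [sub[m] - deformation(loc_i, loc_j, offsets[(i, j)])
                        for m, loc_j in enumerate(candidates[j])]
                m_best = max(range(len(vals)), key=vals.__getitem__)
                best_child[(j, k)] = m_best
                score[k] += vals[m_best]
        return score

    root_score = upward(root)
    k_root = max(range(len(root_score)), key=root_score.__getitem__)

    # Backtrack from the root to recover the location of every part.
    assignment = {root: k_root}
    stack = [root]
    while stack:
        i = stack.pop()
        for j in children.get(i, []):
            assignment[j] = best_child[(j, assignment[i])]
            stack.append(j)
    return root_score[k_root], {i: candidates[i][k] for i, k in assignment.items()}


# Toy star model: root part 0 with two children.
children = {0: [1, 2]}
candidates = {0: [(0, 0, 0), (10, 0, 0)],
              1: [(1, 0, 0), (11, 0, 0)],
              2: [(0, 0, 5), (10, 0, 5)]}
appearance = {0: [1.0, 0.8], 1: [0.2, 1.0], 2: [0.1, 0.9]}
offsets = {(0, 1): (1, 0, 0), (0, 2): (0, 0, 5)}
total, config = best_configuration(children, candidates, appearance, offsets)
```

In this toy example the root candidate with the highest appearance score on its own is not the one selected: the jointly best configuration places the root at (10, 0, 0), where both children also score well. With n parts and h candidate locations per part, this recursion evaluates on the order of n·h² parent-child pairs, rather than enumerating all hⁿ joint configurations.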

Deliverables

D3.1 A scientific report on the Multiple Instance Learning framework for the detection of the most discriminative action parts (month 16).

D3.2 The efficient algorithmic implementation of the MIL framework as a software prototype (month 18).

D3.3 A scientific report detailing the approach based on the optimization of constellations of elementary actions for the localization and classification of complex activities (month 24).

D3.4 The efficient implementation of our novel framework for “structural” discriminative action modelling, localization and classification as a software prototype (month 24).

[14] Please indicate one activity per work package: RTD = Research and technological development; DEM = Demonstration; MGT = Management of the consortium.
