Project Proposal (PDF) - Oxford Brookes University

Project Proposal (PDF) - Oxford Brookes University

Project Proposal (PDF) - Oxford Brookes University

Create successful ePaper yourself

Turn your PDF publications into a flip-book with our unique Google optimized e-Paper software.

FP7-ICT-2011-9 STREP proposal<br />

18/01/12 v1 [Dynact]<br />

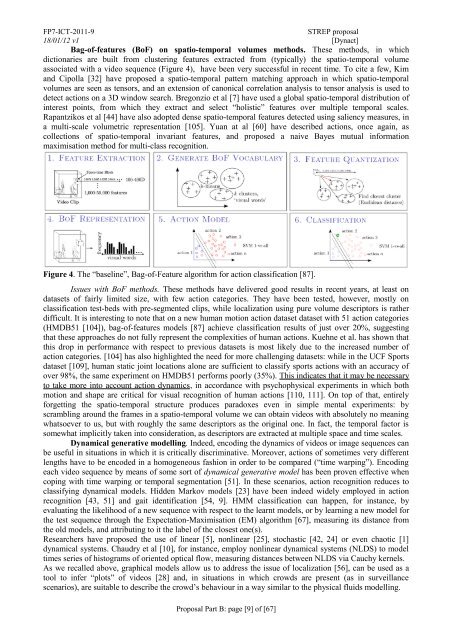

Bag-of-features (BoF) on spatio-temporal volumes methods. These methods, in which<br />

dictionaries are built from clustering features extracted from (typically) the spatio-temporal volume<br />

associated with a video sequence (Figure 4), have been very successful in recent time. To cite a few, Kim<br />

and Cipolla [32] have proposed a spatio-temporal pattern matching approach in which spatio-temporal<br />

volumes are seen as tensors, and an extension of canonical correlation analysis to tensor analysis is used to<br />

detect actions on a 3D window search. Bregonzio et al [7] have used a global spatio-temporal distribution of<br />

interest points, from which they extract and select “holistic” features over multiple temporal scales.<br />

Rapantzikos et al [44] have also adopted dense spatio-temporal features detected using saliency measures, in<br />

a multi-scale volumetric representation [105]. Yuan at al [60] have described actions, once again, as<br />

collections of spatio-temporal invariant features, and proposed a naive Bayes mutual information<br />

maximisation method for multi-class recognition.<br />

Figure 4. The “baseline”, Bag-of-Feature algorithm for action classification [87].<br />

Issues with BoF methods. These methods have delivered good results in recent years, at least on<br />

datasets of fairly limited size, with few action categories. They have been tested, however, mostly on<br />

classification test-beds with pre-segmented clips, while localization using pure volume descriptors is rather<br />

difficult. It is interesting to note that on a new human motion action dataset dataset with 51 action categories<br />

(HMDB51 [104]), bag-of-features models [87] achieve classification results of just over 20%, suggesting<br />

that these approaches do not fully represent the complexities of human actions. Kuehne et al. has shown that<br />

this drop in performance with respect to previous datasets is most likely due to the increased number of<br />

action categories. [104] has also highlighted the need for more challenging datasets: while in the UCF Sports<br />

dataset [109], human static joint locations alone are sufficient to classify sports actions with an accuracy of<br />

over 98%, the same experiment on HMDB51 performs poorly (35%). This indicates that it may be necessary<br />

to take more into account action dynamics, in accordance with psychophysical experiments in which both<br />

motion and shape are critical for visual recognition of human actions [110, 111]. On top of that, entirely<br />

forgetting the spatio-temporal structure produces paradoxes even in simple mental experiments: by<br />

scrambling around the frames in a spatio-temporal volume we can obtain videos with absolutely no meaning<br />

whatsoever to us, but with roughly the same descriptors as the original one. In fact, the temporal factor is<br />

somewhat implicitly taken into consideration, as descriptors are extracted at multiple space and time scales.<br />

Dynamical generative modelling. Indeed, encoding the dynamics of videos or image sequences can<br />

be useful in situations in which it is critically discriminative. Moreover, actions of sometimes very different<br />

lengths have to be encoded in a homogeneous fashion in order to be compared (“time warping”). Encoding<br />

each video sequence by means of some sort of dynamical generative model has been proven effective when<br />

coping with time warping or temporal segmentation [51]. In these scenarios, action recognition reduces to<br />

classifying dynamical models. Hidden Markov models [23] have been indeed widely employed in action<br />

recognition [43, 51] and gait identification [54, 9]. HMM classification can happen, for instance, by<br />

evaluating the likelihood of a new sequence with respect to the learnt models, or by learning a new model for<br />

the test sequence through the Expectation-Maximisation (EM) algorithm [67], measuring its distance from<br />

the old models, and attributing to it the label of the closest one(s).<br />

Researchers have proposed the use of linear [5], nonlinear [25], stochastic [42, 24] or even chaotic [1]<br />

dynamical systems. Chaudry et al [10], for instance, employ nonlinear dynamical systems (NLDS) to model<br />

times series of histograms of oriented optical flow, measuring distances between NLDS via Cauchy kernels.<br />

As we recalled above, graphical models allow us to address the issue of localization [56], can be used as a<br />

tool to infer “plots” of videos [28] and, in situations in which crowds are present (as in surveillance<br />

scenarios), are suitable to describe the crowd’s behaviour in a way similar to the physical fluids modelling.<br />

<strong>Proposal</strong> Part B: page [9] of [67]