Project Proposal (PDF) - Oxford Brookes University

Project Proposal (PDF) - Oxford Brookes University

Project Proposal (PDF) - Oxford Brookes University

Create successful ePaper yourself

Turn your PDF publications into a flip-book with our unique Google optimized e-Paper software.

FP7-ICT-2011-9 STREP proposal<br />

18/01/12 v1 [Dynact]<br />

training set consists of a set of “bags” (the training sequences), containing a number of BoF models (or<br />

“examples”, in our case SVM classifiers learnt for each sub-volume of the spatio-temporal sequence), and<br />

the corresponding ground truth class labels. The class label for each bag (sequence) is positive if there exists<br />

at least one positive example (sub-volume) in the bag (which ones are positive we initially do not know): see<br />

Figure 6. An initial “positive” model is learned by assuming that all examples in the positive bag are indeed<br />

positive (all sub-volumes of the sequence do contain the action at hand), while a negative model is learned<br />

from the examples in the negative bag (videos labelled with a different action category). Initial models are<br />

updated in an iterative process: eventually, only the most discriminative examples in each positive bag are<br />

retained as positive.<br />

A MIL/Latent-SVM framework has been used in [116],<br />

where possible object part bounding box locations were<br />

cast as latent variables. Such an approach permits the selfadjustment<br />

of the positive ground truth data, better<br />

aligning the learned objects filters during training. In<br />

action detection, Hu et al.'s SMILE-SVM focuses on the<br />

detection of 2D action boxes, and requires the approximate<br />

labelling of the frames (and human heads) in which the<br />

actions occur. In contrast, we propose to cast 3D bounding<br />

structures as latent variables. When using standard SVMs<br />

in conjunction with BoF as single part models, MIL<br />

becomes a semi-convex optimisation problem, for which<br />

Andrews et al. have proposed two heuristic approaches<br />

[126], of which the mixed-integer formulation suits the<br />

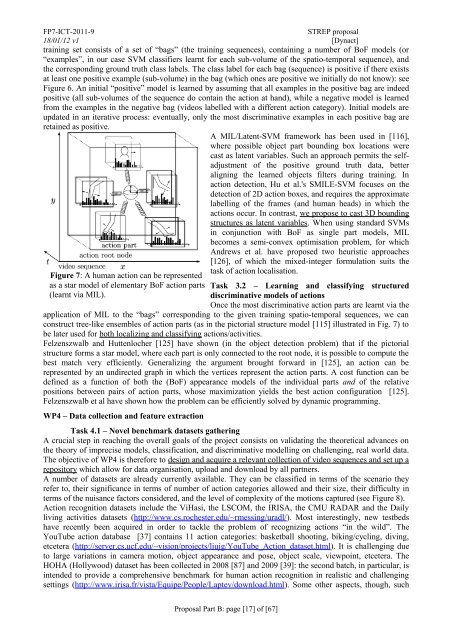

Figure 7: A human action can be represented<br />

as a star model of elementary BoF action parts<br />

(learnt via MIL).<br />

task of action localisation.<br />

Task 3.2 – Learning and classifying structured<br />

discriminative models of actions<br />

Once the most discriminative action parts are learnt via the<br />

application of MIL to the “bags” corresponding to the given training spatio-temporal sequences, we can<br />

construct tree-like ensembles of action parts (as in the pictorial structure model [115] illustrated in Fig. 7) to<br />

be later used for both localizing and classifying actions/activities.<br />

Felzenszwalb and Huttenlocher [125] have shown (in the object detection problem) that if the pictorial<br />

structure forms a star model, where each part is only connected to the root node, it is possible to compute the<br />

best match very efficiently. Generalizing the argument brought forward in [125], an action can be<br />

represented by an undirected graph in which the vertices represent the action parts. A cost function can be<br />

defined as a function of both the (BoF) appearance models of the individual parts and of the relative<br />

positions between pairs of action parts, whose maximization yields the best action configuration [125].<br />

Felzenszwalb et al have shown how the problem can be efficiently solved by dynamic programming.<br />

WP4 – Data collection and feature extraction<br />

Task 4.1 – Novel benchmark datasets gathering<br />

A crucial step in reaching the overall goals of the project consists on validating the theoretical advances on<br />

the theory of imprecise models, classification, and discriminative modelling on challenging, real world data.<br />

The objective of WP4 is therefore to design and acquire a relevant collection of video sequences and set up a<br />

repository which allow for data organisation, upload and download by all partners.<br />

A number of datasets are already currently available. They can be classified in terms of the scenario they<br />

refer to, their significance in terms of number of action categories allowed and their size, their difficulty in<br />

terms of the nuisance factors considered, and the level of complexity of the motions captured (see Figure 8).<br />

Action recognition datasets include the ViHasi, the LSCOM, the IRISA, the CMU RADAR and the Daily<br />

living activities datasets (http://www.cs.rochester.edu/~rmessing/uradl/). Most interestingly, new testbeds<br />

have recently been acquired in order to tackle the problem of recognizing actions “in the wild”. The<br />

YouTube action database [37] contains 11 action categories: basketball shooting, biking/cycling, diving,<br />

etcetera (http://server.cs.ucf.edu/~vision/projects/liujg/YouTube_Action_dataset.html). It is challenging due<br />

to large variations in camera motion, object appearance and pose, object scale, viewpoint, etcetera. The<br />

HOHA (Hollywood) dataset has been collected in 2008 [87] and 2009 [39]: the second batch, in particular, is<br />

intended to provide a comprehensive benchmark for human action recognition in realistic and challenging<br />

settings (http://www.irisa.fr/vista/Equipe/People/Laptev/download.html). Some other aspects, though, such<br />

<strong>Proposal</strong> Part B: page [17] of [67]