Intel® Visual


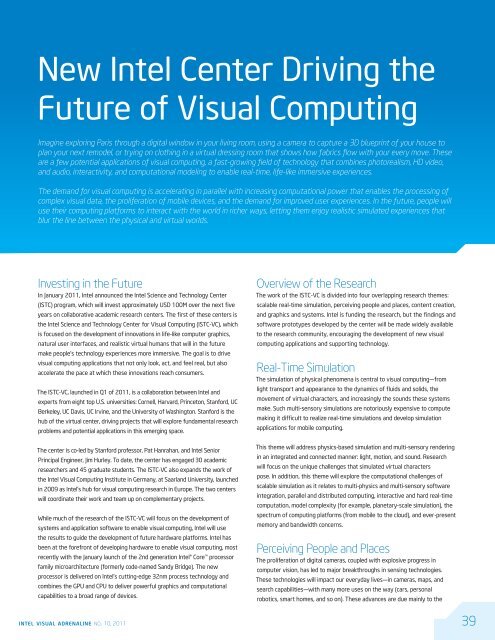

New Intel Center Driving the Future of Visual Computing

Imagine exploring Paris through a digital window in your living room, using a camera to capture a 3D blueprint of your house to plan your next remodel, or trying on clothing in a virtual dressing room that shows how fabrics flow with your every move. These are a few potential applications of visual computing, a fast-growing field of technology that combines photorealism, HD video and audio, interactivity, and computational modeling to enable real-time, life-like immersive experiences.

Demand for visual computing is accelerating, driven by growing computational power that makes it possible to process complex visual data, by the proliferation of mobile devices, and by users' desire for better experiences. In the future, people will use their computing platforms to interact with the world in richer ways, enjoying realistic simulated experiences that blur the line between the physical and virtual worlds.

Investing in the Future

In January 2011, Intel announced the Intel Science and Technology Center (ISTC) program, which will invest approximately USD 100M over the next five years in collaborative academic research centers. The first of these centers is the Intel Science and Technology Center for Visual Computing (ISTC-VC), which focuses on innovations in life-like computer graphics, natural user interfaces, and realistic virtual humans that will make people's technology experiences more immersive. The goal is to drive visual computing applications that look, act, and feel real, and to accelerate the pace at which these innovations reach consumers.

The ISTC-VC, launched in Q1 of 2011, is a collaboration between Intel and experts from eight top U.S. universities: Cornell, Harvard, Princeton, Stanford, UC Berkeley, UC Davis, UC Irvine, and the University of Washington. Stanford is the hub of the virtual center, driving projects that will explore fundamental research problems and potential applications in this emerging space.

The center is co-led by Stanford professor Pat Hanrahan and Intel Senior Principal Engineer Jim Hurley. To date, the center has engaged 30 academic researchers and 45 graduate students. The ISTC-VC also extends the work of the Intel Visual Computing Institute at Saarland University in Germany, which Intel launched in 2009 as its hub for visual computing research in Europe. The two centers will coordinate their work and team up on complementary projects.

While much of the ISTC-VC's research will focus on developing the systems and application software that enable visual computing, Intel will use the results to guide the development of future hardware platforms. Intel has been at the forefront of developing hardware for visual computing, most recently with the January launch of the 2nd generation Intel® Core processor family, built on the microarchitecture formerly code-named Sandy Bridge. The new processors are manufactured on Intel's cutting-edge 32nm process technology and combine the CPU and GPU on a single chip to deliver powerful graphics and computational capabilities to a broad range of devices.

Overview of the Research

The work of the ISTC-VC is divided into four overlapping research themes: scalable real-time simulation, perceiving people and places, content creation, and graphics and systems. Intel is funding the research, but the findings and software prototypes developed by the center will be made widely available to the research community, encouraging the development of new visual computing applications and supporting technology.

Real-Time Simulation

Simulating physical phenomena is central to visual computing, from light transport and appearance to the dynamics of fluids and solids, the movement of virtual characters, and, increasingly, the sounds these systems make. Such multi-sensory simulations are notoriously expensive to compute, which makes it difficult to run them in real time and to develop simulation applications for mobile devices.

This theme will address physics-based simulation and multi-sensory rendering (light, motion, and sound) in an integrated, connected way. Research will focus on the unique challenges posed by simulated virtual characters. In addition, this theme will explore the computational challenges of scalable simulation, including multi-physics and multi-sensory software integration; parallel and distributed computing; interactive and hard real-time computation; model complexity (for example, planetary-scale simulation); the spectrum of computing platforms, from mobile devices to the cloud; and ever-present memory and bandwidth constraints.

Perceiving People and Places

The proliferation of digital cameras, coupled with explosive progress in computer vision, has led to major breakthroughs in sensing technologies. These technologies will affect our everyday lives through cameras, maps, and search, with many more uses on the way (cars, personal robotics, smart homes, and so on). These advances are due mainly to the

intel visual adrenaline no. 10, 2011 39