Eric lippert - Amazon Web Services

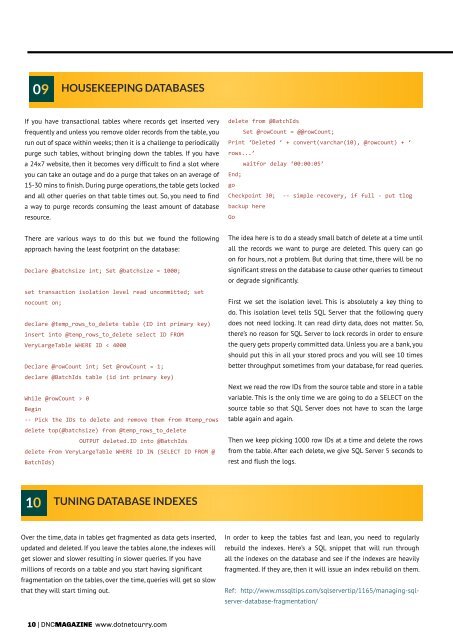

09

Housekeeping databases

If you have transactional tables where records are inserted so frequently that you run out of space within weeks unless you remove older records, it becomes a challenge to purge those tables periodically without taking them offline. If you run a 24x7 website, it is very difficult to find a slot for an outage long enough for a purge that takes 15-30 minutes on average. During a purge, the table gets locked and all other queries on that table time out. So you need a way to purge records that consumes the least amount of database resources.

There are various ways to do this, but we found the following approach has the least footprint on the database:

    Declare @batchsize int; Set @batchsize = 1000;

    set transaction isolation level read uncommitted;
    set nocount on;

    -- Read the IDs to purge once into a table variable
    declare @temp_rows_to_delete table (ID int primary key);
    insert into @temp_rows_to_delete
        select ID from VeryLargeTable where ID < 4000;

    Declare @rowCount int; Set @rowCount = 1;
    declare @BatchIds table (ID int primary key);

    While @rowCount > 0
    Begin
        -- Pick the next batch of IDs and remove them from @temp_rows_to_delete
        delete top (@batchsize) from @temp_rows_to_delete
            OUTPUT deleted.ID into @BatchIds;

        -- Delete the matching rows from the large table
        delete from VeryLargeTable where ID in (select ID from @BatchIds);

        -- Empty the batch; @@ROWCOUNT is now the batch size, and 0 once
        -- @temp_rows_to_delete is exhausted, which ends the loop
        delete from @BatchIds;
        Set @rowCount = @@ROWCOUNT;
        Print 'Deleted ' + convert(varchar(10), @rowCount) + ' rows...';

        -- Give SQL Server a 5-second break before the next batch
        waitfor delay '00:00:05';
    End;
    go

    Checkpoint 30; -- simple recovery model; under full recovery, take a transaction log backup here instead
    Go

The idea is to delete a steady stream of small batches until all the records we want to purge are gone. The query can run for hours, and that is not a problem: during that time it puts no significant stress on the database, so other queries do not time out or degrade significantly.
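To put "hours" into numbers, here is a small Python sketch of the arithmetic. It assumes the loop shape of the T-SQL listing above: one pass per full or partial batch of @batchsize IDs, plus a final pass that finds no IDs left but still runs WAITFOR DELAY once before the WHILE check ends the loop. It counts only the pauses, so the real run time will be longer by however long the deletes themselves take. The function name and the 10-million-row figure are hypothetical.

```python
import math


def estimated_pause_seconds(rows_to_purge: int, batch_size: int = 1000,
                            delay_seconds: float = 5.0) -> float:
    """Seconds spent in WAITFOR DELAY alone for a batched purge.

    Assumes the loop shape of the T-SQL listing: one pass per full or
    partial batch, plus one final empty pass that still waits once.
    """
    if rows_to_purge < 0 or batch_size <= 0:
        raise ValueError("rows_to_purge must be >= 0 and batch_size > 0")
    passes = math.ceil(rows_to_purge / batch_size) + 1
    return passes * delay_seconds


# Hypothetical example: purging 10 million rows with the script's defaults.
hours = estimated_pause_seconds(10_000_000) / 3600
print(f"{hours:.1f} hours of pauses alone")  # prints "13.9 hours of pauses alone"
```

A larger @batchsize shortens the total run, but each delete then holds its locks longer; the small batch plus fixed pause trades total duration for a lighter load at any given moment.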

First we set the isolation level. This is a key step. READ UNCOMMITTED tells SQL Server that reads in this session do not need shared locks: the query may read dirty (uncommitted) data, and here that does not matter. So SQL Server has no reason to lock records just to guarantee that the query sees properly committed data. (The DELETE statements themselves still take the exclusive locks they need on the rows they remove.) Unless your application cannot tolerate dirty reads, as a bank's cannot, you should put this in all your stored procs; for read queries, you will sometimes see 10 times better throughput from your database.

Next we read the row IDs from the source table and store them in a table variable. This is the only time we run a SELECT against the source table, so SQL Server does not have to scan the large table again and again.

Then we keep picking 1000 row IDs at a time and deleting those rows from the table. After each batch, we give SQL Server 5 seconds to rest and flush the logs.
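The same pattern can be sketched outside SQL Server. The Python example below uses the standard-library sqlite3 module in place of SQL Server, so treat it as an illustration of the technique rather than a port of the script: it snapshots the IDs to purge once (the role of @temp_rows_to_delete), deletes them in batches of batch_size, commits each batch as its own short transaction, and pauses between batches. The isolation-level step has no direct equivalent here and is left out. The table very_large_table, its id column and the function purge_in_batches are hypothetical names.

```python
import sqlite3
import time


def purge_in_batches(conn, cutoff_id, batch_size=1000, pause_seconds=5.0):
    """Delete rows with id < cutoff_id from very_large_table in small batches.

    Hypothetical schema: very_large_table(id INTEGER PRIMARY KEY, ...).
    Returns the total number of rows deleted.
    """
    # Snapshot the IDs to purge once, like filling @temp_rows_to_delete.
    ids = [row[0] for row in conn.execute(
        "SELECT id FROM very_large_table WHERE id < ? ORDER BY id",
        (cutoff_id,))]

    total = 0
    for start in range(0, len(ids), batch_size):
        batch = [(row_id,) for row_id in ids[start:start + batch_size]]
        # Delete one batch; executemany sums rowcount over all parameter sets.
        cur = conn.executemany(
            "DELETE FROM very_large_table WHERE id = ?", batch)
        total += cur.rowcount
        # Commit per batch so each transaction, and its write lock, stays short.
        conn.commit()
        # Pause so other work can get at the database between batches.
        if pause_seconds > 0:
            time.sleep(pause_seconds)
    return total


# Usage: 10,000 rows, purge IDs below 4000 (pause disabled for the demo).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE very_large_table (id INTEGER PRIMARY KEY, payload TEXT)")
conn.executemany("INSERT INTO very_large_table (id, payload) VALUES (?, ?)",
                 [(i, "x") for i in range(1, 10_001)])
conn.commit()
print(purge_in_batches(conn, cutoff_id=4000, pause_seconds=0))  # prints 3999
```

Deleting by individual ID keeps every statement small and avoids SQLite's limit on bound parameters that a large `IN (...)` list could hit.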

10

Tuning database indexes

Over time, data in tables gets fragmented as rows are inserted, updated and deleted. If you leave the tables alone, the indexes get slower and slower, and so do the queries that use them. If you have millions of records in a table and fragmentation becomes significant, queries will eventually get so slow that they start timing out.

To keep the tables fast and lean, you need to rebuild the indexes regularly. Here's a SQL snippet that runs through all the indexes in the database and checks whether they are heavily fragmented. If they are, it issues an index rebuild on them.

Ref: http://www.mssqltips.com/sqlservertip/1165/managing-sqlserver-database-fragmentation/
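The selection logic behind such a rebuild job can also be sketched in Python. This is not the snippet referenced above; it assumes you have already fetched, per index, the avg_fragmentation_in_percent and page_count columns that SQL Server's sys.dm_db_index_physical_stats function returns, and it only generates ALTER INDEX ... REBUILD statements for indexes over a threshold. The 30% threshold and the 1000-page minimum are assumed rules of thumb, not values from the article; IndexStats, quote_name, rebuild_statements and the sample rows are hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass
class IndexStats:
    """One row of fragmentation data (hypothetical container)."""
    schema: str
    table: str
    index: str
    avg_fragmentation_in_percent: float
    page_count: int


def quote_name(name: str) -> str:
    """Bracket-quote an identifier, doubling any ']' as QUOTENAME does."""
    return "[" + name.replace("]", "]]") + "]"


def rebuild_statements(stats: Iterable[IndexStats],
                       threshold_percent: float = 30.0,
                       min_page_count: int = 1000) -> List[str]:
    """Return ALTER INDEX ... REBUILD statements for heavily fragmented indexes."""
    statements = []
    for s in stats:
        # Assumed rule of thumb: small indexes gain little from a rebuild.
        if s.page_count < min_page_count:
            continue
        if s.avg_fragmentation_in_percent > threshold_percent:
            statements.append(
                f"ALTER INDEX {quote_name(s.index)} ON "
                f"{quote_name(s.schema)}.{quote_name(s.table)} REBUILD;")
    return statements


# Hypothetical sample rows, as if read from sys.dm_db_index_physical_stats.
sample = [
    IndexStats("dbo", "VeryLargeTable", "PK_VeryLargeTable", 42.5, 120_000),
    IndexStats("dbo", "VeryLargeTable", "IX_CreatedOn", 12.0, 80_000),
    IndexStats("dbo", "Lookup", "PK_Lookup", 75.0, 8),
]
for stmt in rebuild_statements(sample):
    print(stmt)  # ALTER INDEX [PK_VeryLargeTable] ON [dbo].[VeryLargeTable] REBUILD;
```

An offline rebuild can block other queries on the table while it runs, so the generated statements are best scheduled for a quiet period.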

10 | DNCmagazine www.dotnetcurry.com