
OCFS2<br />

Tools<br />

Figure 2: Unspectacular: the OCFS2 mount process.<br />

Figure 3: Automatic reboot after 20 seconds on OCFS2 cluster errors.<br />

can modify the defaults to suit your<br />

needs. The easiest approach here is<br />

via the /etc/init.d/o2cb configure<br />

script, which prompts you for the<br />

required values – for example, when<br />

the OCFS2 cluster should regard a<br />

node or network connection as down.<br />

At the same time, you can specify<br />

when the cluster stack should try to<br />

reconnect and when it should send a<br />

keep-alive packet.<br />

Apart from the heartbeat timeout,<br />

all of these values are given in milliseconds.<br />

However, for the heartbeat<br />

timeout, you need a little bit of math<br />

to determine when the cluster should<br />

consider that a computer is down.<br />

The value is the number of two-second<br />

heartbeat iterations plus one, so the<br />

timeout is (value - 1) x 2 seconds. The<br />

default value of 31 is thus equivalent<br />

to 60 seconds. On<br />

larger networks, you might need to<br />

increase all these values to avoid false<br />

alarms.<br />
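The heartbeat arithmetic can be sketched in a few lines of shell. This is only an illustration of the rule described in the text; the function names are hypothetical and not part of the o2cb tools.

```shell
#!/bin/sh
# Hypothetical helpers illustrating the heartbeat math from the text.

# Threshold -> seconds until a node is considered down.
# Each iteration lasts two seconds; one is subtracted for the heartbeat.
heartbeat_timeout_seconds() {
    threshold=$1
    echo $(( (threshold - 1) * 2 ))
}

# Desired timeout in seconds -> threshold to enter,
# rounded up to the next whole two-second iteration.
heartbeat_threshold_for() {
    seconds=$1
    echo $(( (seconds + 1) / 2 + 1 ))
}

heartbeat_timeout_seconds 31    # prints 60 (the default)
heartbeat_threshold_for 120     # prints 61
```

For a larger network where nodes should only be declared down after two minutes, the second call suggests entering 61 when the configure script asks for the heartbeat value.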

If OCFS2 stumbles across a critical<br />

error, it switches the filesystem<br />

to read-only mode and generates a<br />

kernel oops or even a kernel panic.<br />

In production use, you will probably<br />

want to remedy this state without<br />

in-depth error analysis (i.e., reboot<br />

the cluster node). For this to happen,<br />

you need to modify the underlying<br />

operating system so that it automatically<br />

reboots in case of a kernel oops<br />

or panic (Figure 3). Your best bet for<br />

this on Linux is the /proc filesystem<br />

for temporary changes, or sysctl if<br />

you want the change to survive a<br />

reboot.<br />
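As a rough sketch of the two approaches, the following shell fragment prints the sysctl settings for an automatic reboot and shows the equivalent /proc writes as comments. The kernel.panic and kernel.panic_on_oops keys are standard Linux sysctls; the helper function name is hypothetical, and the 20-second delay is simply the value shown in Figure 3.

```shell
#!/bin/sh
# Hypothetical helper: print sysctl settings that turn a kernel oops
# into a panic and reboot the node a given number of seconds later.
panic_reboot_settings() {
    delay=${1:-20}    # seconds to wait before rebooting (20 as in Figure 3)
    printf 'kernel.panic_on_oops = 1\n'
    printf 'kernel.panic = %s\n' "$delay"
}

panic_reboot_settings 20

# Temporary change (lost at the next reboot), run as root:
#   echo 1  > /proc/sys/kernel/panic_on_oops
#   echo 20 > /proc/sys/kernel/panic
#
# Permanent change: add the two printed lines to /etc/sysctl.conf
# and load them with "sysctl -p".
```

The function only prints the settings, so it can be tried safely on any machine before anything is written to /proc or /etc/sysctl.conf.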

Just like any other filesystem, OCFS2<br />

has a couple of internal limits you<br />

need to take into account when designing<br />

your storage. The number<br />

of subdirectories in a directory is<br />

restricted to 32,000. OCFS2 stores<br />

data in clusters of between 4 and<br />

1,024KB. Because the number of<br />

cluster addresses is restricted to 2^32,<br />

the maximum file size is 4PB. This<br />

limit is more or less irrelevant because<br />

another restriction limits the maximum<br />

OCFS2 filesystem size to 16TB: JBD<br />

journaling can address a maximum of 2^32<br />

blocks of 4KB.<br />
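Both limits follow from simple multiplication, which can be checked with shell arithmetic. This is a sketch that assumes a shell with 64-bit integer arithmetic; the function names are hypothetical.

```shell
#!/bin/sh
# Number of addressable clusters (file size) or blocks (JBD): 2^32.
addresses=$(( 1 << 32 ))

# Largest file: 2^32 clusters of 1,024KB each, converted from KB to PB.
max_file_pb() {
    echo $(( addresses * 1024 / 1024 / 1024 / 1024 / 1024 ))
}

# Largest filesystem with JBD: 2^32 blocks of 4KB, converted from KB to TB.
max_fs_tb() {
    echo $(( addresses * 4 / 1024 / 1024 / 1024 ))
}

echo "maximum file size: $(max_file_pb)PB"          # 4PB
echo "maximum filesystem size: $(max_fs_tb)TB"      # 16TB
```

The 4PB figure is only reached with the largest cluster size; with 4KB clusters the same 2^32 addresses would cap a single file at 16TB as well.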

An active OCFS2 cluster uses a<br />

handful of processes to handle<br />

its work (Listing 5). DLM-related<br />

tasks are handled by dlm_thread,<br />

dlm_reco_thread, and dlm_wq. The<br />

ocfs2dc, ocfs2cmt, ocfs2_wq, and<br />

ocfs2rec processes are responsible<br />

for access to the filesystem. o2net<br />

and o2hb-XXXXXXXXXX handle cluster<br />

communications and the heartbeat.<br />

All of these processes are started and<br />

stopped by init scripts for the cluster<br />

framework and OCFS2.<br />

OCFS2 stores its management files<br />

in the filesystem’s system directory,<br />

which is invisible to normal commands<br />

such as ls. The debugfs.ocfs2<br />

command lets you make the system<br />

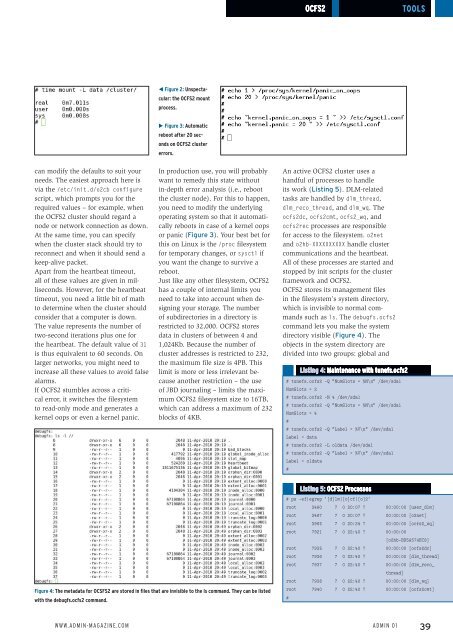

directory visible (Figure 4). The<br />

objects in the system directory are<br />

divided into two groups: global and<br />

Listing 4: Maintenance with tunefs.ocfs2<br />

# tunefs.ocfs2 -Q "NumSlots = %N\n" /dev/sda1<br />

NumSlots = 2<br />

# tunefs.ocfs2 -N 4 /dev/sda1<br />

# tunefs.ocfs2 -Q "NumSlots = %N\n" /dev/sda1<br />

NumSlots = 4<br />

#<br />

# tunefs.ocfs2 -Q "Label = %V\n" /dev/sda1<br />

Label = data<br />

# tunefs.ocfs2 -L oldata /dev/sda1<br />

# tunefs.ocfs2 -Q "Label = %V\n" /dev/sda1<br />

Label = oldata<br />

#<br />

Figure 4: The metadata for OCFS2 are stored in files that are invisible to the ls command. They can be listed with the debugfs.ocfs2 command.<br />

Listing 5: OCFS2 Processes<br />

# ps -ef|egrep '[d]lm|[o]cf|[o]2'<br />

root 3460 7 0 20:07 ? 00:00:00 [user_dlm]<br />

root 3467 7 0 20:07 ? 00:00:00 [o2net]<br />

root 3965 7 0 20:24 ? 00:00:00 [ocfs2_wq]<br />

root 7921 7 0 22:40 ? 00:00:00 [o2hb-BD5A574EC8]<br />

root 7935 7 0 22:40 ? 00:00:00 [ocfs2dc]<br />

root 7936 7 0 22:40 ? 00:00:00 [dlm_thread]<br />

root 7937 7 0 22:40 ? 00:00:00 [dlm_reco_thread]<br />

root 7938 7 0 22:40 ? 00:00:00 [dlm_wq]<br />

root 7940 7 0 22:40 ? 00:00:00 [ocfs2cmt]<br />

#<br />

www.admin-magazine.com<br />

Admin 01<br />
