Human-Computer Interaction and Presence in Virtual Reality

Human-Computer Interaction and Presence in Virtual Reality

Human-Computer Interaction and Presence in Virtual Reality

Create successful ePaper yourself

Turn your PDF publications into a flip-book with our unique Google optimized e-Paper software.

Chapter 3<br />

<strong>in</strong>teractions <strong>and</strong> whether these meet the requirements of the cognitive processes of the user.<br />

At this detail level we cannot use the same model for the exist<strong>in</strong>g <strong>and</strong> the future situation,<br />

s<strong>in</strong>ce these will have different implementation specific details. We will need two models:<br />

TM1 <strong>and</strong> TM2.<br />

Multi-modality<br />

<strong>Interaction</strong> <strong>in</strong> these applications uses multiple modalities. Users communicate with each<br />

other through use of the computer, <strong>and</strong> <strong>in</strong> this case also directly by talk<strong>in</strong>g. The computer<br />

responds to different types of <strong>in</strong>puts, such as the keyboard, joystick, but also headtrack<strong>in</strong>g,<br />

<strong>and</strong> produces output <strong>in</strong> different types of modalities such as vision, sound or even haptics.<br />

These modalities cannot be viewed separately; they are co-dependent <strong>and</strong> <strong>in</strong>fluence one<br />

another. We therefore need a method that can model these modalities. Aga<strong>in</strong>, the Language /<br />

Action Perspective looks promis<strong>in</strong>g s<strong>in</strong>ce it considers communication <strong>in</strong> different modalities<br />

to be equal. However, different modalities might afford different possibilities for<br />

communication <strong>and</strong> we should therefore look at which modality is used for which type of<br />

communication, possibly similar to the CUD.<br />

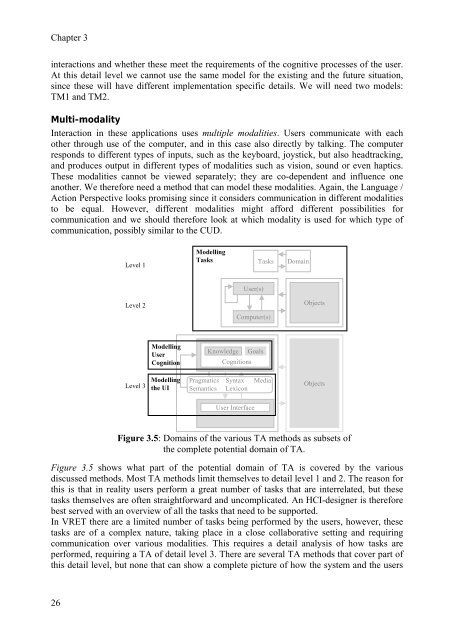

Level 1<br />

Level 2<br />

Level 3<br />

Modell<strong>in</strong>g<br />

User<br />

Cognition<br />

Modell<strong>in</strong>g<br />

the UI<br />

Modell<strong>in</strong>g<br />

Tasks<br />

Pragmatics<br />

Semantics<br />

Knowledge<br />

User(s)<br />

<strong>Computer</strong>(s)<br />

Cognitions<br />

Syntax<br />

Lexicon<br />

User Interface<br />

Tasks Doma<strong>in</strong><br />

Objects<br />

Figure 3.5 shows what part of the potential doma<strong>in</strong> of TA is covered by the various<br />

discussed methods. Most TA methods limit themselves to detail level 1 <strong>and</strong> 2. The reason for<br />

this is that <strong>in</strong> reality users perform a great number of tasks that are <strong>in</strong>terrelated, but these<br />

tasks themselves are often straightforward <strong>and</strong> uncomplicated. An HCI-designer is therefore<br />

Goals<br />

Media<br />

Objects<br />

Figure 3.5: Doma<strong>in</strong>s of the various TA methods as subsets of<br />

the complete potential doma<strong>in</strong> of TA.<br />

best served with an overview of all the tasks that need to be supported.<br />

In VRET there are a limited number of tasks be<strong>in</strong>g performed by the users, however, these<br />

tasks are of a complex nature, tak<strong>in</strong>g place <strong>in</strong> a close collaborative sett<strong>in</strong>g <strong>and</strong> requir<strong>in</strong>g<br />

communication over various modalities. This requires a detail analysis of how tasks are<br />

performed, requir<strong>in</strong>g a TA of detail level 3. There are several TA methods that cover part of<br />

this detail level, but none that can show a complete picture of how the system <strong>and</strong> the users<br />

26