Performance Analysis and Optimization of the Hurricane File System ...

Performance Analysis and Optimization of the Hurricane File System ...

Performance Analysis and Optimization of the Hurricane File System ...

You also want an ePaper? Increase the reach of your titles

YUMPU automatically turns print PDFs into web optimized ePapers that Google loves.

CHAPTER 5. MICROBENCHMARKS – READ OPERATION 46<br />

0<br />

1<br />

2<br />

3<br />

4<br />

5<br />

6<br />

7<br />

free list<br />

dirty lists<br />

¦¦<br />

¦¦ ¦¦ ¦¦ ¦¦<br />

¦¦ ¦¦ ¦¦ ¦¦ ¦¦<br />

¦¦<br />

¦¦ ¦¦<br />

¦¦ ¦¦<br />

¦¦ ¦¦<br />

¦¦ ¦¦<br />

waiting list<br />

free list<br />

hash lists<br />

0 1 2 3<br />

¦¦<br />

¦¦ ¦¦ ¦¦ ¦¦<br />

¦¦ ¦¦ ¦¦ ¦¦ ¦¦<br />

¦¦<br />

¦¦ ¦¦<br />

¦¦ ¦¦<br />

¦¦ ¦¦<br />

Waiting I/O<br />

¦¦¦<br />

¦¦¦ ¦¦¦ ¦¦¦ ¦¦¦<br />

¦¦¦ ¦¦¦ ¦¦¦ ¦¦¦ ¦¦¦<br />

¦¦¦<br />

¦¦¦ ¦¦¦<br />

¦¦¦ ¦¦¦<br />

¦¦¦ ¦¦¦<br />

block<br />

cache<br />

entry<br />

¦¦¦ ¦¦¦ ¦¦¦ ¦¦¦<br />

¦¦¦ ¦¦¦ ¦¦¦ ¦¦¦ ¦¦¦<br />

¦¦¦<br />

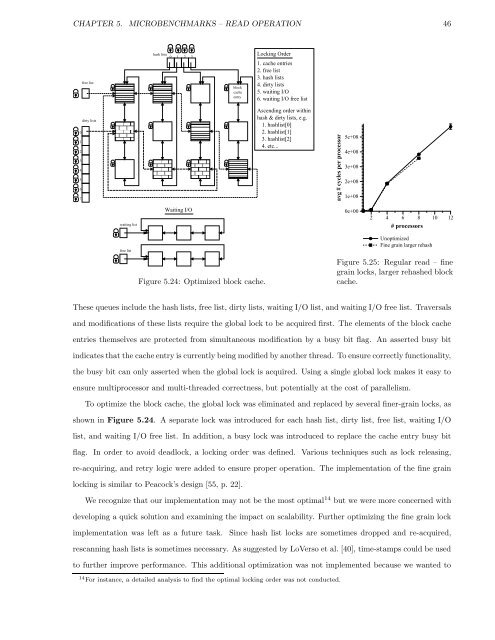

Figure 5.24: Optimized block cache.<br />

Locking Order<br />

1. cache entries<br />

2. free list<br />

3. hash lists<br />

4. dirty lists<br />

5. waiting I/O<br />

6. waiting I/O free list<br />

Ascending order within<br />

hash & dirty lists, e.g.<br />

1. hashlist[0]<br />

2. hashlist[1]<br />

3. hashlist[2]<br />

4. etc...<br />

avg # cycles per processor<br />

5e+08<br />

4e+08<br />

3e+08<br />

2e+08<br />

1e+08<br />

0e+00<br />

2 4 6 8 10 12<br />

# processors<br />

Unoptimized<br />

Fine grain larger rehash<br />

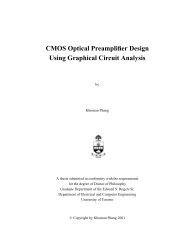

Figure 5.25: Regular read – fine<br />

grain locks, larger rehashed block<br />

cache.<br />

These queues include <strong>the</strong> hash lists, free list, dirty lists, waiting I/O list, <strong>and</strong> waiting I/O free list. Traversals<br />

<strong>and</strong> modifications <strong>of</strong> <strong>the</strong>se lists require <strong>the</strong> global lock to be acquired first. The elements <strong>of</strong> <strong>the</strong> block cache<br />

entries <strong>the</strong>mselves are protected from simultaneous modification by a busy bit flag. An asserted busy bit<br />

indicates that <strong>the</strong> cache entry is currently being modified by ano<strong>the</strong>r thread. To ensure correctly functionality,<br />

<strong>the</strong> busy bit can only asserted when <strong>the</strong> global lock is acquired. Using a single global lock makes it easy to<br />

ensure multiprocessor <strong>and</strong> multi-threaded correctness, but potentially at <strong>the</strong> cost <strong>of</strong> parallelism.<br />

To optimize <strong>the</strong> block cache, <strong>the</strong> global lock was eliminated <strong>and</strong> replaced by several finer-grain locks, as<br />

shown in Figure 5.24. A separate lock was introduced for each hash list, dirty list, free list, waiting I/O<br />

list, <strong>and</strong> waiting I/O free list. In addition, a busy lock was introduced to replace <strong>the</strong> cache entry busy bit<br />

flag. In order to avoid deadlock, a locking order was defined. Various techniques such as lock releasing,<br />

re-acquiring, <strong>and</strong> retry logic were added to ensure proper operation. The implementation <strong>of</strong> <strong>the</strong> fine grain<br />

locking is similar to Peacock’s design [55, p. 22].<br />

We recognize that our implementation may not be <strong>the</strong> most optimal 14 but we were more concerned with<br />

developing a quick solution <strong>and</strong> examining <strong>the</strong> impact on scalability. Fur<strong>the</strong>r optimizing <strong>the</strong> fine grain lock<br />

implementation was left as a future task. Since hash list locks are sometimes dropped <strong>and</strong> re-acquired,<br />

rescanning hash lists is sometimes necessary. As suggested by LoVerso et al. [40], time-stamps could be used<br />

to fur<strong>the</strong>r improve performance. This additional optimization was not implemented because we wanted to<br />

14 For instance, a detailed analysis to find <strong>the</strong> optimal locking order was not conducted.