improving music mood classification using lyrics, audio and social tags


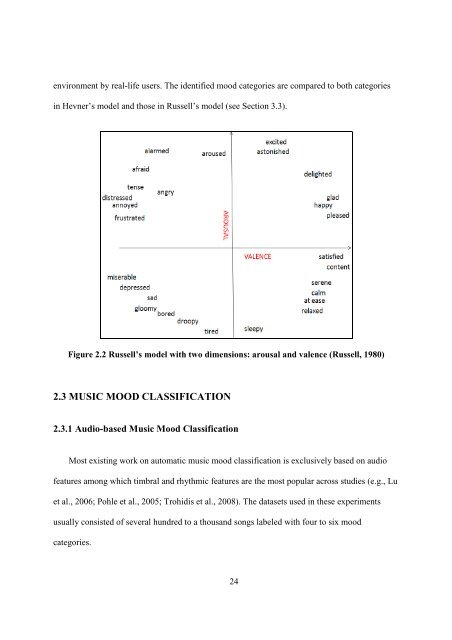

environment by real-life users. The identified mood categories are compared to both categories in Hevner’s model and those in Russell’s model (see Section 3.3).

Figure 2.2 Russell’s model with two dimensions: arousal and valence (Russell, 1980)

2.3 MUSIC MOOD CLASSIFICATION

2.3.1 Audio-based Music Mood Classification

Most existing work on automatic music mood classification is based exclusively on audio features, among which timbral and rhythmic features are the most popular across studies (e.g., Lu et al., 2006; Pohle et al., 2005; Trohidis et al., 2008). The datasets used in these experiments usually consisted of several hundred to a thousand songs labeled with four to six mood categories.
