anytime algorithms for learning anytime classifiers saher ... - Technion


Technion - Computer Science Department - Ph.D. Thesis PHD-2008-12 - 2008

[Plot axes: misclassification cost (y-axis, 0 to 50) versus sample size r (x-axis, 0 to 7); curves for T=1, T=3, and T=5.]

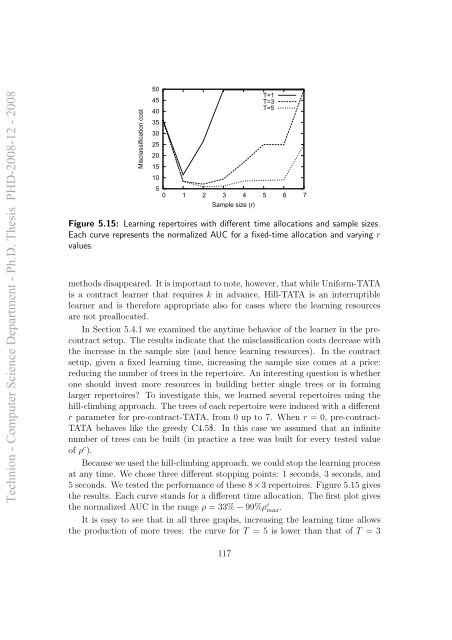

Figure 5.15: Learning repertoires with different time allocations and sample sizes. Each curve represents the normalized AUC for a fixed-time allocation and varying r values.

methods disappeared. It is important to note, however, that while Uniform-TATA is a contract learner that requires k in advance, Hill-TATA is an interruptible learner and is therefore also appropriate for cases where the learning resources are not preallocated.

In Section 5.4.1 we examined the anytime behavior of the learner in the pre-contract setup. The results indicate that the misclassification costs decrease as the sample size (and hence the learning resources) increases. In the contract setup, given a fixed learning time, increasing the sample size comes at a price: reducing the number of trees in the repertoire. An interesting question is whether one should invest more resources in building better single trees or in forming larger repertoires. To investigate this, we learned several repertoires using the hill-climbing approach. The trees of each repertoire were induced with a different r parameter for pre-contract-TATA, from 0 up to 7. When r = 0, pre-contract-TATA behaves like the greedy C4.5$. In this case we assumed that an infinite number of trees can be built (in practice a tree was built for every tested value of ρ^c).
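The trade-off between tree quality and repertoire size can be illustrated with a small sketch. The cost model below is purely hypothetical and is not taken from the thesis: it assumes that the time to induce one tree doubles with each increment of r, and the names `trees_within_budget`, `base_cost_ms`, and `growth` are illustrative only.

```python
def trees_within_budget(budget_ms, r, base_cost_ms=50, growth=2):
    """Count how many trees fit into a fixed learning budget.

    Hypothetical cost model (an assumption, not the thesis's measurements):
    inducing one tree with sample size r takes base_cost_ms * growth**r
    milliseconds.
    """
    per_tree_cost_ms = base_cost_ms * growth ** r
    return budget_ms // per_tree_cost_ms


# Under a fixed 3-second budget, a larger r leaves room for fewer trees.
for r in range(8):
    print(f"r={r}: {trees_within_budget(3000, r)} trees")
```

Under any cost model in which per-tree time grows with r, the repertoire size under a fixed contract cannot grow as r increases, which is the price referred to above.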

Because we used the hill-climbing approach, we could stop the learning process at any time. We chose three different stopping points: 1 second, 3 seconds, and 5 seconds. We tested the performance of these 8×3 repertoires. Figure 5.15 gives the results. Each curve stands for a different time allocation. The first plot gives the normalized AUC in the range ρ = 33% to 99% of ρ^c_max.
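As a minimal sketch of the experimental grid described here, the 8×3 configurations can be enumerated as pairs of sample size and stopping time. The variable names are illustrative assumptions; only the values of r and the three stopping points come from the text.

```python
from itertools import product

SAMPLE_SIZES = range(8)       # r = 0, 1, ..., 7
STOP_TIMES_SEC = (1, 3, 5)    # the three stopping points, in seconds

# One repertoire is evaluated per (r, stopping time) pair.
configurations = list(product(SAMPLE_SIZES, STOP_TIMES_SEC))

print(len(configurations))
```

Each curve in the figure then corresponds to one stopping time, with the eight r values along the horizontal axis.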

It is easy to see that in all three graphs, increasing the learning time allows the production of more trees: the curve for T = 5 is lower than that of T = 3
