anytime algorithms for learning anytime classifiers saher ... - Technion



Technion - Computer Science Department - Ph.D. Thesis PHD-2008-12 - 2008

Average Size<br />

4000<br />

3500<br />

3000<br />

2500<br />

2000<br />

1500<br />

1000<br />

0 100 200 300 400 500 600<br />

Time [sec]<br />

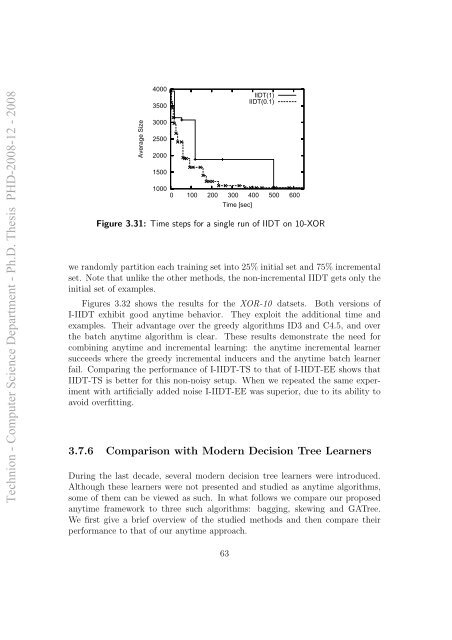

IIDT(1)<br />

IIDT(0.1)<br />

Figure 3.31: Time steps <strong>for</strong> a single run of IIDT on 10-XOR<br />

we randomly partition each training set into a 25% initial set and a 75% incremental set. Note that, unlike the other methods, the non-incremental IIDT receives only the initial set of examples.
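The random 25%/75% partition described above can be sketched as follows. This is only an illustrative sketch, not the code used in the experiments: the function name `split_initial_incremental`, the `seed` parameter, and the rounding of the cut point are all assumptions introduced here.

```python
import random


def split_initial_incremental(examples, initial_fraction=0.25, seed=None):
    """Randomly partition examples into an initial set and an incremental set.

    Hypothetical helper: the name, the seed argument, and the rounding rule
    are assumptions made for illustration only.
    """
    rng = random.Random(seed)          # local RNG so the split is reproducible
    shuffled = list(examples)          # copy, so the caller's data is untouched
    rng.shuffle(shuffled)
    cut = round(len(shuffled) * initial_fraction)
    initial = shuffled[:cut]           # available to the learner from the start
    incremental = shuffled[cut:]       # arrives later, e.g. one example at a time
    return initial, incremental


if __name__ == "__main__":
    initial, incremental = split_initial_incremental(range(100), seed=0)
    print(len(initial), len(incremental))
```

Under this sketch, a non-incremental learner would be trained on `initial` only, while an incremental learner would additionally consume `incremental` as it arrives.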

Figure 3.32 shows the results for the XOR-10 datasets. Both versions of I-IIDT exhibit good anytime behavior: they exploit the additional time and examples. Their advantage over the greedy algorithms ID3 and C4.5, and over the batch anytime algorithm, is clear. These results demonstrate the need for combining anytime and incremental learning: the anytime incremental learner succeeds where the greedy incremental inducers and the anytime batch learner fail. Comparing the performance of I-IIDT-TS to that of I-IIDT-EE shows that I-IIDT-TS is better for this noise-free setup. When we repeated the same experiment with artificially added noise, I-IIDT-EE was superior, due to its ability to avoid overfitting.

3.7.6 Comparison with Modern Decision Tree Learners

During the last decade, several modern decision tree learners were introduced. Although these learners were not presented and studied as anytime algorithms, some of them can be viewed as such. In what follows we compare our proposed anytime framework to three such algorithms: bagging, skewing and GATree. We first give a brief overview of the studied methods and then compare their performance to that of our anytime approach.
