Diffusion Processes with Hidden States from ... - FU Berlin, FB MI

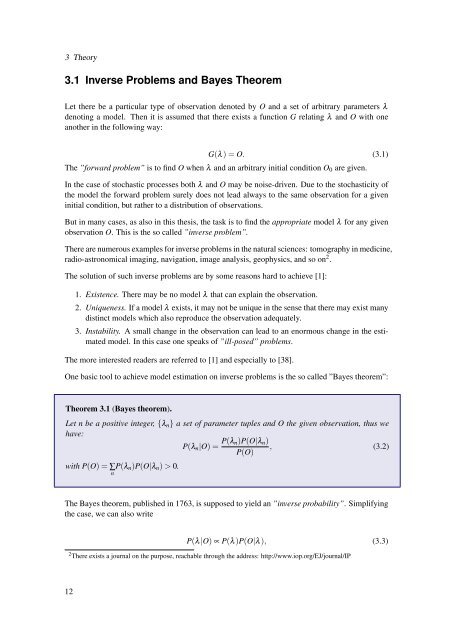

3 Theory

3.1 Inverse Problems and Bayes Theorem

Let there be a particular type of observation denoted by $O$ and a set of arbitrary parameters $\lambda$ denoting a model. It is then assumed that there exists a function $G$ relating $\lambda$ and $O$ to one another in the following way:

$$G(\lambda) = O. \tag{3.1}$$

The "forward problem" is to find $O$ when $\lambda$ and an arbitrary initial condition $O_0$ are given. In the case of stochastic processes both $\lambda$ and $O$ may be noise-driven. Due to the stochasticity of the model, the forward problem does not always lead to the same observation for a given initial condition, but rather to a distribution of observations.

In many cases, however, as in this thesis, the task is to find the appropriate model $\lambda$ for a given observation $O$. This is the so-called "inverse problem". There are numerous examples of inverse problems in the natural sciences: tomography in medicine, radio-astronomical imaging, navigation, image analysis, geophysics, and so on.²

The solution of such inverse problems is hard to achieve for several reasons [1]:

1. Existence. There may be no model $\lambda$ that can explain the observation.
2. Uniqueness. If a model $\lambda$ exists, it may not be unique, in the sense that there may exist many distinct models which also reproduce the observation adequately.
3. Instability. A small change in the observation can lead to an enormous change in the estimated model. In this case one speaks of "ill-posed" problems.

Interested readers are referred to [1] and especially to [38].

One basic tool for model estimation in inverse problems is the so-called "Bayes theorem":

Theorem 3.1 (Bayes theorem).
Let $n$ be a positive integer, $\{\lambda_n\}$ a set of parameter tuples and $O$ the given observation. Then

$$P(\lambda_n \mid O) = \frac{P(\lambda_n)\,P(O \mid \lambda_n)}{P(O)}, \tag{3.2}$$

with $P(O) = \sum_n P(\lambda_n)\,P(O \mid \lambda_n) > 0$.

The Bayes theorem, published in 1763, is supposed to yield an "inverse probability".
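To make equation (3.2) concrete, the following is a minimal Python sketch for a finite set of candidate models. The function name `posterior` and the numerical priors and likelihoods are hypothetical, chosen only for illustration; they do not come from the thesis.

```python
def posterior(priors, likelihoods):
    """Discrete Bayes theorem: P(lambda_n | O) for every candidate model n.

    priors[n]      = P(lambda_n)      (assumed prior of model n)
    likelihoods[n] = P(O | lambda_n)  (assumed likelihood of O under model n)
    """
    if len(priors) != len(likelihoods):
        raise ValueError("priors and likelihoods must have the same length")
    # Unnormalised posterior: P(lambda_n) * P(O | lambda_n)
    joint = [p * l for p, l in zip(priors, likelihoods)]
    # Evidence: P(O) = sum_n P(lambda_n) * P(O | lambda_n), required to be > 0
    evidence = sum(joint)
    if evidence <= 0:
        raise ValueError("P(O) must be positive")
    return [j / evidence for j in joint]


# Hypothetical example values (illustration only, not from the thesis)
priors = [0.5, 0.3, 0.2]
likelihoods = [0.1, 0.4, 0.7]
post = posterior(priors, likelihoods)
```

In this made-up example the third model has the lowest prior but the highest posterior, because it explains the observation best. Dividing by $P(O)$ only rescales the values so that they sum to one; it does not change which model ranks highest.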
Simplifying the case, we can also write

$$P(\lambda \mid O) \propto P(\lambda)\,P(O \mid \lambda), \tag{3.3}$$

² There exists a journal devoted to the subject, reachable at: http://www.iop.org/EJ/journal/IP