- Page 2 and 3:

Angelika C. Bullinger Innovation an

- Page 4 and 5:

Angelika C. Bullinger Innovation an

- Page 6 and 7:

in memoriam Paula Bullinger 1912 -

- Page 8 and 9:

Acknowledgements IX Acknowledgement

- Page 10 and 11:

Contents XIII Contents Preface ....

- Page 12 and 13:

Contents XV 2.2 E-Business (B2B)

- Page 14 and 15:

Contents XVII 2.2 Design Science

- Page 16 and 17:

Abbreviations XIX Abbreviations AI

- Page 18 and 19:

Figures XXI Figures figure 1

- Page 20 and 21:

Figures XXIII figure 59 Two-dime

- Page 22 and 23:

Figures XXV figure 120 Formal re

- Page 24 and 25:

XXVIII Tables table 27 Guideline

- Page 26 and 27:

XXX Tables table 87 Relations of

- Page 28 and 29:

2 Point of Departure During past ye

- Page 30 and 31:

4 Research Objectives and Structure

- Page 32 and 33:

6 Outline of Thesis Critical assess

- Page 34 and 35:

8 Part I Roadmap for the Fuzzy Fron

- Page 36 and 37:

10 Innovation 1 Innovation The foll

- Page 38 and 39:

12 Innovation By evaluation of tech

- Page 40 and 41:

14 Innovation 1.2 Types of Innovati

- Page 42 and 43:

16 Innovation A product innovation

- Page 44 and 45:

18 Innovation Process innovations a

- Page 46 and 47:

20 Innovation Focus of this res

- Page 48 and 49:

22 Innovation figure 8 Some areas a

- Page 50 and 51:

24 Innovation 1.3.1 Holistic Innova

- Page 52 and 53:

26 Innovation figure 10 Elements of

- Page 54 and 55:

28 Innovation 1.3.2.1 R&D Managemen

- Page 56 and 57:

30 Innovation R&D management is par

- Page 58 and 59:

32 Innovation As the participating

- Page 60 and 61:

Roadmap for the Fuzzy Front End 33

- Page 62 and 63:

The Fuzzy Front End 35 2.1 The Fuzz

- Page 64 and 65:

The Fuzzy Front End 37 2.1.2 Manage

- Page 66 and 67:

The Fuzzy Front End 39 Kornwachs (1

- Page 68 and 69:

The Fuzzy Front End 41 Innovative i

- Page 70 and 71:

The Fuzzy Front End 43 Academia has

- Page 72 and 73:

The Fuzzy Front End 45 2.1.4.3 Char

- Page 74 and 75:

The Fuzzy Front End 47 2.1.4.4 Rule

- Page 76 and 77:

Ideation 49 Internal sources of ide

- Page 78 and 79:

Ideation 51 figure 19 Process o

- Page 80 and 81:

Ideation 53 figure 21 External infl

- Page 82 and 83:

Ideation 55 Such innovative diversi

- Page 84 and 85:

Ideation 57 Sources of Ideas Relate

- Page 86 and 87:

Ideation 59 By the use of this meth

- Page 88 and 89:

Ideation 61 Scientific Publications

- Page 90 and 91:

Ideation 63 Properties and values o

- Page 92 and 93:

Ideation 65 Integration of such inn

- Page 94 and 95:

Concept Gate 67 Otherwise, when pre

- Page 96 and 97:

Concept Gate 69 A majority of large

- Page 98 and 99:

Concept Gate 71 Furthermore, the co

- Page 100 and 101:

Concept Gate 73 A positive impact 9

- Page 102 and 103:

Concept Gate 75 Besides diverse fun

- Page 104 and 105:

Concept Gate 77 2.3.3 Methods for t

- Page 106 and 107:

Concept Gate 79 Sample Criteria for

- Page 108 and 109:

Concept Gate 81 The predefined weig

- Page 110 and 111:

Concept Gate 83 2.3.3.2 Holistic Ap

- Page 112 and 113:

Concept Gate 85 Besides the aspects

- Page 114 and 115:

Concept Gate 87 Drawbacks can arise

- Page 116 and 117:

Concept Development 89 During Conce

- Page 118 and 119:

Concept Development 91 There are th

- Page 120 and 121:

Concept Development 93 Following Ge

- Page 122 and 123:

Concept Development 95 2.4.1.2 Coop

- Page 124 and 125:

Concept Development 97 To focus on

- Page 126 and 127:

Concept Development 99 2.4.2.2 Morp

- Page 128 and 129:

Concept Development 101 The five ph

- Page 130 and 131:

Innovation Gate 103 2.4.3 Support A

- Page 132 and 133:

Innovation Gate 105 The gate meetin

- Page 134 and 135:

Innovation Gate 107 2.5.2 Methods f

- Page 136 and 137:

Innovation Gate 109 figure 33 House

- Page 138 and 139:

Innovation Gate 111 External Evalua

- Page 140 and 141:

Innovation Gate 113 The roadmap is

- Page 142 and 143:

Innovation Gate 115 Economic assess

- Page 144 and 145:

Innovation Gate 117 Elements Detail

- Page 146 and 147:

Innovation Gate 119 The easiest (an

- Page 148 and 149:

Innovation Gate 121 Benchmarking Be

- Page 150 and 151:

Innovation Gate 123 Additionally, t

- Page 152 and 153:

Innovation Gate 125 Transaction In

- Page 154 and 155:

Innovation Gate 127 On the other ha

- Page 156 and 157:

Summary of FFE Roadmap 129 3 Summar

- Page 158 and 159:

Summary of FFE Roadmap 131 Strategi

- Page 160 and 161:

Principles 163 4.1.1.1 Classes As l

- Page 162 and 163:

Principles 165 The (sub)class ‘sa

- Page 164 and 165:

Languages 167 4.2 Languages The sel

- Page 166 and 167:

Languages 169 figure 54 Ontology ma

- Page 168 and 169:

Languages 171 When specifying an on

- Page 170 and 171:

Ontology in Philosophy 135 The foll

- Page 172 and 173:

Ontology in Philosophy 137 The mean

- Page 174 and 175:

Ontologies in Information Science 1

- Page 176 and 177:

Ontologies in Information Science 1

- Page 178 and 179:

Deployment in Business 143 2 Deploy

- Page 180 and 181:

Enterprise Ontologies 145 The Enter

- Page 182 and 183:

Enterprise Ontologies 147 2.1.3 E-B

- Page 184 and 185:

E-Business (B2B) Ontologies 149 Ont

- Page 186 and 187:

E-Business (B2B) Ontologies 151 2.2

- Page 188 and 189:

Management Ontologies 153 RosettaNe

- Page 190 and 191:

Management Ontologies 155 The catal

- Page 192 and 193:

Management Ontologies 157 figure 51

- Page 194 and 195:

Note on Deployment in Other Areas 1

- Page 196 and 197:

Potential of Ontologies 161 As the

- Page 198 and 199:

198 Part III Design Science Ontolog

- Page 200 and 201:

Existing Classifications 173 As the

- Page 202 and 203:

Existing Classifications 175 To sum

- Page 204 and 205:

Existing Classifications 177 The as

- Page 206 and 207:

Existing Classifications 179 5.1.5

- Page 208 and 209:

Existing Classifications 181 5.1.7

- Page 210 and 211:

Subject matter 183 5.2.2 Task Ontol

- Page 212 and 213:

Subject matter 185 General ontologi

- Page 214 and 215:

Formality 187 On the contrary, an o

- Page 216 and 217:

Formality 189 5.3.2 Semi-Informal a

- Page 218 and 219:

Expressiveness 191 Consequently, tw

- Page 220 and 221:

Expressiveness 193 Wielinga et al.

- Page 222 and 223:

Expressiveness 195 The distinction

- Page 224 and 225:

254 Part IV Results: The OntoGate T

- Page 226 and 227:

Multimethodological Background 199

- Page 228 and 229:

Ontology Engineering 201 1.1.1 Syno

- Page 230 and 231:

Ontology Engineering 203 The method

- Page 232 and 233:

Ontology Engineering 205 The method

- Page 234 and 235:

Ontology Engineering 207 1.1.1.4.2

- Page 236 and 237:

Ontology Engineering 209 1.1.1.5 On

- Page 238 and 239:

Ontology Engineering 211 1.1.2 Stat

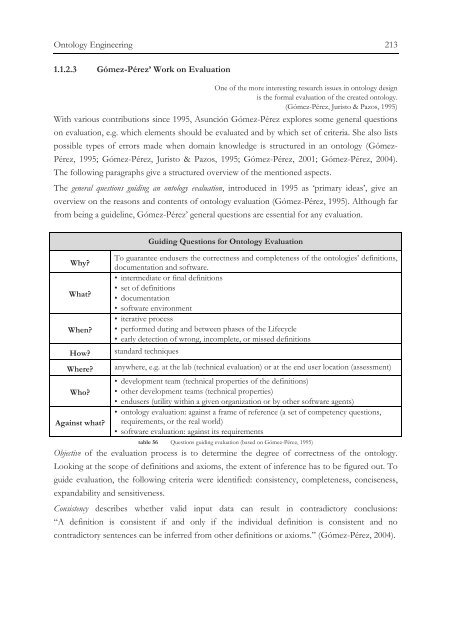

- Page 242 and 243:

Design Science 215 1.1.2.4 Evaluati

- Page 244 and 245:

Design Science 217 For this thesis,

- Page 246 and 247:

Design Science 219 1.2.2.3 An Infor

- Page 248 and 249:

Design Science 221 Justify refers t

- Page 250 and 251:

Design Science 223 The following fi

- Page 252 and 253:

Design Science 225 Foundation, clos

- Page 254 and 255:

Design Science 227 Based on the pre

- Page 256 and 257:

Empirical Approach 229 2 Empirical

- Page 258 and 259:

Research Methodology 231 For purpos

- Page 260 and 261:

Research Methodology 233 The lifecy

- Page 262 and 263:

Research Methodology 235 2.1.3 Fiel

- Page 264 and 265:

Design Science Ontology Lifecycle 2

- Page 266 and 267:

Design Science Ontology Lifecycle 2

- Page 268 and 269:

Design Science Ontology Lifecycle 2

- Page 270 and 271:

Design Science Ontology Lifecycle 2

- Page 272 and 273:

Design Science Ontology Lifecycle 2

- Page 274 and 275:

Design Science Ontology Lifecycle 2

- Page 276 and 277:

Design Science Ontology Lifecycle 2

- Page 278 and 279:

Design Science Ontology Lifecycle 2

- Page 280 and 281:

256 Results of Prepare 1 Results of

- Page 282 and 283:

258 Results of Prepare As motivatin

- Page 284 and 285:

260 Results of Develop/ Build 2.2 K

- Page 286 and 287:

262 Results of Develop/ Build 2.2.1

- Page 288 and 289:

264 Results of Develop/ Build The w

- Page 290 and 291:

266 Results of Develop/ Build 2.2.2

- Page 292 and 293:

268 Results of Develop/ Build manag

- Page 294 and 295:

270 Results of Develop/ Build Its t

- Page 296 and 297:

272 Results of Develop/ Build A dis

- Page 298 and 299:

274 Results of Develop/ Build 2.2.4

- Page 300 and 301:

276 Results of Develop/ Build 2.2.5

- Page 302 and 303:

278 Results of Develop/ Build Due t

- Page 304 and 305:

280 Results of Develop/ Build 2.3.1

- Page 306 and 307:

282 Results of Develop/ Build 2.3.1

- Page 308 and 309:

284 Results of Develop/ Build Outpu

- Page 310 and 311:

286 Results of Develop/ Build figur

- Page 312 and 313:

288 Results of Develop/ Build 2.3.1

- Page 314 and 315:

290 Results of Develop/ Build figur

- Page 316 and 317:

292 Results of Develop/ Build figur

- Page 318 and 319:

294 Results of Develop/ Build 2.3.1

- Page 320 and 321:

296 Results of Develop/ Build Core

- Page 322 and 323:

298 Results of Develop/ Build Visua

- Page 324 and 325:

300 Results of Develop/ Build Inter

- Page 326 and 327:

302 Results of Develop/ Build 2.3.2

- Page 328 and 329:

304 Results of Develop/ Build Relat

- Page 330 and 331:

306 Results of Develop/ Build Excer

- Page 332 and 333:

308 Results of Develop/ Build Slots

- Page 334 and 335:

310 Results of Develop/ Build Negat

- Page 336 and 337:

312 Results of Develop/ Build 2.3.2

- Page 338 and 339:

314 Results of Develop/ Build Insta

- Page 340 and 341:

316 Results of Develop/ Build 2.3.2

- Page 342 and 343:

318 Results of Develop/ Build 2.3.2

- Page 344 and 345:

320 Results of Develop/ Build Insta

- Page 346 and 347:

322 Results of Develop/ Build 2.3.2

- Page 348 and 349:

324 Results of Justify/ Evaluate 3

- Page 350 and 351:

326 Results of Justify/ Evaluate fi

- Page 352 and 353:

328 Results of Justify/ Evaluate fi

- Page 354 and 355:

330 Results of Justify/ Evaluate fi

- Page 356 and 357:

332 Results of Justify/ Evaluate fi

- Page 358 and 359:

Conclusion 335 Conclusion Concludin

- Page 360 and 361:

Résumé 337 2 Résumé The conclus

- Page 362 and 363:

Résumé 339 2.2 Empirical Findings

- Page 364 and 365:

Résumé 341 This discussion is bas

- Page 366 and 367:

Résumé 343 The twofold evaluation

- Page 368 and 369:

Outlook 345 Concerning the potentia

- Page 370 and 371:

Annex 347 Annex 1 Glossary of Infor

- Page 372 and 373:

Glossary of Informal Taxonomy 349 d

- Page 374 and 375:

Glossary of Informal Taxonomy 351 l

- Page 376 and 377:

Glossary of Informal Taxonomy 353 q

- Page 378 and 379:

References 355 2 References Abecker

- Page 380 and 381:

References 357 Avesani, P., Giunchi

- Page 382 and 383:

References 359 Erprobung innovative

- Page 384 and 385:

References 361 Brockhoff, K. (2006)

- Page 386 and 387:

References 363 Cooper, R. G. (1994)

- Page 388 and 389:

References 365 Dean, B. V. & Goldha

- Page 390 and 391:

References 367 Ernst, H. (2004). Vi

- Page 392 and 393:

References 369 Franke, N. & Braun,

- Page 394 and 395:

References 371 Gerhards, A. (2002).

- Page 396 and 397:

References 373 González, R. G. (in

- Page 398 and 399:

References 375 Hannah, D. R. (2004)

- Page 400 and 401:

References 377 Herstatt, C. & Sande

- Page 402 and 403:

References 379 Husserl, E. (1965).

- Page 404 and 405:

References 381 Kobe, C. (2006). Tec

- Page 406 and 407:

References 383 Lindgren, M. & Bandh

- Page 408 and 409:

References 385 McBride, B. (2004a).

- Page 410 and 411:

References 387 Noy, N. F. & Hafner,

- Page 412 and 413:

References 389 Porter, M. E. (2004)

- Page 414 and 415:

References 391 Ruiz, F. & et. al. (

- Page 416 and 417:

References 393 Seidl, I. (1993). Un

- Page 418 and 419:

References 395 Staudt, E. (Ed.) (19

- Page 420 and 421:

References 397 Turner, J. R. & Muel

- Page 422 and 423:

References 399 Vet, P. E. van der &

- Page 424 and 425:

References 401 Witte, E. (1978). Un

- Page 426 and 427:

Index 403 3 Index A action plan,

- Page 428 and 429:

Index 405 I Ideation, 5, 7, 8, 3

- Page 430:

Index 407 S scenario, 55, 60, 90