Tutorial CUDA

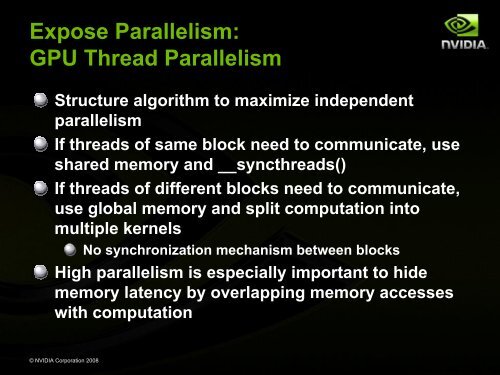

Expose Parallelism: GPU Thread Parallelism

Structure the algorithm to maximize independent parallelism.

If threads of the same block need to communicate, use shared memory and `__syncthreads()`.
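Communication within a block through shared memory can be sketched as a per-block sum. This is a minimal illustration, not code from the slides: the kernel name `blockSum`, the host helper `blockSums`, and the block size of 256 are all assumptions chosen for the example, and error checking is omitted for brevity.

```cuda
#include <cstdio>
#include <vector>
#include <cuda_runtime.h>

constexpr int BLOCK = 256;  // threads per block (assumed; power of two for the halving loop)

// Each block sums BLOCK consecutive inputs and writes one partial sum.
__global__ void blockSum(const float* in, float* partial, int n)
{
    __shared__ float tile[BLOCK];  // visible to all threads of this block only

    int gid = blockIdx.x * blockDim.x + threadIdx.x;
    tile[threadIdx.x] = (gid < n) ? in[gid] : 0.0f;
    __syncthreads();  // every thread's load is now visible to the whole block

    // Tree reduction: halve the number of active threads each step.
    for (int stride = blockDim.x / 2; stride > 0; stride /= 2) {
        if (threadIdx.x < stride)
            tile[threadIdx.x] += tile[threadIdx.x + stride];
        __syncthreads();  // kept outside the if: all threads must reach the barrier
    }

    if (threadIdx.x == 0)
        partial[blockIdx.x] = tile[0];
}

// Hypothetical host helper: returns one partial sum per block.
std::vector<float> blockSums(const std::vector<float>& host)
{
    const int n = static_cast<int>(host.size());
    const int blocks = (n + BLOCK - 1) / BLOCK;
    std::vector<float> result(blocks);
    if (n == 0) return result;

    float *in = nullptr, *partial = nullptr;
    cudaMalloc(&in, n * sizeof(float));
    cudaMalloc(&partial, blocks * sizeof(float));
    cudaMemcpy(in, host.data(), n * sizeof(float), cudaMemcpyHostToDevice);

    blockSum<<<blocks, BLOCK>>>(in, partial, n);

    // The blocking copy waits for the kernel to finish before reading.
    cudaMemcpy(result.data(), partial, blocks * sizeof(float), cudaMemcpyDeviceToHost);
    cudaFree(in);
    cudaFree(partial);
    return result;
}

int main()
{
    std::vector<float> ones(1024, 1.0f);
    std::vector<float> sums = blockSums(ones);
    for (size_t b = 0; b < sums.size(); ++b)
        printf("block %zu partial sum = %.0f\n", b, sums[b]);
    return 0;
}
```

The barrier after the load matters because a thread reads elements that other threads wrote; without it, the reduction could read a slot before it is filled.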

If threads of different blocks need to communicate, use global memory and split the computation into multiple kernels. There is no synchronization mechanism between blocks.

High parallelism is especially important to hide memory latency by overlapping memory accesses with computation.

© NVIDIA Corporation 2008
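The advice on communication between blocks can be sketched with two kernels that exchange data through global memory. In this illustrative example (the names `squareAll`, `neighborDiff`, and `squaredDifferences` are hypothetical, and error checking is omitted), the second kernel reads a value that a different block may have written in the first. The kernel boundary supplies the ordering: launches on the same stream execute one after the other.

```cuda
#include <cstdio>
#include <vector>
#include <cuda_runtime.h>

// Pass 1: every thread writes one result to global memory.
__global__ void squareAll(const float* in, float* sq, int n)
{
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) sq[i] = in[i] * in[i];
}

// Pass 2: each thread reads its right-hand neighbour, which may have been
// produced by a different block during pass 1.
__global__ void neighborDiff(const float* sq, float* diff, int n)
{
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n - 1) diff[i] = sq[i + 1] - sq[i];
}

// Hypothetical host helper: returns x[i+1]^2 - x[i]^2 for each i.
std::vector<float> squaredDifferences(const std::vector<float>& host)
{
    const int n = static_cast<int>(host.size());
    std::vector<float> result(n > 1 ? n - 1 : 0);
    if (n < 2) return result;

    const int threads = 128;  // assumed block size
    const int blocks = (n + threads - 1) / threads;

    float *in = nullptr, *sq = nullptr, *diff = nullptr;
    cudaMalloc(&in, n * sizeof(float));
    cudaMalloc(&sq, n * sizeof(float));
    cudaMalloc(&diff, (n - 1) * sizeof(float));
    cudaMemcpy(in, host.data(), n * sizeof(float), cudaMemcpyHostToDevice);

    squareAll<<<blocks, threads>>>(in, sq, n);
    // Same (default) stream: this kernel starts only after every block of
    // squareAll has finished, so all of sq[] is complete in global memory.
    neighborDiff<<<blocks, threads>>>(sq, diff, n);

    // The blocking copy waits for both kernels before reading the result.
    cudaMemcpy(result.data(), diff, (n - 1) * sizeof(float), cudaMemcpyDeviceToHost);
    cudaFree(in);
    cudaFree(sq);
    cudaFree(diff);
    return result;
}

int main()
{
    std::vector<float> x(1000);
    for (int i = 0; i < 1000; ++i) x[i] = static_cast<float>(i);

    std::vector<float> d = squaredDifferences(x);
    // Index 127 is the last thread of block 0; it reads sq[128], written by block 1.
    printf("d[127] = %.0f\n", d[127]);  // 128^2 - 127^2 = 255
    return 0;
}
```

Fusing both passes into a single kernel would not be safe here, because a thread in one block could read `sq[i + 1]` before the neighbouring block had written it.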