Tutorial CUDA

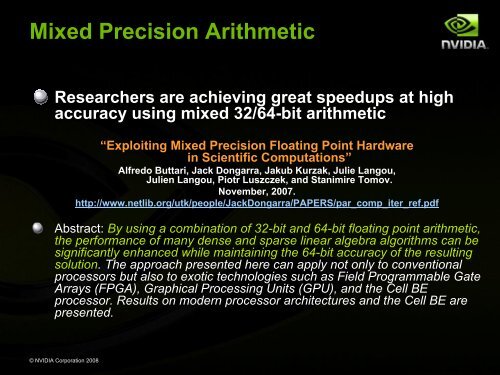

Mixed Precision Arithmetic

Researchers are achieving great speedups at high accuracy using mixed 32/64-bit arithmetic.

© NVIDIA Corporation 2008

“Exploiting Mixed Precision Floating Point Hardware in Scientific Computations”
Alfredo Buttari, Jack Dongarra, Jakub Kurzak, Julie Langou, Julien Langou, Piotr Luszczek, and Stanimire Tomov.
November 2007.
http://www.netlib.org/utk/people/JackDongarra/PAPERS/par_comp_iter_ref.pdf

Abstract: By using a combination of 32-bit and 64-bit floating point arithmetic, the performance of many dense and sparse linear algebra algorithms can be significantly enhanced while maintaining the 64-bit accuracy of the resulting solution. The approach presented here can apply not only to conventional processors but also to exotic technologies such as Field Programmable Gate Arrays (FPGA), Graphical Processing Units (GPU), and the Cell BE processor. Results on modern processor architectures and the Cell BE are presented.
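
One common way to combine 32-bit and 64-bit arithmetic as the abstract describes is iterative refinement: do the expensive factorization in 32-bit, then recover 64-bit accuracy with cheap residual corrections computed in 64-bit. The following is a minimal host-side C++ sketch of that idea for a small dense system, not the authors' implementation and not a CUDA kernel. The names `lu_factor_f32`, `lu_solve_f32`, and `solve_mixed`, the row-major storage, and the default tolerance and iteration limit are all assumptions made for illustration.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <utility>
#include <vector>

// Hypothetical helper: in-place LU factorization with partial pivoting,
// carried out entirely in 32-bit. `lu` is a row-major n x n matrix.
// piv[k] is the row that was swapped with row k at step k.
static bool lu_factor_f32(std::vector<float>& lu,
                          std::vector<std::size_t>& piv, std::size_t n) {
    for (std::size_t k = 0; k < n; ++k) {
        std::size_t p = k;
        for (std::size_t i = k + 1; i < n; ++i)
            if (std::fabs(lu[i * n + k]) > std::fabs(lu[p * n + k])) p = i;
        if (lu[p * n + k] == 0.0f) return false;  // singular in 32-bit
        piv[k] = p;
        if (p != k)
            for (std::size_t j = 0; j < n; ++j)
                std::swap(lu[k * n + j], lu[p * n + j]);
        for (std::size_t i = k + 1; i < n; ++i) {
            lu[i * n + k] /= lu[k * n + k];  // multiplier, stored in L
            for (std::size_t j = k + 1; j < n; ++j)
                lu[i * n + j] -= lu[i * n + k] * lu[k * n + j];
        }
    }
    return true;
}

// Hypothetical helper: solve L U x = P rhs in 32-bit; `x` holds rhs on entry.
static void lu_solve_f32(const std::vector<float>& lu,
                         const std::vector<std::size_t>& piv,
                         std::vector<float>& x, std::size_t n) {
    for (std::size_t k = 0; k < n; ++k) std::swap(x[k], x[piv[k]]);
    for (std::size_t i = 0; i < n; ++i)        // forward substitution (unit L)
        for (std::size_t j = 0; j < i; ++j) x[i] -= lu[i * n + j] * x[j];
    for (std::size_t i = n; i-- > 0;) {        // back substitution (U)
        for (std::size_t j = i + 1; j < n; ++j) x[i] -= lu[i * n + j] * x[j];
        x[i] /= lu[i * n + i];
    }
}

// Mixed-precision iterative refinement for A x = b.
// Returns the number of refinement steps taken, or -1 on failure.
int solve_mixed(const std::vector<double>& A, const std::vector<double>& b,
                std::vector<double>& x, std::size_t n,
                double tol = 1e-12, int max_iter = 30) {
    assert(A.size() == n * n && b.size() == n);
    std::vector<float> lu(A.begin(), A.end());  // demote A to 32-bit
    std::vector<std::size_t> piv(n);
    if (!lu_factor_f32(lu, piv, n)) return -1;  // O(n^3) work, all 32-bit

    std::vector<float> c(b.begin(), b.end());
    lu_solve_f32(lu, piv, c, n);                // initial 32-bit solution
    x.assign(c.begin(), c.end());

    std::vector<double> r(n);
    for (int it = 0; it < max_iter; ++it) {
        double rnorm = 0.0, bnorm = 0.0;
        for (std::size_t i = 0; i < n; ++i) {   // residual r = b - A x, 64-bit
            double s = b[i];
            for (std::size_t j = 0; j < n; ++j) s -= A[i * n + j] * x[j];
            r[i] = s;
            rnorm = std::fmax(rnorm, std::fabs(s));
            bnorm = std::fmax(bnorm, std::fabs(b[i]));
        }
        if (rnorm <= tol * bnorm) return it;    // converged
        for (std::size_t i = 0; i < n; ++i) c[i] = static_cast<float>(r[i]);
        lu_solve_f32(lu, piv, c, n);            // correction, 32-bit
        for (std::size_t i = 0; i < n; ++i) x[i] += c[i];  // update, 64-bit
    }
    return -1;  // did not converge; A may be too ill-conditioned for 32-bit
}
```

In this sketch only the residual and the solution update use 64-bit arithmetic, which costs O(n^2) per step, while the O(n^3) factorization runs once in 32-bit. Calling `solve_mixed(A, b, x, n)` on a well-conditioned system should return a small step count with `x` accurate to roughly 64-bit precision; for badly conditioned matrices the loop may fail to converge, and a full 64-bit solve would be the fallback.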