Notes on computational linguistics.pdf - UCLA Department of ...

Notes on computational linguistics.pdf - UCLA Department of ...

Notes on computational linguistics.pdf - UCLA Department of ...

You also want an ePaper? Increase the reach of your titles

YUMPU automatically turns print PDFs into web optimized ePapers that Google loves.

Stabler - Lx 185/209 2003<br />

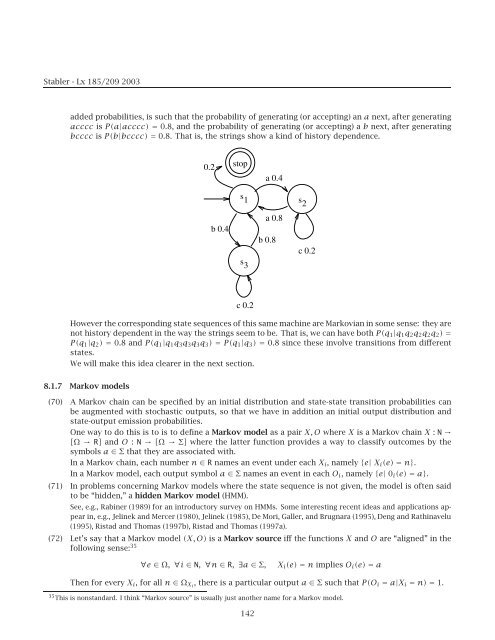

added probabilities, is such that the probability <strong>of</strong> generating (or accepting) an a next, after generating<br />

acccc is P(a|acccc) = 0.8, and the probability <strong>of</strong> generating (or accepting) a b next, after generating<br />

bcccc is P(b|bcccc) = 0.8. That is, the strings show a kind <strong>of</strong> history dependence.<br />

0.2<br />

b 0.4<br />

stop<br />

s 1 s2<br />

s 3<br />

c 0.2<br />

a 0.4<br />

a 0.8<br />

b 0.8<br />

However the corresp<strong>on</strong>ding state sequences <strong>of</strong> this same machine are Markovian in some sense: they are<br />

not history dependent in the way the strings seem to be. That is, we can have both P(q1|q1q2q2q2q2) =<br />

P(q1|q2) = 0.8 andP(q1|q1q3q3q3q3) = P(q1|q3) = 0.8 since these involve transiti<strong>on</strong>s from different<br />

states.<br />

We will make this idea clearer in the next secti<strong>on</strong>.<br />

8.1.7 Markov models<br />

(70) A Markov chain can be specified by an initial distributi<strong>on</strong> and state-state transiti<strong>on</strong> probabilities can<br />

be augmented with stochastic outputs, so that we have in additi<strong>on</strong> an initial output distributi<strong>on</strong> and<br />

state-output emissi<strong>on</strong> probabilities.<br />

One way to do this is to is to define a Markov model as a pair X,O where X is a Markov chain X : N →<br />

[Ω → R] and O : N → [Ω → Σ] where the latter functi<strong>on</strong> provides a way to classify outcomes by the<br />

symbols a ∈ Σ that they are associated with.<br />

In a Markov chain, each number n ∈ R names an event under each Xi, namely{e| Xi(e) = n}.<br />

In a Markov model, each output symbol a ∈ Σ names an event in each Oi, namely{e| 0i(e) = a}.<br />

(71) In problems c<strong>on</strong>cerning Markov models where the state sequence is not given, the model is <strong>of</strong>ten said<br />

to be “hidden,” a hidden Markov model (HMM).<br />

See, e.g., Rabiner (1989) for an introductory survey <strong>on</strong> HMMs. Some interesting recent ideas and applicati<strong>on</strong>s appear<br />

in, e.g., Jelinek and Mercer (1980), Jelinek (1985), De Mori, Galler, and Brugnara (1995), Deng and Rathinavelu<br />

(1995), Ristad and Thomas (1997b), Ristad and Thomas (1997a).<br />

(72) Let’s say that a Markov model (X, O) is a Markov source iff the functi<strong>on</strong>s X and O are “aligned” in the<br />

following sense: 35<br />

c 0.2<br />

∀e ∈ Ω, ∀i ∈ N, ∀n ∈ R, ∃a ∈ Σ, Xi(e) = n implies Oi(e) = a<br />

Then for every Xi, foralln ∈ ΩXi , there is a particular output a ∈ Σ such that P(Oi = a|Xi = n) = 1.<br />

35 This is n<strong>on</strong>standard. I think “Markov source” is usually just another name for a Markov model.<br />

142