Notes on computational linguistics.pdf - UCLA Department of ...

Stabler - Lx 185/209 2003

8.1.13 Controversies

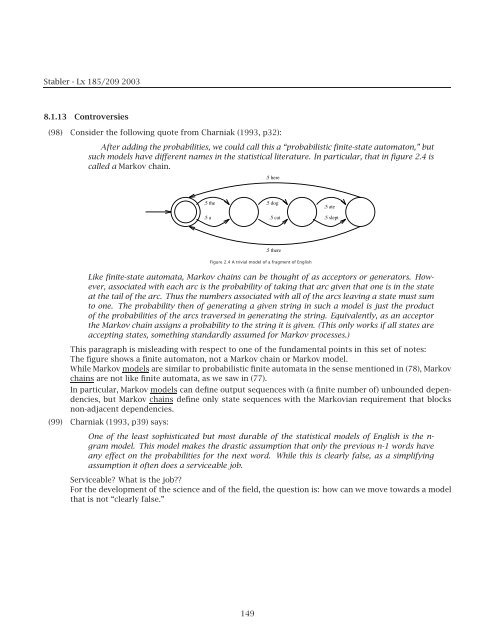

(98) Consider the following quote from Charniak (1993, p32):

After adding the probabilities, we could call this a “probabilistic finite-state automaton,” but such models have different names in the statistical literature. In particular, that in figure 2.4 is called a Markov chain.

[Figure 2.4: A trivial model of a fragment of English. Arc labels, each with probability .5: the, a, dog, cat, ate, slept, here, there.]

Like finite-state automata, Markov chains can be thought of as acceptors or generators. However, associated with each arc is the probability of taking that arc given that one is in the state at the tail of the arc. Thus the numbers associated with all of the arcs leaving a state must sum to one. The probability then of generating a given string in such a model is just the product of the probabilities of the arcs traversed in generating the string. Equivalently, as an acceptor the Markov chain assigns a probability to the string it is given. (This only works if all states are accepting states, something standardly assumed for Markov processes.)

This paragraph is misleading with respect to one of the fundamental points in this set of notes: The figure shows a finite automaton, not a Markov chain or Markov model.

While Markov models are similar to probabilistic finite automata in the sense mentioned in (78), Markov chains are not like finite automata, as we saw in (77).

In particular, Markov models can define output sequences with (a finite number of) unbounded dependencies, but Markov chains define only state sequences with the Markovian requirement that blocks non-adjacent dependencies.
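The computation described in the quote in (98) can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the topology below (a linear chain of states 0 to 4, with two arcs of probability .5 leaving each non-final state) is a guess, since only the arc labels of Figure 2.4 survive here, and the names `ARCS` and `string_probability` are hypothetical. Note that the words label the arcs rather than the states, so the object being coded is a probabilistic finite automaton.

```python
from math import prod  # available in Python 3.8+

# ASSUMED topology, not taken from the source: a linear chain of states 0..4.
# Each entry maps an arc label to (probability, target state).
ARCS = {
    0: {"the": (0.5, 1), "a": (0.5, 1)},
    1: {"dog": (0.5, 2), "cat": (0.5, 2)},
    2: {"ate": (0.5, 3), "slept": (0.5, 3)},
    3: {"here": (0.5, 4), "there": (0.5, 4)},
    4: {},
}

def string_probability(words, start=0):
    """Product of the arc probabilities along the path for `words`.

    Every state is treated as accepting, as the quote assumes.
    Returns 0.0 if some word has no arc from the current state.
    """
    state = start
    probs = []
    for w in words:
        if w not in ARCS[state]:
            return 0.0
        p, state = ARCS[state][w]
        probs.append(p)
    return prod(probs)

# The probabilities on the arcs leaving each non-final state sum to one.
assert all(sum(p for p, _ in out.values()) == 1.0
           for out in ARCS.values() if out)

print(string_probability("the dog ate here".split()))  # 0.0625
```

Under these assumptions, each of the sixteen four-word strings gets probability .5 × .5 × .5 × .5 = .0625, and any string that leaves the chain gets 0.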

(99) Charniak (1993, p39) says:

One of the least sophisticated but most durable of the statistical models of English is the n-gram model. This model makes the drastic assumption that only the previous n-1 words have any effect on the probabilities for the next word. While this is clearly false, as a simplifying assumption it often does a serviceable job.

Serviceable? What is the job??<br />

For the development of the science and of the field, the question is: how can we move towards a model that is not “clearly false”?
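To make the assumption quoted in (99) concrete, here is a minimal sketch of an n-gram estimator in Python. The toy corpus, the relative-frequency estimate, the `<s>` padding symbol, and the names `train_ngrams` and `next_word_probs` are all assumptions introduced for illustration; none of them comes from Charniak or from these notes.

```python
from collections import Counter, defaultdict

# Hypothetical toy corpus, for illustration only.
CORPUS = [
    "the dog ate here",
    "the cat slept there",
    "a dog slept here",
]

def train_ngrams(sentences, n=2):
    """Count which words follow each context of n-1 words."""
    counts = defaultdict(Counter)
    for s in sentences:
        words = ["<s>"] * (n - 1) + s.split()  # pad the sentence start
        for i in range(n - 1, len(words)):
            context = tuple(words[i - n + 1:i])
            counts[context][words[i]] += 1
    return counts

def next_word_probs(counts, history, n=2):
    """Relative-frequency estimate of P(next word | last n-1 words).

    Everything in `history` before the last n-1 words is ignored:
    that is the n-gram assumption.
    """
    padded = ["<s>"] * (n - 1) + list(history)
    context = tuple(padded[len(padded) - (n - 1):])
    total = sum(counts[context].values())
    if total == 0:
        return {}
    return {w: c / total for w, c in counts[context].items()}

counts = train_ngrams(CORPUS, n=2)
# With n=2, histories that end in the same word are indistinguishable.
print(next_word_probs(counts, ["the", "dog"]))  # {'ate': 0.5, 'slept': 0.5}
print(next_word_probs(counts, ["a", "dog"]))    # {'ate': 0.5, 'slept': 0.5}
```

The two calls return the same distribution because the model sees only the final word of each history. Any dependency that reaches back further than n-1 words is invisible to it.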
