- Page 1 and 2:

October 2007 Volume 10 Number 4

- Page 3 and 4:

Abstracting and Indexing Educationa

- Page 5 and 6:

The Relationship of Kolb Learning S

- Page 7 and 8:

a question about learning together

- Page 9 and 10:

affordances of networked learning s

- Page 11 and 12:

included a unit on collaborative le

- Page 13 and 14:

on at different times and work indi

- Page 15 and 16:

• the numerous evaluation studies

- Page 17 and 18:

wanted to work. The same inquiry pr

- Page 19 and 20:

usability studies have a place in t

- Page 21 and 22:

Milrad, M., & Jackson, M. (2007).

- Page 23 and 24:

information- and communication tech

- Page 25 and 26:

Interactive Examination, the studen

- Page 27 and 28:

Table 1. Criteria for grading OD st

- Page 29 and 30:

content. Differences in the attitud

- Page 31 and 32:

Even though further research on the

- Page 33 and 34:

Olofsson, A. D. (2007). Participati

- Page 35 and 36:

Etzioni (1993) points out that an i

- Page 37 and 38: programme. They are rather construc

- Page 39 and 40: to be that each individual trainee

- Page 41 and 42: Bernmark-Ottosson, A. (2005). Demok

- Page 43 and 44: The Knowledge Foundation (2005). IT

- Page 45 and 46: Figure 1. Design Theories in contex

- Page 47 and 48: attention as we frequently discuss

- Page 49 and 50: Blog Reflection This design concept

- Page 51 and 52: Narrative Structure (Form) Content

- Page 53 and 54: Löwgren, J., & Stolterman, E. (200

- Page 55 and 56: critique, neither do the single ind

- Page 57 and 58: suggested by Ljungberg (1999b) we c

- Page 59 and 60: the del.icio.us site (see figure 3)

- Page 61 and 62: Device cultures It is not only the

- Page 63 and 64: built using a camera-equipped PDA ru

- Page 65 and 66: Jones, C., Dirckinck-Holmfeld, L.,

- Page 67 and 68: Milrad, M., & Spikol, D. (2007). An

- Page 69 and 70: components to be able to deal with

- Page 71 and 72: compulsory throughout the project.

- Page 73 and 74: only with their existing day-to-day

- Page 75 and 76: As our work continues, we will try

- Page 77 and 78: cognition as well as self-regulated

- Page 79 and 80: Mobile mind map tool for stimulatin

- Page 81 and 82: the pictorial knowledge representat

- Page 83 and 84: Ericsson, K. A., & Simon, H. A. (19

- Page 85 and 86: Al-A'ali, M. (2007). Implementation

- Page 87: complex cognitive skills involve em

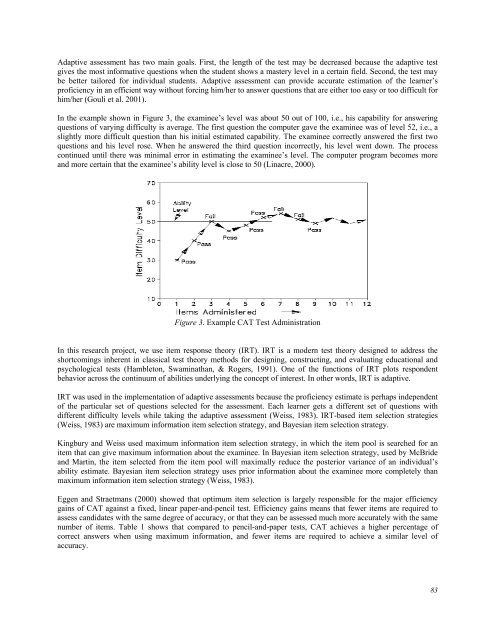

- Page 91 and 92: It is agreed that the difficulty le

- Page 93 and 94: Figure 7. Our newly added factors i

- Page 95 and 96: question factors according to IRT;

- Page 97 and 98: Instructure 1 Login User-ID Passwor

- Page 99 and 100: Shute, V., & Towle, B. (2003). Adap

- Page 101 and 102: distinguish between partial knowled

- Page 103 and 104: response is identified, then the sco

- Page 105 and 106: are stored in the answer record and

- Page 107 and 108: (7) $\mathrm{MNSQ} = \sum W_{ni} Z_{ni}^{2} / \sum W_{ni} =$

- Page 109 and 110: in the course. A randomized block d

- Page 111 and 112: To test whether ET can effectively

- Page 113 and 114: course, more comparison tests could

- Page 115 and 116: Fleischmann, K. R. (2007). Standard

- Page 117 and 118: educational standards in practice t

- Page 119 and 120: laboratory activities, which are bu

- Page 121 and 122: process is currently a largely top-

- Page 123 and 124: Hsu, Y.-S., Wu, H.-K., & Hwang, F.-

- Page 125 and 126: evolution with computing technology

- Page 127 and 128: Resources 0.55 0.72 e17 In my schoo

- Page 129 and 130: teachers’ instructional evolution

- Page 131 and 132: Regression models indicating relati

- Page 133 and 134: of beliefs about integrating comput

- Page 135 and 136: Sinko, M., & Lehtinen, E. (1999). T

- Page 137 and 138: concept of social support. Lastly,

- Page 139 and 140:

Procedure Content analysis procedur

- Page 141 and 142:

and mice are out of order. I have t

- Page 143 and 144:

feature of supportive online groups

- Page 145 and 146:

Anderson, J., & Lee, A. (1995). Lit

- Page 147 and 148:

Schwab, R. L., Jackson, S. E., & Sc

- Page 149 and 150:

Investigating the problem An extens

- Page 151 and 152:

meets the anywhere, anytime require

- Page 153 and 154:

minimise housekeeping tasks and fre

- Page 155 and 156:

3. Join Group Discussion 4. Attend

- Page 157 and 158:

Figure 4. Learning Shell showing He

- Page 159 and 160:

engineering the Learning Shell so i

- Page 161 and 162:

Olfos, R., & Zulantay, H. (2007). R

- Page 163 and 164:

portion of CourseInfo requires that

- Page 165 and 166:

Table 2. Assignment evaluation resp

- Page 167 and 168:

E-3c. Likert scale on usability: Th

- Page 169 and 170:

organized in four groups, namely: T

- Page 171 and 172:

The correlations were not high enou

- Page 173 and 174:

Data illustrate some reliability an

- Page 175 and 176:

nature, some specific core objectiv

- Page 177 and 178:

Frederiksen, J.R., & Collins, A. (1

- Page 179 and 180:

Bottino, R. M., & Robotti, E. (2007

- Page 181 and 182:

The Text Editor allows the editing

- Page 183 and 184:

Mathematical content and structure

- Page 185 and 186:

Consolidation exercises Solution co

- Page 187 and 188:

As to course components, the activi

- Page 189 and 190:

handle more formal representations

- Page 191 and 192:

Lagrange, J. B., Artigue, M., Labor

- Page 193 and 194:

processed information was classifie

- Page 195 and 196:

Method Participants The participant

- Page 197 and 198:

Table 5. Learning outcomes of diffe

- Page 199 and 200:

peers and benefited most from discu

- Page 201 and 202:

Kayes, D.C. (2005). Internal validi

- Page 203 and 204:

This paper considers the potential

- Page 205 and 206:

The first assumption ensures that c

- Page 207 and 208:

allowed to rise when external fundi

- Page 209 and 210:

The second option, lowering the cos

- Page 211 and 212:

Downes, S. (2003). Design and Reusa

- Page 213 and 214:

Appendix A. Good-enough approaches

- Page 215 and 216:

Rapid Prototyping and Design Based

- Page 217 and 218:

Initial Design Because the field-ba

- Page 219 and 220:

• reducing the number of required

- Page 221 and 222:

that they felt this hindrance; I fe

- Page 223 and 224:

goals” (Edelson, 2002, p. 114) wa

- Page 225 and 226:

more and more from the periphery of

- Page 227 and 228:

Edelson, M. (1988). The hermeneutic

- Page 229 and 230:

Wang, H.-Y., & Chen, S. M. (2007).

- Page 231 and 232:

If the universe of discourse U is a

- Page 233 and 234:

we can see that S( X ) − S( Y ) S

- Page 235 and 236:

Example 1: Let Ã and B̃ be two va

- Page 237 and 238:

columns shown in Table 2, where 1

- Page 239 and 240:

(3) Find the maximum value among th

- Page 241 and 242:

By applying Eq. (7), we can get the

- Page 243 and 244:

Q.2 carries 25 marks, Q.3 carries 2

- Page 245 and 246:

the proposed methods can evaluate s

- Page 247 and 248:

Gogoulou, A., Gouli, E., Grigoriado

- Page 249 and 250:

enabling learner (subject) to work

- Page 251 and 252:

• Self-, Peer- and Collaborative-

- Page 253 and 254:

(iii) Regulates the communication:

- Page 255 and 256:

Figure 3. A screen shot of the SCAL

- Page 257 and 258:

2nd study: Thirty-five students pa

- Page 259 and 260:

them characterized it as time and e

- Page 261 and 262:

Soller, A. (2001). Supporting Socia

- Page 263 and 264:

making reference to the others wher

- Page 265 and 266:

Solution 2.2: Deliberately select h

- Page 267 and 268:

just near a deadline, when it may b

- Page 269 and 270:

assessed on an individual basis. Wi

- Page 271 and 272:

- and even appreciated - by student

- Page 273 and 274:

Panitz, T., & Panitz, P. (1998). En

- Page 275 and 276:

Concept Mapping Concept mapping by

- Page 277 and 278:

Research Questions In order to dete

- Page 279 and 280:

with their teacher but rather than

- Page 281 and 282:

Tukey’s HSD post hoc test reveale

- Page 283 and 284:

collaboratively during study time?

- Page 285 and 286:

Jegede, O.J., Alaiyemola, F.F., & O

- Page 287 and 288:

resources. The use of synchronous te

- Page 289 and 290:

The use of digital communication mo

- Page 291 and 292:

contributed more information and en

- Page 293 and 294:

3. Use the second screen for the le

- Page 295 and 296:

• Use drawing to develop writing

- Page 297 and 298:

student and teacher on the synchron

- Page 299 and 300:

Vogel, J. J., Vogel, D. S., Cannon-

- Page 301 and 302:

Cases The first set of ten case stu

- Page 303 and 304:

Kılıçkaya, F. (2007). Website re