- Page 1 and 2:

PC Architecture - a book by Michael

- Page 3 and 4:

● Next chapter. ● Previous chap

- Page 5 and 6:

Copyright Michael Karbo , Denmark,

- Page 7 and 8:

IBM-compatible PCs, and as the year

- Page 9 and 10:

8 bit 8080 Small CP/M based home co

- Page 11 and 12:

In the winter of 1939 Atanasoff was

- Page 13 and 14:

Copyright Michael Karbo and ELI Aps

- Page 15 and 16:

Fig. 12. Cray supercomputer, 1976.

- Page 17 and 18:

Copyright Michael Karbo and ELI Aps

- Page 19 and 20:

Internal devices External devices M

- Page 21 and 22:

Fig. 21. Underneath the hard disk y

- Page 23 and 24:

Fig. 24. The motherboard is the hub

- Page 25 and 26:

Fig. 27. Here you can see three (wh

- Page 27 and 28:

Fig. 31. At the bottom left, you ca

- Page 29 and 30:

● Higher clock frequencies (which

- Page 31 and 32:

Which CPU? Fig. 35. The underside o

- Page 33 and 34:

Fig. 38. A CPU is shown here withou

- Page 35 and 36:

Fig. 39. If you are not sure which

- Page 37 and 38:

Copyright Michael Karbo and ELI Aps

- Page 39 and 40:

Fig. 44. The two chips which make u

- Page 41 and 42:

Copyright Michael Karbo and ELI Aps

- Page 43 and 44:

Sound facilities in a chipset canno

- Page 45 and 46:

● Other network, screen and sound

- Page 47 and 48:

Fig. 54. The CPU’s working speed

- Page 49 and 50:

Copyright Michael Karbo and ELI Aps

- Page 51 and 52:

Less power consumption The types of

- Page 53 and 54:

1993 Pentium 0.8/0.5/0.35 microns 1

- Page 55 and 56:

Fig. 67. The latest generations of

- Page 57 and 58:

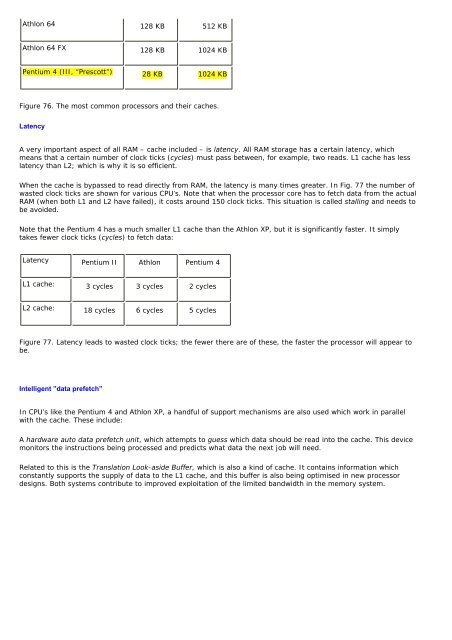

Fig. 68. Cache RAM is much faster t

- Page 59 and 60:

Copyright Michael Karbo and ELI Aps

- Page 61 and 62:

AMD Athlon 64 64 bits 2200 MHz 17,6

- Page 63 and 64:

Fig. 78. The WCPUID program reports

- Page 65 and 66:

Copyright Michael Karbo and ELI Aps

- Page 67 and 68:

As the years have passed, changes h

- Page 69 and 70:

The pipeline is like a reverse asse

- Page 71 and 72:

Motorola G4 4 500 MHz Motorola G4e

- Page 73 and 74:

Fig. 91. In the Pentium 4, the inst

- Page 75 and 76:

Fig. 92. Re-writing numbers in floa

- Page 77 and 78:

will become. ● Improvements to th

- Page 79 and 80:

point numbers with just one instruc

- Page 81 and 82:

The first PC’s were 16-bit machin

- Page 83 and 84:

another in all later generations of

- Page 85 and 86:

Fig. 105. L2 cache running at half

- Page 87 and 88:

Fig. 108. Extreme CPU cooling using

- Page 89 and 90:

Copyright Michael Karbo and ELI Aps

- Page 91 and 92:

Figur 112. The LGA 775 socket for P

- Page 93 and 94:

Figur 114. In the Athlon 64 the mem

- Page 95 and 96:

Figur 116. There are scores of diff

- Page 97 and 98:

present, CPU’s and motherboards h

- Page 99 and 100:

Fig. 119. With this architecture, t

- Page 101 and 102:

Copyright Michael Karbo and ELI Aps

- Page 103 and 104:

Figur 124. A gigantic cooler with t

- Page 105 and 106:

Copyright Michael Karbo and ELI Aps

- Page 107 and 108:

Module or chip size All RAM modules

- Page 109 and 110:

Fig. 135. Older RAM modules. FPM RA

- Page 111 and 112: In the beginning the problem with D

- Page 113 and 114: However, RAM also has to match the

- Page 115 and 116: Modern motherboards for desktop use

- Page 117 and 118: The new architecture is used for bo

- Page 119 and 120: Figur 148. Report from the freeware

- Page 121 and 122: Fig. 150. In reality, the RAM needs

- Page 123 and 124: Fig. 152. The data path to the vide

- Page 125 and 126: Texture cache and RAMDAC Textures a

- Page 127 and 128: opportunities for extension really

- Page 129 and 130: Clock freq. 66 - 1066 MHz Typically

- Page 131 and 132: Figur 162. The features in this mot

- Page 133 and 134: Copyright Michael Karbo and ELI Aps

- Page 135 and 136: Fig. 168. ISA based Sound Blaster s

- Page 137 and 138: As a result of all this, the periph

- Page 139 and 140: Fig. 174. Using the CMOS setup prog

- Page 141 and 142: Copyright Michael Karbo and ELI Aps

- Page 143 and 144: Figure 44. The two chips which make

- Page 145 and 146: Figure 47. The chipset’s south br

- Page 147 and 148: Figure 51. This PC has two sound ca

- Page 149 and 150: You will most likely want to have t

- Page 151 and 152: The trend is towards ever increasin

- Page 153 and 154: A grand new world … We can expect

- Page 155 and 156: here. Wafers and die size Another C

- Page 157 and 158: Copyright Michael Karbo and ELI Aps

- Page 159 and 160: Figure 69. A cache increases the CP

- Page 161: Celeron (later gen.), Pentium III,

- Page 165 and 166: Xeon processors are incredibly expe

- Page 167 and 168: You can no doubt see that it wouldn

- Page 169 and 170: Copyright Michael Karbo and ELI Aps

- Page 171 and 172: Intel Pentium 4 (first generation)

- Page 173 and 174: Figure 90. The passage of instructi

- Page 175 and 176: AMD’s 32 bit Athlon line can bare

- Page 177 and 178: FPU - the number cruncher Floating

- Page 179 and 180: for 32-bit integers, and one for 80

- Page 181 and 182: Copyright Michael Karbo and ELI Aps

- Page 183 and 184: Figure 101. Two 486’s from two di

- Page 185 and 186: Figure 105. L2 cache running at hal

- Page 187 and 188: As was mentioned earlier, the older

- Page 189 and 190: Figur 112. The LGA 775 socket for P

- Page 191 and 192: The Opteron is the most expensive a

- Page 193 and 194: Copyright Michael Karbo and ELI Aps

- Page 195 and 196: Copyright Michael Karbo and ELI Aps

- Page 197 and 198: Figure 120. The bus system for an 8

- Page 199 and 200: Figure 123. Setting the CPU voltage

- Page 201 and 202: Copyright Michael Karbo and ELI Aps

- Page 203 and 204: Figure 129. A 512 MB DDR RAM module

- Page 205 and 206: DDR2-400 400 MHz DDR2 RAM DDR2-533

- Page 207 and 208: Figure 137. The motherboard BIOS ca

- Page 209 and 210: Of course you want to choose the be

- Page 211 and 212: Under the Processes tab, you can se

- Page 213 and 214:

Copyright Michael Karbo and ELI Aps

- Page 215 and 216:

Figure 147. The architecture surrou

- Page 217 and 218:

Figure 149. The new chipset archite

- Page 219 and 220:

Copyright Michael Karbo and ELI Aps

- Page 221 and 222:

At the same time, the PCI system is

- Page 223 and 224:

Figure 156. The black PCI Express X

- Page 225 and 226:

Figure 157. The south bridge connec

- Page 227 and 228:

● The MCI, EISA and VL buses - fa

- Page 229 and 230:

Figure 164. The ATA interface works

- Page 231 and 232:

The ISA bus is thus the I/O bus whi

- Page 233 and 234:

MCA from 1987 Advanced I/O bus from

- Page 235 and 236:

Figure 172. The PCI bus is being re

- Page 237 and 238:

Figure 174. Using the CMOS setup pr

- Page 239 and 240:

Copyright Michael Karbo and ELI Aps

- Page 241 and 242:

Figure 178. The keyboard controller

- Page 243 and 244:

IRQ’s. It didn’t take much befo

- Page 245 and 246:

Memory-mapped I/O All devices, adap

- Page 247 and 248:

Copyright Michael Karbo and ELI Aps

- Page 249 and 250:

Figure 188. Here is the Audigy card

- Page 251 and 252:

Finally, I will look at the FireWir

- Page 253 and 254:

Copyright Michael Karbo and ELI Aps

- Page 255 and 256:

The super I/O controller is connect

- Page 257 and 258:

Figure 196. In the middle you see t

- Page 259 and 260:

Figure 200. The UART controller rep

- Page 261 and 262:

number of different SCSI standards.

- Page 263 and 264:

Figur 205. SCSI hard disks anno 200

- Page 265 and 266:

Figure 207. A USB-based trackball -

- Page 267 and 268:

USB has thus made the serial ports

- Page 269 and 270:

Figure 211. In the middle of the pi

- Page 271 and 272:

Figure 213. An SATA-hard disk (here

- Page 273 and 274:

from the disk. This magnetism will

- Page 275 and 276:

In the ”old days”, interfaces s

- Page 277 and 278:

Figure 220. This motherboard has an

- Page 279 and 280:

Figure 224. Jumper to change betwee

- Page 281 and 282:

Figure 228. An ATA-RAID controller

- Page 283 and 284:

The existing PATA system has a limi

- Page 285 and 286:

Copyright Michael Karbo and ELI Aps

- Page 287 and 288:

CMOS and Setup The startup program

- Page 289 and 290:

Figure 237. Standard CMOS Features

- Page 291 and 292:

Figure 239. Advanced Chipset Featur

- Page 293 and 294:

Figure 241. The system information

- Page 295 and 296:

have seen, in the ROM circuits on t

- Page 297 and 298:

To perform an upgrade you first dow

- Page 299 and 300:

DMA. Direct Memory Access. A system