- Page 1 and 2:

Is Parallel Programming Hard, And,

- Page 3 and 4:

Contents 1 Introduction 1 1.1 Histori

- Page 5 and 6:

CONTENTS v 6 Locking 67 6.1 Staying Al

- Page 7 and 8:

CONTENTS vii B Synchronization Primit

- Page 9 and 10:

CONTENTS ix E.7.1 Introduction to Pre

- Page 11 and 12:

Preface The purpose of this book is

- Page 13 and 14:

Chapter 1 Introduction Parallel progr

- Page 15 and 16:

1.2. PARALLEL PROGRAMMING GOALS 3 CP

- Page 17 and 18:

1.3. ALTERNATIVES TO PARALLEL PROGR

- Page 19 and 20:

1.4. WHAT MAKES PARALLEL PROGRAMMIN

- Page 21 and 22:

1.5. GUIDE TO THIS BOOK 9 other hand

- Page 23 and 24:

Chapter 2 Hardware and its Habits Mos

- Page 25: 2.1. OVERVIEW 13 Therefore, as shown

- Page 28 and 29: 16 CHAPTER 2. HARDWARE AND ITS HABI

- Page 30 and 31: 18 CHAPTER 2. HARDWARE AND ITS HABI

- Page 32 and 33: 20 CHAPTER 3. TOOLS OF THE TRADE1 p

- Page 34 and 35: 22 CHAPTER 3. TOOLS OF THE TRADE1 p

- Page 36 and 37: 24 CHAPTER 3. TOOLS OF THE TRADE1.1

- Page 38 and 39: 26 CHAPTER 3. TOOLS OF THE TRADEQui

- Page 40 and 41: 28 CHAPTER 3. TOOLS OF THE TRADE

- Page 42 and 43: 30 CHAPTER 4. COUNTING1 atomic_t co

- Page 44 and 45: 32 CHAPTER 4. COUNTING4.2.3 Eventua

- Page 46 and 47: 34 CHAPTER 4. COUNTINGvanish when t

- Page 48 and 49: 36 CHAPTER 4. COUNTINGper-thread va

- Page 50 and 51: 38 CHAPTER 4. COUNTING1 unsigned lo

- Page 52 and 53: 40 CHAPTER 4. COUNTING1 unsigned lo

- Page 54 and 55: 42 CHAPTER 4. COUNTING1 #define THE

- Page 56 and 57: 44 CHAPTER 4. COUNTING1 unsigned lo

- Page 58 and 59: 46 CHAPTER 4. COUNTINGReadsAlgorith

- Page 60 and 61: 48 CHAPTER 5. PARTITIONING AND SYNC

- Page 62 and 63: 50 CHAPTER 5. PARTITIONING AND SYNC

- Page 64 and 65: 52 CHAPTER 5. PARTITIONING AND SYNC

- Page 66 and 67: 54 CHAPTER 5. PARTITIONING AND SYNC

- Page 68 and 69: 56 CHAPTER 5. PARTITIONING AND SYNC

- Page 70 and 71: 58 CHAPTER 5. PARTITIONING AND SYNC

- Page 72 and 73: 60 CHAPTER 5. PARTITIONING AND SYNC

- Page 74 and 75: 62 CHAPTER 5. PARTITIONING AND SYNC

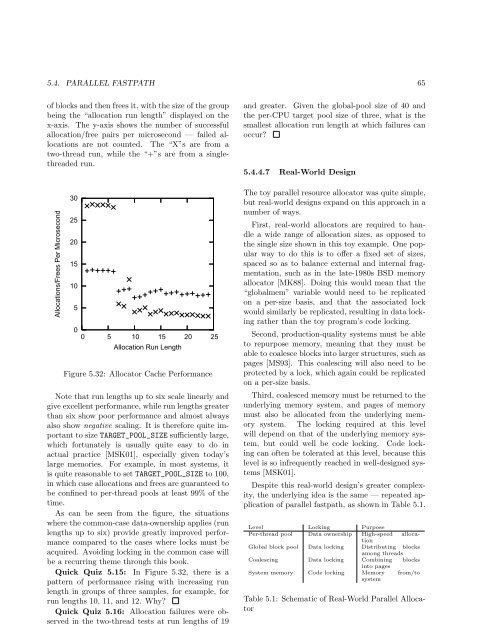

- Page 78 and 79: 66 CHAPTER 5. PARTITIONING AND SYNC

- Page 80 and 81: 68 CHAPTER 6. LOCKING1 int delete(i

- Page 82 and 83: 70 CHAPTER 7. DATA OWNERSHIP

- Page 84 and 85: 72 CHAPTER 8. DEFERRED PROCESSINGfo

- Page 86 and 87: 74 CHAPTER 8. DEFERRED PROCESSINGth

- Page 88 and 89: 76 CHAPTER 8. DEFERRED PROCESSING

- Page 90 and 91: 78 CHAPTER 8. DEFERRED PROCESSINGfi

- Page 92 and 93: 80 CHAPTER 8. DEFERRED PROCESSINGti

- Page 94 and 95: 82 CHAPTER 8. DEFERRED PROCESSINGNo

- Page 96 and 97: 84 CHAPTER 8. DEFERRED PROCESSING12

- Page 98 and 99: 86 CHAPTER 8. DEFERRED PROCESSING1

- Page 100 and 101: 88 CHAPTER 8. DEFERRED PROCESSINGvo

- Page 102 and 103: 90 CHAPTER 8. DEFERRED PROCESSINGLi

- Page 104 and 105: 92 CHAPTER 8. DEFERRED PROCESSINGpe

- Page 106 and 107: 94 CHAPTER 8. DEFERRED PROCESSINGCa

- Page 108 and 109: 96 CHAPTER 8. DEFERRED PROCESSINGTh

- Page 110 and 111: 98 CHAPTER 8. DEFERRED PROCESSING1

- Page 112 and 113: 100 CHAPTER 8. DEFERRED PROCESSING1

- Page 114 and 115: 102 CHAPTER 8. DEFERRED PROCESSING1

- Page 116 and 117: 104 CHAPTER 8. DEFERRED PROCESSINGs

- Page 118 and 119: 106 CHAPTER 8. DEFERRED PROCESSINGo

- Page 120 and 121: 108 CHAPTER 8. DEFERRED PROCESSING

- Page 122 and 123: 110 CHAPTER 9. APPLYING RCU1 struct

- Page 124 and 125: 112 CHAPTER 9. APPLYING RCU

- Page 126 and 127:

114 CHAPTER 10. VALIDATION: DEBUGGI

- Page 128 and 129:

116 CHAPTER 11. DATA STRUCTURES

- Page 130 and 131:

118 CHAPTER 12. ADVANCED SYNCHRONIZ

- Page 132 and 133:

120 CHAPTER 12. ADVANCED SYNCHRONIZ

- Page 134 and 135:

122 CHAPTER 12. ADVANCED SYNCHRONIZ

- Page 136 and 137:

124 CHAPTER 12. ADVANCED SYNCHRONIZ

- Page 138 and 139:

126 CHAPTER 12. ADVANCED SYNCHRONIZ

- Page 140 and 141:

128 CHAPTER 12. ADVANCED SYNCHRONIZ

- Page 142 and 143:

130 CHAPTER 12. ADVANCED SYNCHRONIZ

- Page 144 and 145:

132 CHAPTER 12. ADVANCED SYNCHRONIZ

- Page 146 and 147:

134 CHAPTER 12. ADVANCED SYNCHRONIZ

- Page 148 and 149:

136 CHAPTER 12. ADVANCED SYNCHRONIZ

- Page 150 and 151:

138 CHAPTER 13. EASE OF USE Figure 1

- Page 152 and 153:

140 CHAPTER 13. EASE OF USE

- Page 154 and 155:

142 CHAPTER 14. TIME MANAGEMENT

- Page 156 and 157:

144 CHAPTER 15. CONFLICTING VISIONS

- Page 158 and 159:

146 CHAPTER 15. CONFLICTING VISIONS

- Page 160 and 161:

148 CHAPTER 15. CONFLICTING VISIONS

- Page 162 and 163:

150 CHAPTER 15. CONFLICTING VISIONS

- Page 164 and 165:

152 CHAPTER 15. CONFLICTING VISIONS

- Page 166 and 167:

154 APPENDIX A. IMPORTANT QUESTIONS

- Page 168 and 169:

156 APPENDIX A. IMPORTANT QUESTIONS

- Page 170 and 171:

158 APPENDIX B. SYNCHRONIZATION PRI

- Page 172 and 173:

160 APPENDIX B. SYNCHRONIZATION PRI

- Page 174 and 175:

162 APPENDIX C. WHY MEMORY BARRIERS

- Page 176 and 177:

164 APPENDIX C. WHY MEMORY BARRIERS

- Page 178 and 179:

166 APPENDIX C. WHY MEMORY BARRIERS

- Page 180 and 181:

168 APPENDIX C. WHY MEMORY BARRIERS

- Page 182 and 183:

170 APPENDIX C. WHY MEMORY BARRIERS

- Page 184 and 185:

172 APPENDIX C. WHY MEMORY BARRIERS

- Page 186 and 187:

174 APPENDIX C. WHY MEMORY BARRIERS

- Page 188 and 189:

176 APPENDIX C. WHY MEMORY BARRIERS

- Page 190 and 191:

178 APPENDIX C. WHY MEMORY BARRIERS

- Page 192 and 193:

180 APPENDIX C. WHY MEMORY BARRIERS

- Page 194 and 195:

182 APPENDIX C. WHY MEMORY BARRIERS

- Page 196 and 197:

184 APPENDIX D. READ-COPY UPDATE IM

- Page 198 and 199:

186 APPENDIX D. READ-COPY UPDATE IM

- Page 200 and 201:

188 APPENDIX D. READ-COPY UPDATE IM

- Page 202 and 203:

190 APPENDIX D. READ-COPY UPDATE IM

- Page 204 and 205:

192 APPENDIX D. READ-COPY UPDATE IM

- Page 206 and 207:

194 APPENDIX D. READ-COPY UPDATE IM

- Page 208 and 209:

196 APPENDIX D. READ-COPY UPDATE IM

- Page 210 and 211:

198 APPENDIX D. READ-COPY UPDATE IM

- Page 212 and 213:

200 APPENDIX D. READ-COPY UPDATE IM

- Page 214 and 215:

202 APPENDIX D. READ-COPY UPDATE IM

- Page 216 and 217:

204 APPENDIX D. READ-COPY UPDATE IM

- Page 218 and 219:

206 APPENDIX D. READ-COPY UPDATE IM

- Page 220 and 221:

208 APPENDIX D. READ-COPY UPDATE IM

- Page 222 and 223:

210 APPENDIX D. READ-COPY UPDATE IM

- Page 224 and 225:

212 APPENDIX D. READ-COPY UPDATE IM

- Page 226 and 227:

214 APPENDIX D. READ-COPY UPDATE IM

- Page 228 and 229:

216 APPENDIX D. READ-COPY UPDATE IM

- Page 230 and 231:

218 APPENDIX D. READ-COPY UPDATE IM

- Page 232 and 233:

220 APPENDIX D. READ-COPY UPDATE IM

- Page 234 and 235:

222 APPENDIX D. READ-COPY UPDATE IM

- Page 236 and 237:

224 APPENDIX D. READ-COPY UPDATE IM

- Page 238 and 239:

226 APPENDIX D. READ-COPY UPDATE IM

- Page 240 and 241:

228 APPENDIX D. READ-COPY UPDATE IM

- Page 242 and 243:

230 APPENDIX D. READ-COPY UPDATE IM

- Page 244 and 245:

232 APPENDIX D. READ-COPY UPDATE IM

- Page 246 and 247:

234 APPENDIX D. READ-COPY UPDATE IM

- Page 248 and 249:

236 APPENDIX D. READ-COPY UPDATE IM

- Page 250 and 251:

238 APPENDIX D. READ-COPY UPDATE IM

- Page 252 and 253:

240 APPENDIX D. READ-COPY UPDATE IM

- Page 254 and 255:

242 APPENDIX D. READ-COPY UPDATE IM

- Page 256 and 257:

244 APPENDIX E. FORMAL VERIFICATION

- Page 258 and 259:

246 APPENDIX E. FORMAL VERIFICATION

- Page 260 and 261:

248 APPENDIX E. FORMAL VERIFICATION

- Page 262 and 263:

250 APPENDIX E. FORMAL VERIFICATION

- Page 264 and 265:

252 APPENDIX E. FORMAL VERIFICATION

- Page 266 and 267:

254 APPENDIX E. FORMAL VERIFICATION

- Page 268 and 269:

256 APPENDIX E. FORMAL VERIFICATION

- Page 270 and 271:

258 APPENDIX E. FORMAL VERIFICATION

- Page 272 and 273:

260 APPENDIX E. FORMAL VERIFICATION

- Page 274 and 275:

262 APPENDIX E. FORMAL VERIFICATION

- Page 276 and 277:

264 APPENDIX E. FORMAL VERIFICATION

- Page 278 and 279:

266 APPENDIX E. FORMAL VERIFICATION

- Page 280 and 281:

268 APPENDIX E. FORMAL VERIFICATION

- Page 282 and 283:

270 APPENDIX E. FORMAL VERIFICATION

- Page 284 and 285:

272 APPENDIX F. ANSWERS TO QUICK QU

- Page 286 and 287:

274 APPENDIX F. ANSWERS TO QUICK QU

- Page 288 and 289:

276 APPENDIX F. ANSWERS TO QUICK QU

- Page 290 and 291:

278 APPENDIX F. ANSWERS TO QUICK QU

- Page 292 and 293:

280 APPENDIX F. ANSWERS TO QUICK QU

- Page 294 and 295:

282 APPENDIX F. ANSWERS TO QUICK QU

- Page 296 and 297:

284 APPENDIX F. ANSWERS TO QUICK QU

- Page 298 and 299:

286 APPENDIX F. ANSWERS TO QUICK QU

- Page 300 and 301:

288 APPENDIX F. ANSWERS TO QUICK QU

- Page 302 and 303:

290 APPENDIX F. ANSWERS TO QUICK QU

- Page 304 and 305:

292 APPENDIX F. ANSWERS TO QUICK QU

- Page 306 and 307:

294 APPENDIX F. ANSWERS TO QUICK QU

- Page 308 and 309:

296 APPENDIX F. ANSWERS TO QUICK QU

- Page 310 and 311:

298 APPENDIX F. ANSWERS TO QUICK QU

- Page 312 and 313:

300 APPENDIX F. ANSWERS TO QUICK QU

- Page 314 and 315:

302 APPENDIX F. ANSWERS TO QUICK QU

- Page 316 and 317:

304 APPENDIX F. ANSWERS TO QUICK QU

- Page 318 and 319:

306 APPENDIX F. ANSWERS TO QUICK QU

- Page 320 and 321:

308 APPENDIX F. ANSWERS TO QUICK QU

- Page 322 and 323:

310 APPENDIX F. ANSWERS TO QUICK QU

- Page 324 and 325:

312 APPENDIX F. ANSWERS TO QUICK QU

- Page 326 and 327:

314 APPENDIX F. ANSWERS TO QUICK QU

- Page 328 and 329:

316 APPENDIX F. ANSWERS TO QUICK QU

- Page 330 and 331:

318 APPENDIX F. ANSWERS TO QUICK QU

- Page 332 and 333:

320 APPENDIX F. ANSWERS TO QUICK QU

- Page 334 and 335:

322 APPENDIX F. ANSWERS TO QUICK QU

- Page 336 and 337:

324 APPENDIX F. ANSWERS TO QUICK QU

- Page 338 and 339:

326 APPENDIX F. ANSWERS TO QUICK QU

- Page 340 and 341:

328 APPENDIX F. ANSWERS TO QUICK QU

- Page 342 and 343:

330 APPENDIX G. GLOSSARY (2) A physi

- Page 344 and 345:

332 APPENDIX G. GLOSSARY near by. Th

- Page 346 and 347:

334 APPENDIX G. GLOSSARY

- Page 348 and 349:

336 BIBLIOGRAPHY [But97] USA, March 2

- Page 350 and 351:

338 BIBLIOGRAPHY [HMB06] [Hol03] [HP95

- Page 352 and 353:

340 BIBLIOGRAPHY [McK06] Paul E. McK

- Page 354 and 355:

342 BIBLIOGRAPHY tor. Software - Pra

- Page 356 and 357:

344 BIBLIOGRAPHY [UoC08] [VGS08] Berke

- Page 358:

346 APPENDIX H. CREDITS H.4 Original
