Bayesian Programming and Learning for Multi-Player Video Games ...


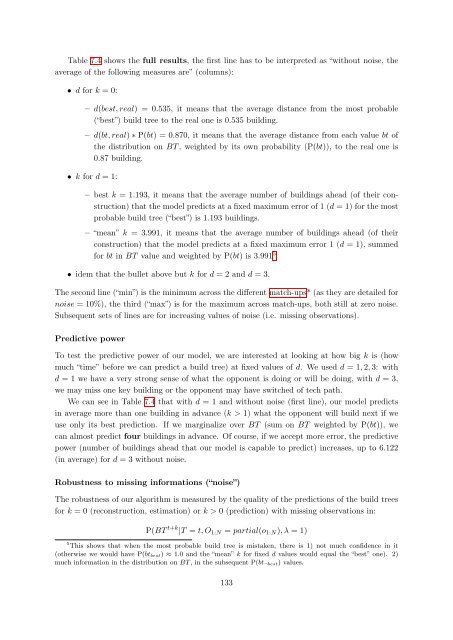

Table 7.4 shows the full results. The first line should be read as “without noise, the averages of the following measures are” (columns):

• d for k = 0:

– d(best, real) = 0.535: the average distance from the most probable (“best”) build tree to the real one is 0.535 buildings.

– d(bt, real) ∗ P(bt) = 0.870: the average distance from each value bt of the distribution on BT, weighted by its own probability P(bt), to the real one is 0.870 buildings.

• k for d = 1:

– best k = 1.193: the average number of buildings ahead (of their construction) that the model predicts, at a fixed maximum error of 1 (d = 1), for the most probable build tree (“best”) is 1.193 buildings.

– “mean” k = 3.991: the average number of buildings ahead (of their construction) that the model predicts, at a fixed maximum error of 1 (d = 1), summed over the values bt of BT and weighted by P(bt), is 3.991 buildings (see footnote 5).

• Same as the previous bullet, but k for d = 2 and d = 3.

The second line (“min”) is the minimum across the different match-ups* (as they are detailed for noise = 10%), and the third (“max”) is the maximum across match-ups, both still at zero noise. Subsequent sets of lines are for increasing values of noise (i.e. missing observations).
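As a rough illustration of the two k = 0 measures, the sketch below computes d(best, real) and the probability-weighted distance for a toy distribution over build trees. It rests on assumptions that are not stated in this section: build trees are represented as sets of buildings, the distance d is taken to be the number of buildings present in exactly one of the two trees, and the building names and probabilities are invented for the example.

```python
def tree_distance(bt_a, bt_b):
    """Assumed distance: number of buildings present in exactly one of the two trees."""
    return len(set(bt_a) ^ set(bt_b))


def best_and_weighted_distance(distribution, real):
    """Return (d(best, real), sum over bt of d(bt, real) * P(bt)).

    `distribution` maps build trees (frozensets) to probabilities that sum to 1.
    """
    best = max(distribution, key=distribution.get)  # most probable build tree
    d_best = tree_distance(best, real)
    d_weighted = sum(p * tree_distance(bt, real) for bt, p in distribution.items())
    return d_best, d_weighted


# Toy data (hypothetical building names and probabilities).
real = frozenset({"b1", "b2", "b3"})
distribution = {
    frozenset({"b1", "b2", "b3"}): 0.6,  # exact match, distance 0
    frozenset({"b1", "b2", "b4"}): 0.3,  # b3 missing, b4 extra, distance 2
    frozenset({"b1", "b2"}): 0.1,        # b3 missing, distance 1
}
d_best, d_weighted = best_and_weighted_distance(distribution, real)
print(d_best, round(d_weighted, 3))
```

In this toy case the best build tree is exactly right while the weighted distance is not zero, because the weighted measure also charges for the probability mass placed on the wrong trees.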

Predictive power<br />

To test the predictive power of our model, we look at how large k is (how far ahead in “time” we can predict a build tree) at fixed values of d. We used d = 1, 2, 3: with d = 1 we have a very strong sense of what the opponent is doing or will be doing; with d = 3, we may miss one key building, or the opponent may have switched tech paths.

We can see in Table 7.4 that with d = 1 and without noise (first line), our model predicts on average more than one building in advance (k > 1) what the opponent will build next if we use only its best prediction. If we marginalize over BT (sum on BT weighted by P(bt)), we can predict almost four buildings in advance. Of course, if we accept more error, the predictive power (the number of buildings ahead that our model is able to predict) increases, up to 6.122 (on average) for d = 3 without noise.
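One way to read the “best” k and “mean” k measures is sketched below. This is an interpretation under assumptions, not the evaluation code behind Table 7.4: the real game is modelled as an ordered list of buildings, the distance is again the number of buildings present in exactly one tree, k is taken as the largest lookahead at which a predicted tree stays within the allowed error d, and k is set to 0 when no lookahead qualifies. All names and numbers are hypothetical.

```python
def tree_distance(bt_a, bt_b):
    """Assumed distance: number of buildings present in exactly one of the two trees."""
    return len(set(bt_a) ^ set(bt_b))


def lookahead_k(predicted, real_order, t, d_max):
    """Largest k such that `predicted` is within d_max of the real tree after t + k buildings.

    `real_order` lists the real buildings in construction order; returns 0 if no k qualifies.
    """
    best_k = 0
    for k in range(len(real_order) - t + 1):
        real_tree = set(real_order[: t + k])
        if tree_distance(predicted, real_tree) <= d_max:
            best_k = k
    return best_k


def best_and_mean_k(distribution, real_order, t, d_max):
    """Return (k of the most probable tree, sum over bt of P(bt) * k(bt))."""
    best = max(distribution, key=distribution.get)
    k_best = lookahead_k(best, real_order, t, d_max)
    k_mean = sum(p * lookahead_k(bt, real_order, t, d_max)
                 for bt, p in distribution.items())
    return k_best, k_mean


# Toy game: two buildings seen so far (t = 2), error budget d = 1.
real_order = ["b1", "b2", "b3", "b4", "b5"]
distribution = {
    frozenset({"b1", "b2", "b3"}): 0.6,              # stays within d = 1 up to k = 2
    frozenset({"b1", "b2", "b3", "b4", "b5"}): 0.4,  # stays within d = 1 up to k = 3
}
k_best, k_mean = best_and_mean_k(distribution, real_order, t=2, d_max=1)
print(k_best, round(k_mean, 3))
```

In this toy example the weighted value exceeds the value for the single most probable tree, which is the same qualitative pattern as the gap between 1.193 and 3.991 reported above.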

Robustness to missing information (“noise”)

The robustness of our algorithm is measured by the quality of the predictions of the build trees for k = 0 (reconstruction, estimation) or k > 0 (prediction) with missing observations in:

$$P(BT^{t+k} \mid T = t,\ O_{1:N} = \mathrm{partial}(o_{1:N}),\ \lambda = 1)$$
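The partial(·) operator is not defined in this section. A minimal sketch of one plausible reading, in which each observation is dropped independently with probability equal to the noise level, could look like the following. The independent-drop scheme, the function signature and the observation names are assumptions made for illustration; the actual experiments may instead remove an exact fraction of the observations.

```python
import random


def partial(observations, noise, rng):
    """Keep each observation with probability 1 - noise (assumed noise model)."""
    return [o for o in observations if rng.random() >= noise]


# Hypothetical observations o_1..o_N, here the buildings that were scouted.
observations = ["b1", "b2", "b3", "b4", "b5", "b6", "b7", "b8", "b9", "b10"]
rng = random.Random(0)  # fixed seed so that runs are reproducible

for noise in (0.0, 0.1, 0.5):
    seen = partial(observations, noise, rng)
    print(noise, len(seen), "of", len(observations), "observations kept")
```

With noise = 0 every observation is kept, which corresponds to the “without noise” lines of the table; larger values leave the model with fewer observations to infer the build tree from.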

5 This shows that when the most probable build tree is mistaken, there is 1) not much confidence in it (otherwise we would have P(bt_best) ≈ 1.0 and the “mean” k for fixed d values would equal the “best” one), and 2) much information in the distribution on BT, in the subsequent P(bt_¬best) values.
