Bayesian Programming and Learning for Multi-Player Video Games ...

Bayesian Programming and Learning for Multi-Player Video Games ...

Bayesian Programming and Learning for Multi-Player Video Games ...

Create successful ePaper yourself

Turn your PDF publications into a flip-book with our unique Google optimized e-Paper software.

μ<br />

σ<br />

K'<br />

K'<br />

EC t<br />

EU<br />

Q<br />

Army mixture<br />

(GMM)<br />

What we infer<br />

V'<br />

ETT<br />

What we see What we want to know<br />

EU<br />

What we want<br />

to do (tactics)<br />

U<br />

K'<br />

K' K K<br />

V<br />

EC t<br />

K<br />

EC t+1 C c<br />

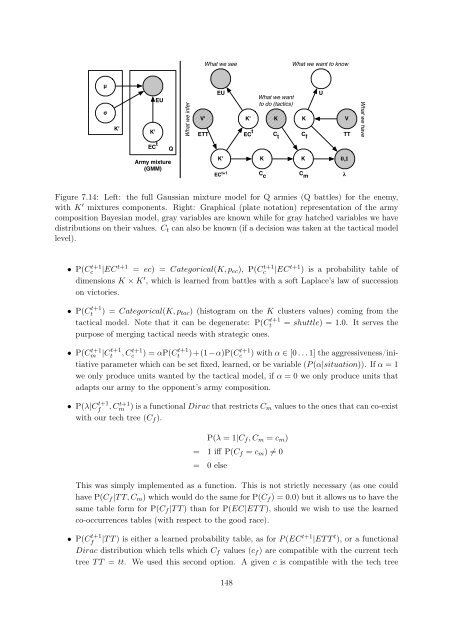

Figure 7.14: Left: the full Gaussian mixture model <strong>for</strong> Q armies (Q battles) <strong>for</strong> the enemy,<br />

with K ′ mixtures components. Right: Graphical (plate notation) representation of the army<br />

composition <strong>Bayesian</strong> model, gray variables are known while <strong>for</strong> gray hatched variables we have<br />

distributions on their values. Ct can also be known (if a decision was taken at the tactical model<br />

level).<br />

• P(C t+1<br />

c |EC t+1 = ec) = Categorical(K, pec), P(C t+1<br />

c |EC t+1 ) is a probability table of<br />

dimensions K × K ′ , which is learned from battles with a soft Laplace’s law of succession<br />

on victories.<br />

• P(C t+1<br />

t ) = Categorical(K, ptac) (histogram on the K clusters values) coming from the<br />

tactical model. Note that it can be degenerate: P(C t+1<br />

t = shuttle) = 1.0. It serves the<br />

purpose of merging tactical needs with strategic ones.<br />

• P(C t+1<br />

m |C t+1<br />

t<br />

, C t+1<br />

c ) = αP(C t+1<br />

t )+(1−α)P(Ct+1 c ) with α ∈ [0 . . . 1] the aggressiveness/ini-<br />

tiative parameter which can be set fixed, learned, or be variable (P (α|situation)). If α = 1<br />

we only produce units wanted by the tactical model, if α = 0 we only produce units that<br />

adapts our army to the opponent’s army composition.<br />

• P(λ|C t+1<br />

f , C t+1<br />

m ) is a functional Dirac that restricts Cm values to the ones that can co-exist<br />

with our tech tree (Cf ).<br />

C t<br />

P(λ = 1|Cf , Cm = cm)<br />

= 1 iff P(Cf = cm) �= 0<br />

= 0 else<br />

This was simply implemented as a function. This is not strictly necessary (as one could<br />

have P(Cf |T T, Cm) which would do the same <strong>for</strong> P(Cf ) = 0.0) but it allows us to have the<br />

same table <strong>for</strong>m <strong>for</strong> P(Cf |T T ) than <strong>for</strong> P(EC|ET T ), should we wish to use the learned<br />

co-occurrences tables (with respect to the good race).<br />

• P(C t+1<br />

f |T T ) is either a learned probability table, as <strong>for</strong> P (EC t+1 |ET T t ), or a functional<br />

Dirac distribution which tells which Cf values (cf ) are compatible with the current tech<br />

tree T T = tt. We used this second option. A given c is compatible with the tech tree<br />

148<br />

C f<br />

K<br />

C m<br />

TT<br />

0,1<br />

λ<br />

What we have