Bayesian Programming and Learning for Multi-Player Video Games ...

Bayesian Programming and Learning for Multi-Player Video Games ...

Bayesian Programming and Learning for Multi-Player Video Games ...

Create successful ePaper yourself

Turn your PDF publications into a flip-book with our unique Google optimized e-Paper software.

This model can be used to specify the behavior of units in RTS games. Instead of relying<br />

on a “units push each other” physics model <strong>for</strong> h<strong>and</strong>ling dynamic collision of units, this model<br />

makes the units react themselves to collision in a more realistic fashion (a marine cannot push a<br />

tank, the tank will move). More than adding realism to the game, this is a necessary condition<br />

<strong>for</strong> efficient micro-management in StarCraft: Brood War, as we do not have control over the<br />

game physics engine <strong>and</strong> it does not have this “flow-like” physics <strong>for</strong> units positioning.<br />

5.6 Discussion<br />

5.6.1 Perspectives<br />

Adding a sensory input: height attraction<br />

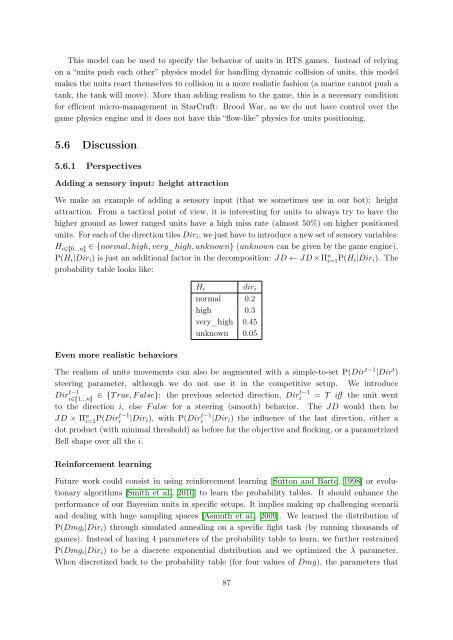

We make an example of adding a sensory input (that we sometimes use in our bot): height<br />

attraction. From a tactical point of view, it is interesting <strong>for</strong> units to always try to have the<br />

higher ground as lower ranged units have a high miss rate (almost 50%) on higher positioned<br />

units. For each of the direction tiles Diri, we just have to introduce a new set of sensory variables:<br />

H i∈�0...n� ∈ {normal, high, very_high, unknown} (unknown can be given by the game engine).<br />

P(Hi|Diri) is just an additional factor in the decomposition: JD ← JD × Π n i=1 P(Hi|Diri). The<br />

probability table looks like:<br />

Even more realistic behaviors<br />

Hi diri<br />

normal 0.2<br />

high 0.3<br />

very_high 0.45<br />

unknown 0.05<br />

The realism of units movements can also be augmented with a simple-to-set P(Dir t−1 |Dir t )<br />

steering parameter, although we do not use it in the competitive setup. We introduce<br />

Dir t−1<br />

i∈�1...n� ∈ {T rue, F alse}: the previous selected direction, Dirt−1<br />

i = T iff the unit went<br />

to the direction i, else F alse <strong>for</strong> a steering (smooth) behavior. The JD would then be<br />

JD × Π n i=1 P(Dirt−1<br />

i |Diri), with P(Dir t−1<br />

i |Diri) the influence of the last direction, either a<br />

dot product (with minimal threshold) as be<strong>for</strong>e <strong>for</strong> the objective <strong>and</strong> flocking, or a parametrized<br />

Bell shape over all the i.<br />

Rein<strong>for</strong>cement learning<br />

Future work could consist in using rein<strong>for</strong>cement learning [Sutton <strong>and</strong> Barto, 1998] or evolutionary<br />

algorithms [Smith et al., 2010] to learn the probability tables. It should enhance the<br />

per<strong>for</strong>mance of our <strong>Bayesian</strong> units in specific setups. It implies making up challenging scenarii<br />

<strong>and</strong> dealing with huge sampling spaces [Asmuth et al., 2009]. We learned the distribution of<br />

P(Dmgi|Diri) through simulated annealing on a specific fight task (by running thous<strong>and</strong>s of<br />

games). Instead of having 4 parameters of the probability table to learn, we further restrained<br />

P(Dmgi|Diri) to be a discrete exponential distribution <strong>and</strong> we optimized the λ parameter.<br />

When discretized back to the probability table (<strong>for</strong> four values of Dmg), the parameters that<br />

87