Dell Power Solutions


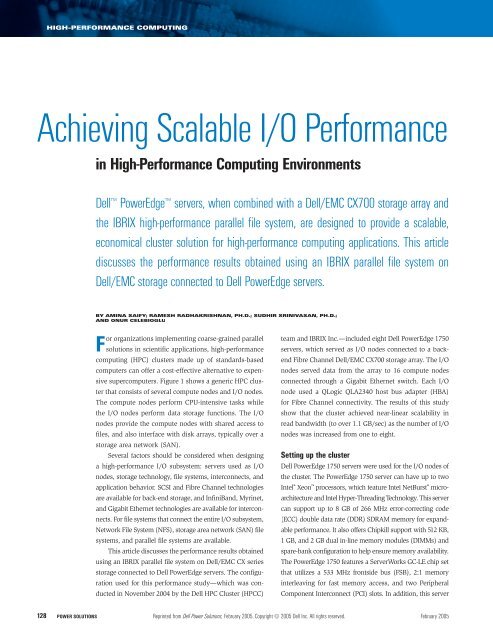

HIGH-PERFORMANCE COMPUTING

Achieving Scalable I/O Performance in High-Performance Computing Environments

Dell PowerEdge servers, when combined with a Dell/EMC CX700 storage array and the IBRIX high-performance parallel file system, are designed to provide a scalable, economical cluster solution for high-performance computing applications. This article discusses the performance results obtained using an IBRIX parallel file system on Dell/EMC storage connected to Dell PowerEdge servers.

BY AMINA SAIFY; RAMESH RADHAKRISHNAN, PH.D.; SUDHIR SRINIVASAN, PH.D.; AND ONUR CELEBIOGLU

For organizations implementing coarse-grained parallel solutions in scientific applications, high-performance computing (HPC) clusters made up of standards-based computers can offer a cost-effective alternative to expensive supercomputers. Figure 1 shows a generic HPC cluster that consists of several compute nodes and I/O nodes. The compute nodes perform CPU-intensive tasks while the I/O nodes perform data storage functions. The I/O nodes provide the compute nodes with shared access to files, and also interface with disk arrays, typically over a storage area network (SAN).

Several factors should be considered when designing a high-performance I/O subsystem: servers used as I/O nodes, storage technology, file systems, interconnects, and application behavior. SCSI and Fibre Channel technologies are available for back-end storage, and InfiniBand, Myrinet, and Gigabit Ethernet technologies are available for interconnects. For file systems that connect the entire I/O subsystem, Network File System (NFS), storage area network (SAN) file systems, and parallel file systems are available.

This article discusses the performance results obtained using an IBRIX parallel file system on Dell/EMC CX series storage connected to Dell PowerEdge servers.
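Scalability of an I/O subsystem is commonly quantified as scaling efficiency: the aggregate bandwidth measured with N I/O nodes divided by N times the single-node bandwidth. The following is a minimal sketch of that calculation, not the method used in this study. The function name `scaling_efficiency` and the sample figures are hypothetical placeholders, not measurements from the article.

```python
def scaling_efficiency(bandwidth_by_nodes):
    """Map each I/O node count to its fraction of ideal linear scaling.

    bandwidth_by_nodes: dict of {node_count: aggregate bandwidth}, in any
    consistent unit. The single-node entry (key 1) is the baseline.
    """
    if 1 not in bandwidth_by_nodes:
        raise ValueError("a single-node baseline (key 1) is required")
    base = bandwidth_by_nodes[1]
    # Ideal linear scaling with n nodes would deliver n * base.
    return {n: bw / (n * base) for n, bw in sorted(bandwidth_by_nodes.items())}


# Hypothetical aggregate read bandwidth in MB/sec, keyed by I/O node count.
sample = {1: 100.0, 2: 195.0, 4: 380.0, 8: 740.0}

if __name__ == "__main__":
    for nodes, eff in scaling_efficiency(sample).items():
        print(f"{nodes} I/O node(s): {eff:.1%} of linear")
```

An efficiency close to 1.0 at every node count corresponds to near-linear scaling. A value that falls off as nodes are added usually points to a shared bottleneck such as the interconnect or the storage array.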
The configuration used for this performance study, which was conducted in November 2004 by the Dell HPC Cluster (HPCC) team and IBRIX Inc., included eight Dell PowerEdge 1750 servers, which served as I/O nodes connected to a back-end Fibre Channel Dell/EMC CX700 storage array. The I/O nodes served data from the array to 16 compute nodes connected through a Gigabit Ethernet switch. Each I/O node used a QLogic QLA2340 host bus adapter (HBA) for Fibre Channel connectivity. The results of this study show that the cluster achieved near-linear scalability in read bandwidth (to over 1.1 GB/sec) as the number of I/O nodes was increased from one to eight.

Setting up the cluster

Dell PowerEdge 1750 servers were used for the I/O nodes of the cluster. The PowerEdge 1750 server can have up to two Intel® Xeon processors, which feature Intel NetBurst® microarchitecture and Intel Hyper-Threading Technology. This server can support up to 8 GB of 266 MHz error-correcting code (ECC) double data rate (DDR) SDRAM memory for expandable performance. It also offers Chipkill support with 512 MB, 1 GB, and 2 GB dual in-line memory modules (DIMMs) and spare-bank configuration to help ensure memory availability. The PowerEdge 1750 features a ServerWorks GC-LE chip set that utilizes a 533 MHz frontside bus (FSB), 2:1 memory interleaving for fast memory access, and two Peripheral Component Interconnect (PCI) slots. In addition, this server

Reprinted from Dell Power Solutions, February 2005. Copyright © 2005 Dell Inc. All rights reserved.