In Search of Evidence


Chapter 6

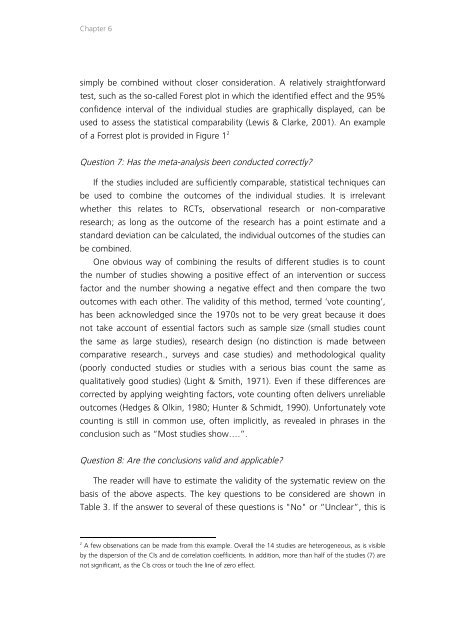

simply be combined without closer consideration. A relatively straightforward test, such as the so-called forest plot, in which the identified effect and the 95% confidence interval of each individual study are displayed graphically, can be used to assess statistical comparability (Lewis & Clarke, 2001). An example of a forest plot is provided in Figure 1.²

Question 7: Has the meta-analysis been conducted correctly?

If the included studies are sufficiently comparable, statistical techniques can be used to combine the outcomes of the individual studies. It is irrelevant whether these are RCTs, observational research or non-comparative research; as long as the outcome of the research has a point estimate and a standard deviation can be calculated, the individual outcomes of the studies can be combined.
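One common way of combining point estimates can be sketched as follows. This is a minimal illustration, not the procedure of any particular review: it assumes a fixed-effect model with inverse-variance weights, and it assumes that each study supplies a standard error for its estimate. The function name `pool_fixed_effect` and the example numbers are hypothetical.

```python
import math


def pool_fixed_effect(estimates, std_errors, z_crit=1.96):
    """Pool study estimates with inverse-variance weights (fixed-effect model).

    Assumption: each study reports a point estimate and the standard
    error of that estimate. Returns (pooled estimate, pooled standard
    error, (lower, upper) bounds of the approximate 95% CI).
    """
    if not estimates or len(estimates) != len(std_errors):
        raise ValueError("need one standard error per estimate")
    if any(se <= 0 for se in std_errors):
        raise ValueError("standard errors must be positive")

    # More precise studies (smaller standard error) receive more weight.
    weights = [1.0 / se ** 2 for se in std_errors]
    total_weight = sum(weights)

    pooled = sum(w * e for w, e in zip(weights, estimates)) / total_weight
    pooled_se = math.sqrt(1.0 / total_weight)
    ci = (pooled - z_crit * pooled_se, pooled + z_crit * pooled_se)
    return pooled, pooled_se, ci


# Hypothetical data: two equally precise studies.
pooled, pooled_se, ci = pool_fixed_effect([0.2, 0.4], [0.1, 0.1])
print(pooled, pooled_se, ci)
```

Because the two hypothetical studies are equally precise, the pooled estimate lies midway between them, and its standard error is smaller than that of either study alone.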

One obvious way of combining the results of different studies is to count the number of studies showing a positive effect of an intervention or success factor and the number showing a negative effect, and then compare the two counts. The validity of this method, termed ‘vote counting’, has been recognized since the 1970s as limited, because it does not take account of essential factors such as sample size (small studies count the same as large studies), research design (no distinction is made between comparative research, surveys and case studies) and methodological quality (poorly conducted studies or studies with a serious bias count the same as high-quality studies) (Light & Smith, 1971). Even if these differences are corrected by applying weighting factors, vote counting often delivers unreliable outcomes (Hedges & Olkin, 1980; Hunter & Schmidt, 1990). Unfortunately, vote counting is still in common use, often implicitly, as revealed by phrases in the conclusion such as “Most studies show…”.
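The sample-size problem can be made concrete with a small sketch. The data below are invented purely for illustration, and weighting effect sizes by sample size is a deliberate simplification rather than a recommended meta-analytic method; the function names `vote_count` and `sample_size_weighted_mean` are hypothetical.

```python
def vote_count(effects):
    """Count studies with a positive and with a negative effect."""
    positive = sum(1 for e in effects if e > 0)
    negative = sum(1 for e in effects if e < 0)
    return positive, negative


def sample_size_weighted_mean(effects, sample_sizes):
    """Average the effects, weighting each study by its sample size."""
    if len(effects) != len(sample_sizes) or not effects:
        raise ValueError("need one sample size per effect")
    total_n = sum(sample_sizes)
    return sum(e * n for e, n in zip(effects, sample_sizes)) / total_n


# Invented example: three small positive studies, one large negative study.
effects = [0.30, 0.25, 0.20, -0.10]
sizes = [20, 25, 30, 2000]

print(vote_count(effects))                        # counts of positive / negative studies
print(sample_size_weighted_mean(effects, sizes))  # dominated by the large study
```

In this invented example the vote count favours a positive effect by three studies to one, whereas the weighted average is negative because the single large study carries most of the information.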

Question 8: Are the conclusions valid and applicable?

The reader will have to estimate the validity of the systematic review on the basis of the above aspects. The key questions to be considered are shown in Table 3. If the answer to several of these questions is “No” or “Unclear”, this is

² A few observations can be made from this example. Overall, the 14 studies are heterogeneous, as is visible from the dispersion of the CIs and the correlation coefficients. In addition, more than half of the studies (7) are not significant, as the CIs cross or touch the line of zero effect.
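The check described in this note, whether a study's confidence interval crosses or touches the line of zero effect, can be sketched for a correlation coefficient. The text does not state how the intervals in Figure 1 were computed; the Fisher z-transformation used here is an assumption, and the function names and example values are hypothetical.

```python
import math


def correlation_ci(r, n, z_crit=1.96):
    """Approximate 95% CI for a correlation r from a sample of size n.

    Assumption: the interval is built with the Fisher z-transformation,
    whose standard error is 1 / sqrt(n - 3).
    """
    if not -1.0 < r < 1.0:
        raise ValueError("r must lie strictly between -1 and 1")
    if n <= 3:
        raise ValueError("n must be greater than 3")
    z = math.atanh(r)
    se = 1.0 / math.sqrt(n - 3)
    return math.tanh(z - z_crit * se), math.tanh(z + z_crit * se)


def crosses_or_touches_zero(lower, upper):
    """True if the interval includes zero, i.e. the study is not significant."""
    return lower <= 0.0 <= upper


# Hypothetical studies given as (correlation, sample size).
for r, n in [(0.50, 103), (0.10, 28)]:
    lower, upper = correlation_ci(r, n)
    print(r, n, round(lower, 3), round(upper, 3),
          crosses_or_touches_zero(lower, upper))
```

A wide interval that includes zero, as for the smaller hypothetical study, corresponds to a line in the forest plot that crosses the vertical line of zero effect.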