Statistical Language Models based on Neural Networks - Faculty of ...

You also want an ePaper? Increase the reach of your titles

YUMPU automatically turns print PDFs into web optimized ePapers that Google loves.

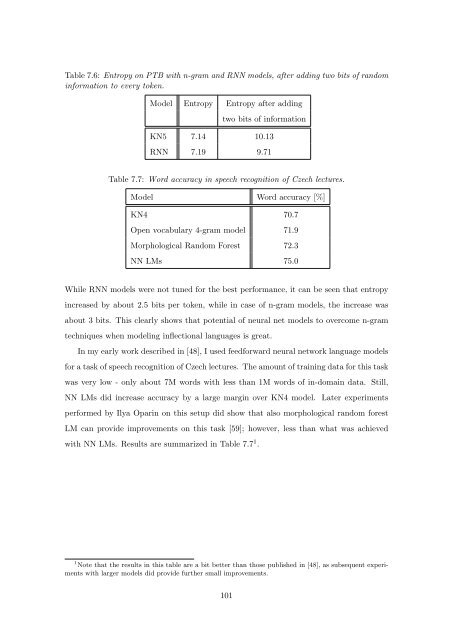

Table 7.6: Entropy on PTB with n-gram and RNN models, after adding two bits of random information to every token.

| Model | Entropy | Entropy after adding two bits of information |
|-------|---------|----------------------------------------------|
| KN5   | 7.14    | 10.13                                        |
| RNN   | 7.19    | 9.71                                         |

Table 7.7: Word accuracy in speech recognition of Czech lectures.

| Model                        | Word accuracy [%] |
|------------------------------|-------------------|
| KN4                          | 70.7              |
| Open vocabulary 4-gram model | 71.9              |
| Morphological Random Forest  | 72.3              |
| NN LMs                       | 75.0              |

Although the RNN models were not tuned for the best performance, their entropy increased by only about 2.5 bits per token, whereas for the n-gram models the increase was about 3 bits. This shows that neural net models have great potential to outperform n-gram techniques when modeling inflectional languages.
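The "about 2.5 bits" and "about 3 bits" figures can be checked directly against Table 7.6. The following is a minimal sketch of that arithmetic; the dictionary layout and the helper name `entropy_increase` are illustrative assumptions, and only the four entropy values come from the table.

```python
# Entropy values in bits per token, copied from Table 7.6.
# The structure and key names are illustrative, not from the original work.
entropy = {
    "KN5": {"baseline": 7.14, "with_random_bits": 10.13},
    "RNN": {"baseline": 7.19, "with_random_bits": 9.71},
}


def entropy_increase(model: str) -> float:
    """Return the rise in entropy after adding two random bits per token."""
    values = entropy[model]
    return round(values["with_random_bits"] - values["baseline"], 2)


for name in entropy:
    print(f"{name}: +{entropy_increase(name):.2f} bits per token")
```

Under these numbers, the n-gram model's entropy rises by roughly half a bit more than the RNN's.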

In my early work described in [48], I used feedforward neural network language models for the task of speech recognition of Czech lectures. The amount of training data for this task was very small: only about 7M words, with less than 1M words of in-domain data. Still, NN LMs increased accuracy by a large margin over the KN4 model (75.0% versus 70.7% word accuracy). Later experiments performed by Ilya Oparin on this setup showed that a morphological random forest LM can also provide improvements on this task [59], although smaller than those achieved with NN LMs. Results are summarized in Table 7.7.¹

¹ Note that the results in this table are slightly better than those published in [48], as subsequent experiments with larger models provided further small improvements.
