Statistical Language Models based on Neural Networks - Faculty of ...

Statistical Language Models based on Neural Networks - Faculty of ...

Statistical Language Models based on Neural Networks - Faculty of ...

Create successful ePaper yourself

Turn your PDF publications into a flip-book with our unique Google optimized e-Paper software.

group that we are interested in.<br />

w(t)<br />

s(t-1)<br />

U<br />

s(t)<br />

V<br />

W X<br />

y(t)<br />

c(t)<br />

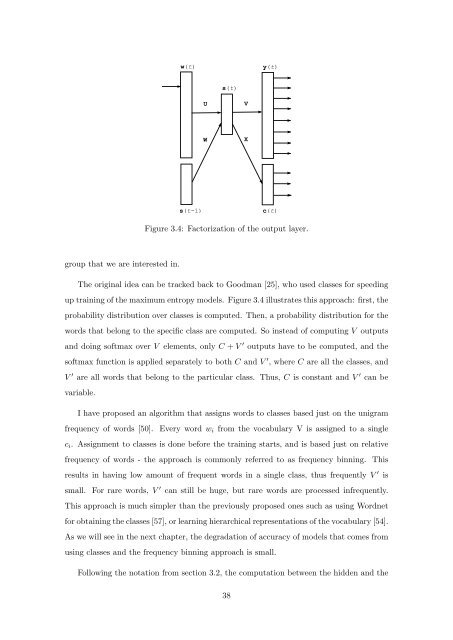

Figure 3.4: Factorizati<strong>on</strong> <strong>of</strong> the output layer.<br />

The original idea can be tracked back to Goodman [25], who used classes for speeding<br />

up training <strong>of</strong> the maximum entropy models. Figure 3.4 illustrates this approach: first, the<br />

probability distributi<strong>on</strong> over classes is computed. Then, a probability distributi<strong>on</strong> for the<br />

words that bel<strong>on</strong>g to the specific class are computed. So instead <strong>of</strong> computing V outputs<br />

and doing s<strong>of</strong>tmax over V elements, <strong>on</strong>ly C + V ′ outputs have to be computed, and the<br />

s<strong>of</strong>tmax functi<strong>on</strong> is applied separately to both C and V ′ , where C are all the classes, and<br />

V ′ are all words that bel<strong>on</strong>g to the particular class. Thus, C is c<strong>on</strong>stant and V ′ can be<br />

variable.<br />

I have proposed an algorithm that assigns words to classes <str<strong>on</strong>g>based</str<strong>on</strong>g> just <strong>on</strong> the unigram<br />

frequency <strong>of</strong> words [50]. Every word wi from the vocabulary V is assigned to a single<br />

ci. Assignment to classes is d<strong>on</strong>e before the training starts, and is <str<strong>on</strong>g>based</str<strong>on</strong>g> just <strong>on</strong> relative<br />

frequency <strong>of</strong> words - the approach is comm<strong>on</strong>ly referred to as frequency binning. This<br />

results in having low amount <strong>of</strong> frequent words in a single class, thus frequently V ′ is<br />

small. For rare words, V ′ can still be huge, but rare words are processed infrequently.<br />

This approach is much simpler than the previously proposed <strong>on</strong>es such as using Wordnet<br />

for obtaining the classes [57], or learning hierarchical representati<strong>on</strong>s <strong>of</strong> the vocabulary [54].<br />

As we will see in the next chapter, the degradati<strong>on</strong> <strong>of</strong> accuracy <strong>of</strong> models that comes from<br />

using classes and the frequency binning approach is small.<br />

Following the notati<strong>on</strong> from secti<strong>on</strong> 3.2, the computati<strong>on</strong> between the hidden and the<br />

38