Statistical Language Models based on Neural Networks - Faculty of ...

Statistical Language Models based on Neural Networks - Faculty of ...

Statistical Language Models based on Neural Networks - Faculty of ...

You also want an ePaper? Increase the reach of your titles

YUMPU automatically turns print PDFs into web optimized ePapers that Google loves.

w(t-2)<br />

s(t-3)<br />

U<br />

W<br />

w(t-1)<br />

s(t-2)<br />

U<br />

W<br />

w(t)<br />

s(t-1)<br />

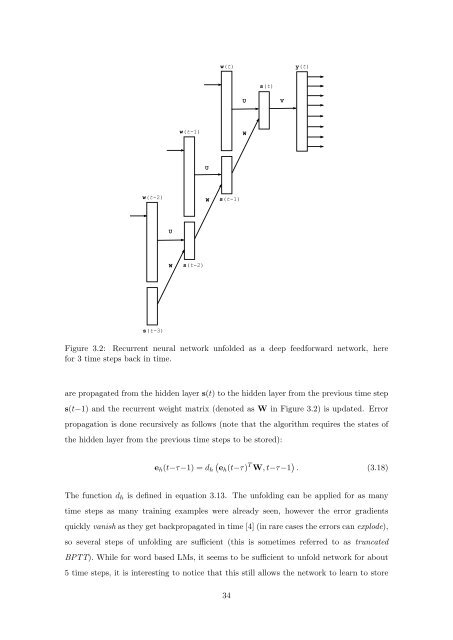

Figure 3.2: Recurrent neural network unfolded as a deep feedforward network, here<br />

for 3 time steps back in time.<br />

are propagated from the hidden layer s(t) to the hidden layer from the previous time step<br />

s(t−1) and the recurrent weight matrix (denoted as W in Figure 3.2) is updated. Error<br />

propagati<strong>on</strong> is d<strong>on</strong>e recursively as follows (note that the algorithm requires the states <strong>of</strong><br />

U<br />

W<br />

s(t)<br />

the hidden layer from the previous time steps to be stored):<br />

V<br />

y(t)<br />

<br />

eh(t−τ−1) = dh eh(t−τ) T W, t−τ−1 . (3.18)<br />

The functi<strong>on</strong> dh is defined in equati<strong>on</strong> 3.13. The unfolding can be applied for as many<br />

time steps as many training examples were already seen, however the error gradients<br />

quickly vanish as they get backpropagated in time [4] (in rare cases the errors can explode),<br />

so several steps <strong>of</strong> unfolding are sufficient (this is sometimes referred to as truncated<br />

BPTT). While for word <str<strong>on</strong>g>based</str<strong>on</strong>g> LMs, it seems to be sufficient to unfold network for about<br />

5 time steps, it is interesting to notice that this still allows the network to learn to store<br />

34