Statistical Language Models based on Neural Networks - Faculty of ...

Statistical Language Models based on Neural Networks - Faculty of ...

Statistical Language Models based on Neural Networks - Faculty of ...

Create successful ePaper yourself

Turn your PDF publications into a flip-book with our unique Google optimized e-Paper software.

1<br />

a<br />

a<br />

a<br />

1<br />

2<br />

3<br />

P(w(t)|*)<br />

ONE<br />

TWO<br />

THREE<br />

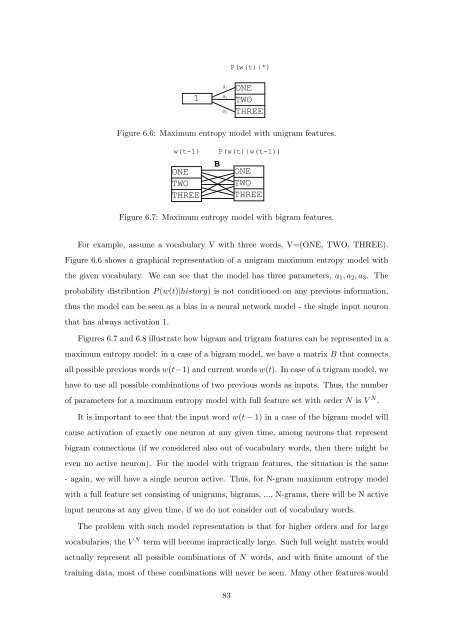

Figure 6.6: Maximum entropy model with unigram features.<br />

w(t-1) P(w(t)|w(t-1))<br />

ONE<br />

TWO<br />

THREE<br />

B<br />

ONE<br />

TWO<br />

THREE<br />

Figure 6.7: Maximum entropy model with bigram features.<br />

For example, assume a vocabulary V with three words, V=(ONE, TWO, THREE).<br />

Figure 6.6 shows a graphical representati<strong>on</strong> <strong>of</strong> a unigram maximum entropy model with<br />

the given vocabulary. We can see that the model has three parameters, a1, a2, a3. The<br />

probability distributi<strong>on</strong> P (w(t)|history) is not c<strong>on</strong>diti<strong>on</strong>ed <strong>on</strong> any previous informati<strong>on</strong>,<br />

thus the model can be seen as a bias in a neural network model - the single input neur<strong>on</strong><br />

that has always activati<strong>on</strong> 1.<br />

Figures 6.7 and 6.8 illustrate how bigram and trigram features can be represented in a<br />

maximum entropy model: in a case <strong>of</strong> a bigram model, we have a matrix B that c<strong>on</strong>nects<br />

all possible previous words w(t−1) and current words w(t). In case <strong>of</strong> a trigram model, we<br />

have to use all possible combinati<strong>on</strong>s <strong>of</strong> two previous words as inputs. Thus, the number<br />

<strong>of</strong> parameters for a maximum entropy model with full feature set with order N is V N .<br />

It is important to see that the input word w(t − 1) in a case <strong>of</strong> the bigram model will<br />

cause activati<strong>on</strong> <strong>of</strong> exactly <strong>on</strong>e neur<strong>on</strong> at any given time, am<strong>on</strong>g neur<strong>on</strong>s that represent<br />

bigram c<strong>on</strong>necti<strong>on</strong>s (if we c<strong>on</strong>sidered also out <strong>of</strong> vocabulary words, then there might be<br />

even no active neur<strong>on</strong>). For the model with trigram features, the situati<strong>on</strong> is the same<br />

- again, we will have a single neur<strong>on</strong> active. Thus, for N-gram maximum entropy model<br />

with a full feature set c<strong>on</strong>sisting <strong>of</strong> unigrams, bigrams, ..., N-grams, there will be N active<br />

input neur<strong>on</strong>s at any given time, if we do not c<strong>on</strong>sider out <strong>of</strong> vocabulary words.<br />

The problem with such model representati<strong>on</strong> is that for higher orders and for large<br />

vocabularies, the V N term will become impractically large. Such full weight matrix would<br />

actually represent all possible combinati<strong>on</strong>s <strong>of</strong> N words, and with finite amount <strong>of</strong> the<br />

training data, most <strong>of</strong> these combinati<strong>on</strong>s will never be seen. Many other features would<br />

83