Onto.PT: Towards the Automatic Construction of a Lexical Ontology ...

Onto.PT: Towards the Automatic Construction of a Lexical Ontology ...

Onto.PT: Towards the Automatic Construction of a Lexical Ontology ...

You also want an ePaper? Increase the reach of your titles

YUMPU automatically turns print PDFs into web optimized ePapers that Google loves.

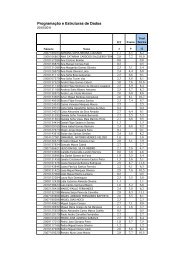

Chapter 6. Thesaurus Enrichment

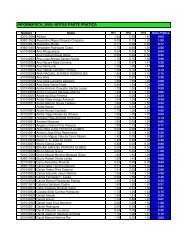

| Measure | Mode | σ | Sets/Pair | P | R | RR | F1 | RF1 | F0.5 | RF0.5 |
|---|---|---|---|---|---|---|---|---|---|---|
| All | – | – | 4.31 | 0.37 | 1.00 | 1.00 | 0.54 | 0.54 | 0.42 | 0.42 |
| Jaccard | Best | 0.00 | 1.07 | 0.63 | 0.49 | 0.81 | 0.55 | 0.71 | 0.59 | 0.66 |
| Jaccard | Best | 0.15 | 0.52 | 0.72 | 0.25 | 0.45 | 0.37 | 0.56 | 0.53 | 0.65 |
| Jaccard | All | 0.05 | 3.14 | 0.45 | 0.86 | 0.96 | 0.59 | 0.61 | 0.49 | 0.50 |
| Jaccard | All | 0.10 | 1.52 | 0.59 | 0.52 | 0.69 | 0.55 | 0.63 | 0.57 | 0.61 |
| Jaccard | All | 0.15 | 0.77 | 0.70 | 0.33 | 0.48 | 0.45 | 0.57 | 0.57 | 0.64 |
| Jaccard | All | 0.20 | 0.41 | 0.78 | 0.20 | 0.32 | 0.32 | 0.46 | 0.50 | 0.61 |
| Overlap | Best | 0.00 | 1.06 | 0.63 | 0.48 | 0.81 | 0.55 | 0.71 | 0.60 | 0.66 |
| Overlap | Best | 0.15 | 1.04 | 0.64 | 0.47 | 0.80 | 0.54 | 0.71 | 0.60 | 0.67 |
| Overlap | All | 0.10 | 3.98 | 0.39 | 0.96 | 0.98 | 0.55 | 0.56 | 0.44 | 0.44 |
| Overlap | All | 0.40 | 1.28 | 0.63 | 0.44 | 0.61 | 0.52 | 0.62 | 0.58 | 0.63 |
| Overlap | All | 0.45 | 1.05 | 0.67 | 0.37 | 0.53 | 0.47 | 0.59 | 0.57 | 0.63 |
| Overlap | All | 0.50 | 0.88 | 0.70 | 0.32 | 0.48 | 0.44 | 0.57 | 0.57 | 0.64 |
| Overlap | All | 0.55 | 0.65 | 0.74 | 0.25 | 0.37 | 0.37 | 0.50 | 0.53 | 0.62 |
| Overlap | All | 0.60 | 0.50 | 0.79 | 0.19 | 0.31 | 0.30 | 0.45 | 0.48 | 0.60 |
| Dice | Best | 0.00 | 1.06 | 0.63 | 0.49 | 0.81 | 0.55 | 0.71 | 0.60 | 0.66 |
| Dice | Best | 0.15 | 0.87 | 0.64 | 0.45 | 0.70 | 0.53 | 0.67 | 0.59 | 0.65 |
| Dice | All | 0.10 | 2.97 | 0.46 | 0.85 | 0.95 | 0.60 | 0.62 | 0.51 | 0.51 |
| Dice | All | 0.15 | 2.00 | 0.54 | 0.65 | 0.80 | 0.59 | 0.64 | 0.56 | 0.58 |
| Dice | All | 0.20 | 1.26 | 0.62 | 0.45 | 0.63 | 0.52 | 0.62 | 0.57 | 0.62 |
| Dice | All | 0.25 | 0.81 | 0.71 | 0.35 | 0.50 | 0.47 | 0.59 | 0.59 | 0.66 |
| Dice | All | 0.30 | 0.55 | 0.77 | 0.25 | 0.38 | 0.38 | 0.51 | 0.54 | 0.64 |
| Dice | All | 0.35 | 0.35 | 0.81 | 0.16 | 0.27 | 0.26 | 0.41 | 0.44 | 0.58 |
| Cosine | Best | 0.00 | 1.05 | 0.64 | 0.48 | 0.81 | 0.55 | 0.71 | 0.60 | 0.66 |
| Cosine | Best | 0.15 | 0.94 | 0.66 | 0.45 | 0.75 | 0.53 | 0.70 | 0.60 | 0.68 |
| Cosine | All | 0.10 | 3.34 | 0.44 | 0.89 | 0.97 | 0.59 | 0.60 | 0.49 | 0.49 |
| Cosine | All | 0.15 | 2.40 | 0.52 | 0.75 | 0.88 | 0.61 | 0.66 | 0.55 | 0.57 |
| Cosine | All | 0.20 | 1.58 | 0.58 | 0.53 | 0.70 | 0.55 | 0.64 | 0.57 | 0.60 |
| Cosine | All | 0.25 | 1.08 | 0.66 | 0.41 | 0.58 | 0.51 | 0.61 | 0.59 | 0.64 |
| Cosine | All | 0.30 | 0.74 | 0.74 | 0.32 | 0.48 | 0.45 | 0.58 | 0.59 | 0.67 |
| Cosine | All | 0.35 | 0.48 | 0.82 | 0.21 | 0.35 | 0.34 | 0.49 | 0.52 | 0.64 |
| Cosine | All | 0.40 | 0.32 | 0.80 | 0.15 | 0.25 | 0.25 | 0.37 | 0.43 | 0.55 |

Table 6.3: Evaluation against the intersection of annotators 1 and 2.
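The excerpt does not define the score columns of Table 6.3. Judging from the numbers, the F columns appear to be the usual F_β combination of P and R, and the RF columns the same combination with RR in place of R; this reading is an assumption, not something the table states. The minimal Python check below recomputes two rows under that assumption (`f_beta` is a hypothetical helper name). Other rows agree to within 0.01, which is what one would expect if the table was computed from unrounded P and R.

```python
def f_beta(precision: float, recall: float, beta: float = 1.0) -> float:
    """Weighted harmonic mean of precision and recall (F-beta score).

    beta = 1 gives F1; beta = 0.5 weights precision more heavily (F0.5).
    """
    if precision == 0.0 and recall == 0.0:
        return 0.0
    b2 = beta * beta
    return (1 + b2) * precision * recall / (b2 * precision + recall)


# (P, R, RR, reported F1, RF1, F0.5, RF0.5) copied from Table 6.3.
rows = {
    "All (baseline)": (0.37, 1.00, 1.00, 0.54, 0.54, 0.42, 0.42),
    "Cosine, All, sigma=0.30": (0.74, 0.32, 0.48, 0.45, 0.58, 0.59, 0.67),
}

for name, (p, r, rr, f1, rf1, f05, rf05) in rows.items():
    computed = (
        round(f_beta(p, r, 1.0), 2),
        round(f_beta(p, rr, 1.0), 2),  # assumption: RF columns use RR instead of R
        round(f_beta(p, r, 0.5), 2),
        round(f_beta(p, rr, 0.5), 2),
    )
    print(name, "computed:", computed, "reported:", (f1, rf1, f05, rf05))
```

For both rows the computed and reported tuples coincide.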

1. Create a new sparse matrix $M$ of size $|V| \times |V|$.

2. In each cell $M_{ij}$, put the similarity between the adjacency vector of word $v_i$ and the adjacency vector of word $v_j$, $M_{ij} = sim(v_i, v_j)$.

3. Extract a cluster $C_i$ from each row $M_i$, consisting of the words $v_j$ for which $M_{ij} > \theta$, where $\theta$ is a selected threshold. A lower $\theta$ leads to larger synsets and higher ambiguity, while a higher $\theta$ results in fewer and smaller synsets, or no synsets at all.

4. Each cluster $C_i$ whose elements are all included in a larger cluster $C_j$ (i.e. $C_i \cup C_j = C_j$ and $C_i \cap C_j = C_i$) is merged with $C_j$, giving rise to a new cluster $C_k$ with the same elements as $C_j$.
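The four steps above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the thesis implementation: the graph is taken to be undirected with binary adjacency vectors, the unspecified function $sim$ is assumed to be cosine similarity, and the names `cluster_words`, `edges` and `theta` are hypothetical. The sparse matrix $M$ is stored as a dictionary holding only non-zero cells.

```python
import math
from typing import Dict, FrozenSet, Iterable, List, Sequence, Tuple


def cosine(a: Sequence[int], b: Sequence[int]) -> float:
    """Cosine similarity between two adjacency vectors (assumed choice of sim)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def cluster_words(
    words: List[str],
    edges: Iterable[Tuple[str, str]],
    theta: float,
) -> List[FrozenSet[str]]:
    index = {w: i for i, w in enumerate(words)}
    n = len(words)

    # Binary adjacency vectors of the undirected graph, one row per word.
    adj = [[0] * n for _ in range(n)]
    for u, v in edges:
        adj[index[u]][index[v]] = adj[index[v]][index[u]] = 1

    # Steps 1-2: sparse |V| x |V| matrix with M[i, j] = sim(v_i, v_j),
    # storing only the non-zero cells.
    m: Dict[Tuple[int, int], float] = {}
    for i in range(n):
        for j in range(n):
            s = cosine(adj[i], adj[j])
            if s > 0.0:
                m[(i, j)] = s

    # Step 3: one cluster per row i, holding the words v_j with M[i, j] > theta.
    # Collecting them in a set also collapses identical clusters.
    clusters = {
        frozenset(words[j] for j in range(n) if m.get((i, j), 0.0) > theta)
        for i in range(n)
    }
    clusters.discard(frozenset())  # rows with nothing above theta

    # Step 4: a cluster contained in a strictly larger one is merged into it.
    # The merged cluster has the larger cluster's elements, so the subset is dropped.
    merged = [c for c in clusters if not any(c < other for other in clusters)]
    return sorted(merged, key=sorted)


words = ["a", "b", "c", "g", "d", "e", "f"]
edges = [("a", "b"), ("a", "c"), ("b", "c"), ("a", "g"),
         ("d", "e"), ("d", "f"), ("e", "f")]
print(cluster_words(words, edges, theta=0.4))
```

On this toy graph, rows `a`, `b`, `c` and `g` yield the clusters {a, b, c}, {a, b, c, g} (twice) and {b, c, g}; step 4 reduces them to {a, b, c, g}, next to {d, e, f}. The double loop compares every pair of words, which is fine for an illustration; on a real graph one would likely only compare words that share at least one neighbour, since all other cells of $M$ are zero.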

After clustering, we have a thesaurus $T$ with synsets $S_i$ and a set of discovered clusters $C$. The simplest approach would be to treat the clusters as synsets and add them to the thesaurus. However, some of the clusters might already be fully or partly included in existing synsets. Therefore, before adding the clusters to $T$, we compute the similarity between the words in each synset $S_i$ and the words in each discovered cluster $C_j$. For this purpose, we measure the overlap between these two sets, using the overlap coefficient:

$$\mathrm{Overlap}(S_i, C_j) = \frac{|S_i \cap C_j|}{\min(|S_i|, |C_j|)} \tag{6.1}$$
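Equation 6.1 is straightforward to compute on Python sets. Because Table 6.3 also reports results for the Jaccard, Dice and Cosine measures, the sketch below includes their standard set-based definitions as well; this excerpt only defines the overlap coefficient, so the exact formulas behind the other three columns are an assumption. Function names are illustrative, and the synsets and clusters in the example are made-up Portuguese words.

```python
import math
from typing import Callable, Dict, List, Set, Tuple

Measure = Callable[[Set[str], Set[str]], float]


def overlap(s: Set[str], c: Set[str]) -> float:
    """Overlap coefficient (Equation 6.1): |S ∩ C| / min(|S|, |C|)."""
    smaller = min(len(s), len(c))
    return len(s & c) / smaller if smaller else 0.0


def jaccard(s: Set[str], c: Set[str]) -> float:
    """|S ∩ C| / |S ∪ C| (standard definition, assumed)."""
    union = len(s | c)
    return len(s & c) / union if union else 0.0


def dice(s: Set[str], c: Set[str]) -> float:
    """2 |S ∩ C| / (|S| + |C|) (standard definition, assumed)."""
    total = len(s) + len(c)
    return 2 * len(s & c) / total if total else 0.0


def cosine(s: Set[str], c: Set[str]) -> float:
    """|S ∩ C| / sqrt(|S| |C|) (set-based cosine, assumed)."""
    denom = math.sqrt(len(s) * len(c))
    return len(s & c) / denom if denom else 0.0


def synset_cluster_similarities(
    synsets: List[Set[str]], clusters: List[Set[str]], measure: Measure = overlap
) -> Dict[Tuple[int, int], float]:
    """Similarity between every synset S_i and every discovered cluster C_j."""
    return {
        (i, j): measure(s, c)
        for i, s in enumerate(synsets)
        for j, c in enumerate(clusters)
    }


synsets = [{"carro", "automóvel", "viatura"}, {"casa", "lar"}]
clusters = [{"automóvel", "viatura", "veículo", "auto"}, {"casa", "lar", "moradia"}]
sims = synset_cluster_similarities(synsets, clusters)
print(sims[(0, 0)])  # 2 shared words / min(3, 4)
print(sims[(1, 1)])  # the cluster contains the whole synset
```

The overlap coefficient reaches 1 whenever one set is contained in the other, regardless of their sizes, which makes it suited to spotting clusters that are already included in a synset (or vice versa). Jaccard and Dice, by contrast, are also lowered by the size difference between the two sets.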