Onto.PT: Towards the Automatic Construction of a Lexical Ontology ...

Onto.PT: Towards the Automatic Construction of a Lexical Ontology ...

Onto.PT: Towards the Automatic Construction of a Lexical Ontology ...

You also want an ePaper? Increase the reach of your titles

YUMPU automatically turns print PDFs into web optimized ePapers that Google loves.

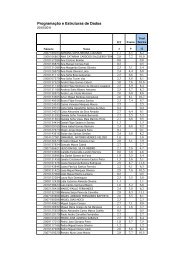

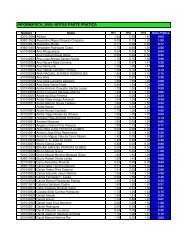

7.2. Ontologising performance

| Algorithm | Precision (%) | Recall (%) | F1 (%) | F0.5 (%) | RF1 (%) |
|:--|--:|--:|--:|--:|--:|
| *Nouns (800 tb-triples)* | | | | | |
| RP | 99.8 | 82.0 | 90.0 | 95.6 | 99.8 |
| AC | 95.4 | 78.7 | 86.3 | 91.5 | 95.4 |
| NT | 96.1 | 76.1 | 84.9 | 91.3 | 96.1 |
| NT+AC | 96.5 | 75.9 | 85.0 | 91.5 | 96.5 |
| PR | 56.3 | 53.4 | 54.9 | 55.7 | 56.3 |
| MD | 52.8 | 85.2 | 65.2 | 57.1 | 52.8 |
| *Verbs (800 tb-triples)* | | | | | |
| RP | 99.7 | 84.5 | 91.5 | 96.2 | 99.7 |
| AC | 92.3 | 83.0 | 87.4 | 90.3 | 92.3 |
| NT | 95.2 | 80.1 | 87.0 | 91.7 | 95.2 |
| NT+AC | 95.2 | 80.1 | 87.0 | 91.7 | 95.2 |
| PR | 52.0 | 56.9 | 54.3 | 52.9 | 52.0 |
| MD | 69.6 | 87.1 | 77.4 | 72.5 | 69.6 |
| *Adjectives (800 tb-triples)* | | | | | |
| RP | 100.0 | 94.8 | 97.3 | 98.9 | 100.0 |
| AC | 95.2 | 93.0 | 94.1 | 94.7 | 95.2 |
| NT | 96.1 | 91.3 | 93.6 | 95.1 | 96.1 |
| NT+AC | 96.3 | 91.3 | 93.7 | 95.3 | 96.3 |
| PR | 70.6 | 73.6 | 72.1 | 71.2 | 70.6 |
| MD | 61.6 | 96.8 | 75.3 | 66.5 | 61.6 |
| *Adverbs (476 tb-triples)* | | | | | |
| RP | 100.0 | 90.2 | 94.9 | 97.9 | 100.0 |
| AC | 99.2 | 92.5 | 95.7 | 97.8 | 99.2 |
| NT | 96.8 | 91.7 | 94.2 | 95.8 | 96.8 |
| NT+AC | 98.3 | 89.5 | 93.7 | 96.4 | 98.3 |
| PR | 92.4 | 91.7 | 92.1 | 92.3 | 92.4 |
| MD | 64.8 | 95.8 | 77.2 | 69.2 | 64.8 |

Table 7.4: Results of ontologising samples of antonymy tb-triples of TeP in TeP, using all TeP’s antonymy relations as a lexical network N.
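The F1 and F0.5 columns of Table 7.4 can be recomputed from the precision and recall columns. The sketch below assumes the standard F-measure, F_β = (1 + β²)·P·R / (β²·P + R), since the thesis's own definition is not part of this excerpt; the function name `f_beta` and the selection of rows are illustrative choices. For the rows checked, rounding the result to one decimal reproduces the tabulated values.

```python
def f_beta(precision: float, recall: float, beta: float = 1.0) -> float:
    """Standard F-measure: weighted harmonic mean of precision and recall.

    beta = 1 gives F1; beta = 0.5 gives F0.5, which weights precision more.
    Inputs and output use the same scale (here, percentages).
    """
    if precision == 0 and recall == 0:
        return 0.0
    b2 = beta * beta
    return (1 + b2) * precision * recall / (b2 * precision + recall)


# (precision, recall, F1, F0.5) in %, copied from Table 7.4.
ROWS = {
    ("Nouns", "RP"): (99.8, 82.0, 90.0, 95.6),
    ("Nouns", "MD"): (52.8, 85.2, 65.2, 57.1),
    ("Verbs", "AC"): (92.3, 83.0, 87.4, 90.3),
    ("Adjectives", "RP"): (100.0, 94.8, 97.3, 98.9),
}

if __name__ == "__main__":
    for (pos, alg), (p, r, f1_tab, f05_tab) in ROWS.items():
        f1 = round(f_beta(p, r), 1)
        f05 = round(f_beta(p, r, beta=0.5), 1)
        print(f"{pos:<10} {alg:<3} F1={f1} (table {f1_tab})  F0.5={f05} (table {f05_tab})")
```

Because the table's precision and recall are themselves rounded, a recomputed value can occasionally differ from the printed one by 0.1. The relaxed measure RF1 is not attempted here, as its definition is not in this excerpt; in every row of Table 7.4 it coincides with precision.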

RF1 always higher than 90%, and F1 always higher than 84%. This confirms our initial intuition about using these algorithms.
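The thresholds above can be checked directly against Table 7.4. The statement begins mid-sentence and its subject is not part of this excerpt, so the sketch assumes it refers to RP, AC, NT and NT+AC, the algorithms for which it holds; PR and MD fall well below both thresholds. The helper `worst_case` is hypothetical.

```python
# (F1, RF1) in %, copied from Table 7.4.
TABLE_7_4 = {
    "Nouns": {"RP": (90.0, 99.8), "AC": (86.3, 95.4), "NT": (84.9, 96.1),
              "NT+AC": (85.0, 96.5), "PR": (54.9, 56.3), "MD": (65.2, 52.8)},
    "Verbs": {"RP": (91.5, 99.7), "AC": (87.4, 92.3), "NT": (87.0, 95.2),
              "NT+AC": (87.0, 95.2), "PR": (54.3, 52.0), "MD": (77.4, 69.6)},
    "Adjectives": {"RP": (97.3, 100.0), "AC": (94.1, 95.2), "NT": (93.6, 96.1),
                   "NT+AC": (93.7, 96.3), "PR": (72.1, 70.6), "MD": (75.3, 61.6)},
    "Adverbs": {"RP": (94.9, 100.0), "AC": (95.7, 99.2), "NT": (94.2, 96.8),
                "NT+AC": (93.7, 98.3), "PR": (92.1, 92.4), "MD": (77.2, 64.8)},
}


def worst_case(table, algorithms):
    """Lowest F1 and lowest RF1 over the given algorithms, across all POS."""
    scores = [table[pos][alg] for pos in table for alg in algorithms]
    return min(f1 for f1, _ in scores), min(rf1 for _, rf1 in scores)


if __name__ == "__main__":
    f1_min, rf1_min = worst_case(TABLE_7_4, ["RP", "AC", "NT", "NT+AC"])
    print(f"RP/AC/NT/NT+AC: min F1 = {f1_min}, min RF1 = {rf1_min}")
    print(f"F1 > 84: {f1_min > 84}, RF1 > 90: {rf1_min > 90}")
    print("PR/MD (min F1, min RF1):", worst_case(TABLE_7_4, ["PR", "MD"]))
```

Under that assumption, the worst cases are F1 = 84.9 (NT on nouns) and RF1 = 92.3 (AC on verbs).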

However, one should not expect to extract a complete lexical network from dictionaries, let alone from other kinds of text. Even though dictionaries contain extensive lists of words, senses and (implicitly) relations, they can hardly cover all the possible tb-triples resulting from an sb-triple, especially for large synsets.
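Large synsets are hard to cover because an sb-triple linking synsets A and B corresponds to |A| × |B| possible tb-triples, so the number of word pairs a dictionary would have to state grows multiplicatively. The sketch below assumes that synsets are Python sets of lemmas and that a lexical network is a set of (term, relation, term) tuples; the function names, the relation label `antonym_of` and the example words are hypothetical.

```python
from itertools import product


def expand_sb_triple(synset_a, relation, synset_b):
    """All tb-triples (a, relation, b) that an sb-triple (A, relation, B) stands for."""
    return [(a, relation, b) for a, b in product(sorted(synset_a), sorted(synset_b))]


def coverage(sb_triple, network):
    """Fraction of an sb-triple's possible tb-triples present in a lexical network.

    Matching is directional here: (b, relation, a) does not count for (a, relation, b).
    """
    candidates = expand_sb_triple(*sb_triple)
    if not candidates:
        raise ValueError("both synsets must be non-empty")
    found = sum(1 for t in candidates if t in network)
    return found / len(candidates)


# Hypothetical antonymy sb-triple between a 2-word and a 3-word synset.
sb = ({"alto", "elevado"}, "antonym_of", {"baixo", "pequeno", "rasteiro"})
# Hypothetical dictionary-derived network stating only two of the six pairs.
network = {("alto", "antonym_of", "baixo"), ("elevado", "antonym_of", "baixo")}

if __name__ == "__main__":
    print(len(expand_sb_triple(*sb)))      # 6
    print(round(coverage(sb, network), 3))  # 0.333
```

Even in this small case, a network that states two correct pairs covers only a third of the sb-triple; adding one word to either synset would add two or three more pairs to cover.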

In Tables 7.5, 7.6, 7.7 and 7.8, we present the results of a more realistic scenario, in which only part of TeP’s lexical network is used (50%, 25% and 12.5%). As expected, performance decreases for smaller lexical networks, but it decreases more significantly for some algorithms than for others. For instance, RP’s performance decreases drastically, and it never achieves the best F1 or RF1, as it did when using the whole network. On the other hand, RP+AC is the algorithm that performs best with missing tb-triples. Besides having the best precision in most scenarios, it always has the best F1, F0.5 and RF1. In some situations, AC, NT and NT+AC also perform very close to RP+AC.
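The reduced scenarios keep 50%, 25% and 12.5% of the lexical network, and the part used is selected randomly. This excerpt does not describe the sampling procedure, so the sketch below assumes uniform sampling of tb-triples without replacement and a fixed seed for reproducibility; `sample_network` and the placeholder network are hypothetical.

```python
import random


def sample_network(network, fraction, seed=0):
    """Return a random subset holding `fraction` of a network's tb-triples.

    Sampling is without replacement; sorting first makes the result depend
    only on the seed, not on set iteration order.
    """
    if not 0.0 <= fraction <= 1.0:
        raise ValueError("fraction must be between 0 and 1")
    triples = sorted(network)
    k = round(len(triples) * fraction)
    return set(random.Random(seed).sample(triples, k))


# Hypothetical network of 1000 antonymy tb-triples between placeholder terms.
full = {(f"w{i}", "antonym_of", f"v{i}") for i in range(1000)}

if __name__ == "__main__":
    for fraction in (0.5, 0.25, 0.125):
        part = sample_network(full, fraction)
        print(fraction, len(part), part <= full)
```

Each reduced network would then replace N when ontologising the same tb-triples, so that any drop in performance reflects only the missing relations.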

The main difference between the previous kind of scenario and a real scenario is that the part of the lexical network used was selected randomly, which tends to