Onto.PT: Towards the Automatic Construction of a Lexical Ontology ...


8.3. Evaluation

the most reliable. Following this kind of evaluation, we concluded that the accuracy of tb-triples extracted from dictionaries depends on the relation type. Accuracies range from 99%, for synonymy, to slightly more than 70%, for purpose-of and property-of. Hypernymy, the relation with the most extracted instances, is about 90% accurate.
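Each per-relation figure is the number of tb-triples judged correct for that relation divided by the number of tb-triples of that relation that were judged. The following is a minimal Python sketch of that computation, assuming the manual judgements are available as hypothetical `(relation, is_correct)` pairs. The function name and the sample data are illustrative only and are not taken from the evaluation itself.

```python
from collections import defaultdict


def accuracy_by_relation(judgements):
    """Return, per relation type, the share of tb-triples judged correct.

    `judgements` is an iterable of (relation, is_correct) pairs, one per
    manually judged tb-triple (hypothetical input format).
    """
    totals = defaultdict(int)
    correct = defaultdict(int)
    for relation, is_correct in judgements:
        totals[relation] += 1
        if is_correct:
            correct[relation] += 1
    # Relations with no correct triple get 0.0 via the defaultdict.
    return {relation: correct[relation] / totals[relation] for relation in totals}


# Hypothetical judgements for illustration only (not the thesis data).
sample = [
    ("synonymy", True), ("synonymy", True),
    ("hypernymy", True), ("hypernymy", False),
    ("purpose-of", True), ("purpose-of", False), ("purpose-of", False),
]
for relation, acc in accuracy_by_relation(sample).items():
    print(f"{relation}: {acc:.0%}")
```

Keeping the counts separate per relation is what allows the accuracy to be reported by relation type rather than as a single pooled figure.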

The enrichment of synsets (chapter 6) was evaluated by comparison with a gold-standard reference created specifically for this task. Agreement between the judges in the creation of the reference was moderate, and the accuracy of assigning a synonymy pair to a synset with our procedure and the selected parameters was between 76% and 81%, depending on the judge. Recall that TeP is used as the starting point for the synset-base. Since TeP was created manually, the final quality of the synsets should be higher than these values. As for the creation of new clusters from the remaining synonymy pairs, manual evaluation was again used, and the accuracy of this step was around 90%. This is an improvement over discovering clusters from the complete synonymy network, where accuracy for nouns was about 75% (chapter 5).
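Two quantities appear in this paragraph: the accuracy of the procedure against each judge's reference, and the agreement between judges. The following minimal Python sketch computes both under stated assumptions: each synonymy pair is labelled with the identifier of the synset it is assigned to, there are two judges, and agreement is measured with Cohen's kappa. The coefficient actually used in the evaluation may differ. On the commonly cited Landis and Koch scale, "moderate" corresponds to a kappa between 0.41 and 0.60. All function names and labels are hypothetical.

```python
from collections import Counter


def accuracy(predicted, reference):
    """Share of synonymy pairs where the procedure chose the same synset as the judge."""
    if len(predicted) != len(reference) or not reference:
        raise ValueError("need two non-empty label lists of equal length")
    return sum(p == r for p, r in zip(predicted, reference)) / len(reference)


def cohen_kappa(judge_a, judge_b):
    """Cohen's kappa: observed agreement corrected for agreement expected by chance."""
    if len(judge_a) != len(judge_b) or not judge_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(judge_a)
    observed = sum(a == b for a, b in zip(judge_a, judge_b)) / n
    freq_a, freq_b = Counter(judge_a), Counter(judge_b)
    # Chance agreement: both judges independently pick the same label.
    expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if expected == 1.0:
        # Degenerate case: both judges used one identical label for every item.
        return 1.0
    return (observed - expected) / (1.0 - expected)


# Hypothetical synset identifiers chosen for five synonymy pairs.
procedure = ["s1", "s2", "s3", "s1", "s4"]
judge_a = ["s1", "s2", "s3", "s2", "s4"]
judge_b = ["s1", "s3", "s5", "s1", "s4"]

print("accuracy vs judge A:", accuracy(procedure, judge_a))
print("accuracy vs judge B:", accuracy(procedure, judge_b))
print("kappa (A, B):", round(cohen_kappa(judge_a, judge_b), 3))
```

Because each judge produces a separate reference, the same procedure output yields one accuracy value per judge. This is why the paragraph reports a range rather than a single number.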

Finally, to evaluate the attachment of the term arguments of tb-triples to synsets (chapter 7), we used two gold standards: one created manually, for the hypernymy, part-of and purpose-of relations, and TeP, for antonymy. Using the best-performing algorithms, the precision of this step ranged from 99%, for antonymy, to 60-64%, for the other relations. These figures, however, consider only attachments to synsets with more than one lexical item. For lexical items that give rise to a single-item synset, attachment is straightforward. Given that more than two thirds of the Onto.PT synsets are single-item, ontologising performance will be higher in the actual creation of Onto.PT.
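A weighted average makes the claim that ontologising performance will be higher in practice more concrete. The sketch below is a hypothetical model, not the computation used in the thesis. It assumes that attachments to single-item synsets are always correct and that a fraction f of all attachments target such synsets. Under those assumptions, overall precision is f + (1 - f) * p_multi, where p_multi is the precision measured on multi-item synsets. Note that f is a share of attachments, which need not equal the reported share of single-item synsets.

```python
def overall_precision(p_multi: float, single_fraction: float) -> float:
    """Expected precision when single-item attachments count as correct.

    Hypothetical model: a share `single_fraction` of attachments target
    single-item synsets (assumed always correct); the remaining share is
    correct with probability `p_multi`.
    """
    for name, value in (("p_multi", p_multi), ("single_fraction", single_fraction)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be in [0, 1], got {value}")
    return single_fraction + (1.0 - single_fraction) * p_multi


# Illustrative values only: p_multi inside the reported 60-64% range and
# a few hypothetical shares of single-item attachments.
for f in (0.0, 0.5, 0.67):
    print(f"f={f:.2f} -> overall precision {overall_precision(0.62, f):.3f}")
```

With f = 0 the model reduces to the precision measured on multi-item synsets. Any positive f pushes the estimate towards 1, which is the direction the paragraph argues for.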

Taken together, these results indicate that the contents of Onto.PT will have some reliability issues, which are common in automatic approaches. However, it is not possible to estimate a value or an interval for this reliability, because the ECO approach is not linear. With this in mind, we complemented the previous evaluation indicators by manually classifying a small part of the Onto.PT sb-triples, with results provided in the next section. Although manual evaluation has almost the same problems as creating large resources manually (it is tedious, time-consuming and hard to repeat), it is also the most reliable kind of evaluation.

Besides the manual evaluation, section 8.4 presents how Onto.PT can be useful for performing some NLP tasks. This can be seen as a task-based evaluation, the fourth strategy referred to by Brank et al. (2005).

8.3.2 Manual evaluation

As mentioned earlier, manual evaluation suffers from issues similar to those of creating large resources manually: it is tedious, time-consuming and hard to repeat. However, one of the few alternatives we found in the literature for evaluating a wordnet automatically is based on dictionaries (Nadig et al., 2008). Since all the available Portuguese dictionaries were exploited in the creation of Onto.PT, such an evaluation would be biased.

The manual evaluation of Onto.PT considered first the synsets alone and then the sb-triples themselves. More precisely, it had the following steps:

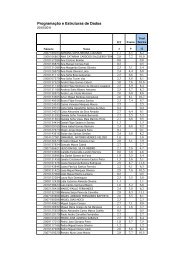

• Two random samples of 300 sb-triples were collected from Onto.PT: one with