ERATO Proceedings Istanbul 2006.pdf - Odeon


generation of the virtual bodies must also take into account the total number of polygons used to create the meshes, in order to balance the constraints of real-time 3D simulation against the skin-deformation accuracy of the models (meshes with more polygons deform better but are more expensive to simulate).

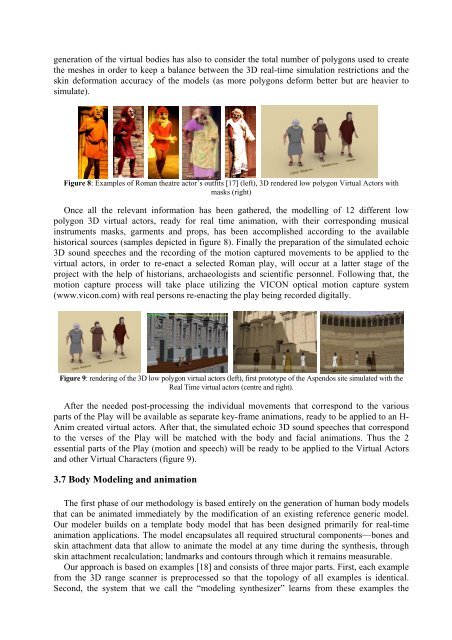

Figure 8: Examples of Roman theatre actors’ outfits [17] (left); 3D-rendered low-polygon virtual actors with masks (right)

Once all the relevant information had been gathered, 12 different low-polygon 3D virtual actors, ready for real-time animation, were modelled together with their corresponding musical instruments, masks, garments and props, in accordance with the available historical sources (samples are shown in Figure 8). Finally, the preparation of the simulated echoic 3D sound speeches and the recording of the motion-captured movements to be applied to the virtual actors, in order to re-enact a selected Roman play, will take place at a later stage of the project with the help of historians, archaeologists and scientific personnel. The motion capture itself will be performed with the VICON optical motion capture system (www.vicon.com), digitally recording real performers as they re-enact the play.

Figure 9: Rendering of the 3D low-polygon virtual actors (left); first prototype of the Aspendos site simulated with the real-time virtual actors (centre and right).

After the necessary post-processing, the individual movements corresponding to the various parts of the Play will be available as separate key-frame animations. These animations will be ready to be applied to virtual actors built according to the H-Anim standard. The simulated echoic 3D sound speeches corresponding to the verses of the Play will then be matched with the body and facial animations. In this way, the two essential components of the Play (motion and speech) will be ready to be applied to the Virtual Actors and other Virtual Characters (Figure 9).
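The key-frame animations and their pairing with speech could be organised roughly as follows. This Python sketch is an illustrative assumption, not the project's pipeline, and it covers body joints only.

- Each joint track holds a single rotation angle in degrees and is sampled with linear interpolation. A production system would interpolate full rotations, for example with quaternion slerp.
- Joint names such as `l_shoulder` and `l_elbow` follow the H-Anim naming convention.
- The class and function names are hypothetical.
- The rule that a verse starts only once the previous motion and the previous speech have both finished is also a hypothetical scheduling choice.

```python
from __future__ import annotations

from bisect import bisect_right
from dataclasses import dataclass


@dataclass
class KeyframeTrack:
    """Key-frames for one joint: strictly increasing times (s) and angles (degrees)."""
    times: list[float]
    angles: list[float]

    def sample(self, t: float) -> float:
        # Hold the first/last key outside the animated interval.
        if t <= self.times[0]:
            return self.angles[0]
        if t >= self.times[-1]:
            return self.angles[-1]
        i = bisect_right(self.times, t)  # times[i - 1] <= t < times[i]
        t0, t1 = self.times[i - 1], self.times[i]
        a0, a1 = self.angles[i - 1], self.angles[i]
        return a0 + (t - t0) / (t1 - t0) * (a1 - a0)  # linear interpolation


@dataclass
class AnimationClip:
    """One post-processed movement, with tracks keyed by H-Anim joint name."""
    name: str
    tracks: dict[str, KeyframeTrack]

    def duration(self) -> float:
        return max(track.times[-1] for track in self.tracks.values())

    def pose_at(self, t: float) -> dict[str, float]:
        return {joint: track.sample(t) for joint, track in self.tracks.items()}


def schedule_verses(verses: list[tuple[AnimationClip, float]]) -> list[float]:
    """Start time of each (motion clip, speech length in s) pair, played back to back."""
    starts, t = [], 0.0
    for clip, speech_seconds in verses:
        starts.append(t)
        # The next verse waits for whichever of motion or speech ends later.
        t += max(clip.duration(), speech_seconds)
    return starts


raise_arm = AnimationClip("raise_arm", {
    "l_shoulder": KeyframeTrack([0.0, 1.0, 2.0], [0.0, 90.0, 45.0]),
    "l_elbow": KeyframeTrack([0.0, 2.0], [10.0, 30.0]),
})
print(raise_arm.pose_at(1.5))  # {'l_shoulder': 67.5, 'l_elbow': 25.0}
print(schedule_verses([(raise_arm, 3.0), (raise_arm, 1.0)]))  # [0.0, 3.0]
```

Keying tracks by joint name means the same clip can drive any actor whose skeleton uses the same names. This is the practical benefit of a shared convention such as H-Anim.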

3.7 Body Modeling and Animation

The first phase of our methodology is based entirely on generating human body models that can be animated immediately, by modifying an existing generic reference model. Our modeler builds on a template body model designed primarily for real-time animation applications. The model encapsulates all the required structural components. Its bones and skin-attachment data allow the model to be animated at any time during the synthesis, through recalculation of the skin attachment. Its landmarks and contours keep the model measurable.
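The text does not say which deformation scheme the skin-attachment data drives. The Python below is a sketch only and assumes standard linear blend skinning. It deforms one vertex as the weighted sum of its bone transforms. It also measures a landmark contour, such as a girth, as the perimeter of a closed loop of vertex indices. The function names, the 3x4 row-major matrix layout, the bone names and the data are hypothetical. The recalculation of the attachment weights after the body is reshaped is not shown.

```python
import math


def transform(matrix, v):
    """Apply a 3x4 affine matrix (rotation | translation, row-major) to point v."""
    return tuple(
        matrix[r][0] * v[0] + matrix[r][1] * v[1] + matrix[r][2] * v[2] + matrix[r][3]
        for r in range(3)
    )


def skin_vertex(rest_vertex, influences, skinning_matrices):
    """Linear blend skinning of one vertex.

    influences        -- (bone name, weight) pairs whose weights sum to 1
    skinning_matrices -- per bone, current pose x inverse bind pose, as 3x4 matrices
    """
    out = [0.0, 0.0, 0.0]
    for bone, weight in influences:
        moved = transform(skinning_matrices[bone], rest_vertex)
        for k in range(3):
            out[k] += weight * moved[k]
    return tuple(out)


def contour_length(vertices, contour):
    """Perimeter of a closed landmark contour given as a list of vertex indices."""
    closed = contour + contour[:1]  # return to the first vertex
    return sum(math.dist(vertices[a], vertices[b]) for a, b in zip(closed, closed[1:]))


identity = [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 1, 0]]
shift_x = [[1, 0, 0, 1], [0, 1, 0, 0], [0, 0, 1, 0]]  # translate +1 along x
print(skin_vertex((0.0, 0.0, 0.0), [("sacroiliac", 0.5), ("l_hip", 0.5)],
                  {"sacroiliac": identity, "l_hip": shift_x}))  # (0.5, 0.0, 0.0)

square = [(0, 0, 0), (1, 0, 0), (1, 1, 0), (0, 1, 0)]
print(contour_length(square, [0, 1, 2, 3]))  # 4.0
```

Because the contour is stored as vertex indices rather than coordinates, the same measurement remains valid after the mesh is reshaped or posed. This is one way a template model can "remain measurable".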

Our approach is example-based [18] and consists of three major parts. First, each example from the 3D range scanner is preprocessed so that all examples share an identical topology. Second, the system that we call the “modeling synthesizer” learns from these examples the