Journal of Research in Innovative Teaching - National University



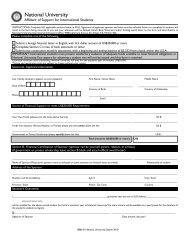

assessment ratings for graduates versus undergraduates were very similar (also see Table 2). Comparisons among the college/schools revealed very little (see Table 3), except that the College of Letters and Science (COLS) did the best job of balancing low GPAs with high assessment ratings. Finally, full-time and associate faculty (compared to adjuncts) did better at keeping the GPA low and the assessment ratings high (see Table 4).

Validity Analysis

One way to assess validity is through factor analysis. Factor analysis is a statistical technique that shows whether items tend to fall into meaningful clusters. Ideally, if the assessment instrument were valid, we would expect the items listed for Student Assessment of Learning (SAL) to form one unique factor, items under Assessment of Teaching (AT) to form another unique factor, and items under Assessment of Course Content (ACC) to form a third unique factor. (Assessment of Web-Based Technology could not be included because only the online students received these items.) The set of assessment questions did not yield a three-factor solution. Instead, two distinct factors were uncovered. In general, the items listed under AT formed one factor, and the items under SAL and ACC formed the second factor. Although this factor analysis was not completely consistent with the expected factor structure, the results give some credence to the validity of the assessment because the factors suggest that the instrument measures teaching ability and some combination of learning and course content.
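
To illustrate how items can collapse into fewer clusters than an instrument's headings suggest, the following is a minimal sketch on synthetic data. It is not the analysis reported here: the extraction method used in the study is not stated, so the sketch swaps in a simple principal-component approach with the eigenvalue-greater-than-one (Kaiser) rule. The item counts, loadings, sample size, and the function names `simulate_ratings` and `count_factors_kaiser` are all assumptions made for illustration.

```python
import numpy as np


def simulate_ratings(n_students=2000, seed=0):
    """Build hypothetical item responses driven by two latent traits.

    Assumption: three AT items follow a 'teaching' trait, while three SAL
    and three ACC items share a single 'learning/content' trait.
    """
    rng = np.random.default_rng(seed)
    teaching = rng.normal(size=(n_students, 1))
    learning_content = rng.normal(size=(n_students, 1))

    def items(trait, k):
        # Each item = 0.8 * latent trait + independent noise (assumed values).
        return 0.8 * trait + 0.6 * rng.normal(size=(n_students, k))

    sal = items(learning_content, 3)
    at = items(teaching, 3)
    acc = items(learning_content, 3)
    return np.hstack([sal, at, acc])


def count_factors_kaiser(responses):
    """Count eigenvalues of the item correlation matrix that exceed 1."""
    corr = np.corrcoef(responses, rowvar=False)
    eigenvalues = np.linalg.eigvalsh(corr)[::-1]  # sorted largest first
    return int(np.sum(eigenvalues > 1.0)), eigenvalues


ratings = simulate_ratings()
n_factors, eigenvalues = count_factors_kaiser(ratings)
print("factors retained:", n_factors)
print("eigenvalues:", np.round(eigenvalues, 2))
```

Because the simulated SAL and ACC items share one latent trait, the nine items arranged under three headings are governed by only two underlying dimensions, which is the kind of pattern described above.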

Another way to examine validity is to see if the assessment instrument is related to other variables as expected. In the comparative analysis, the ratings were related to the type of class and faculty rank in ways that we would expect a valid instrument to behave (e.g., online classes get lower ratings, full-time and associate faculty get higher ratings).

Another variable of interest is grades. Although most instructors believe that assessment ratings are related to grades, the empirical data have not always supported this presumed relationship. However, most studies have only looked at linear relationships and have not considered the possibility that the relationship might be curvilinear. Indeed, when we look at the function that relates GPA to the question that asks for an overall assessment of the teacher (“Overall, the instructor was an effective teacher”), we see a non-linear trend (see Figure 1). The traditional Pearson correlation coefficient was not significant (r = .007, p = .242) and the linear trend was not significant [F(1, 30396) = 1.37, p = .242]. The curvilinear (quadratic) trend, however, was statistically significant [F(1, 30395) = 44.25, p < .001]. This finding and the positive nature of the relationship show that student satisfaction was positively related to grades, in that students who earn higher grades tend to give their instructors higher evaluations. As noted below, this finding can be interpreted in at least two ways. One way to view these results is to argue that student ratings are associated with student performance, which supports the validity of the assessment instrument.
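
The contrast between a non-significant linear trend and a significant quadratic trend can be sketched with sequential F tests on nested regression models. The example below is a minimal illustration on synthetic data, not a re-analysis of the study: the curve shape, noise level, sample size, and the function names `residual_ss` and `trend_f_tests` are assumptions. The error degrees of freedom in the sketch (n - 2 for the linear test, n - 3 for the quadratic test) follow the same pattern as the reported values of 30396 and 30395.

```python
import numpy as np


def residual_ss(design, y):
    """Residual sum of squares from an ordinary least-squares fit."""
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    resid = y - design @ coef
    return float(resid @ resid)


def trend_f_tests(x, y):
    """Return (F_linear, F_quadratic), each with 1 numerator df.

    F_linear compares intercept-only vs. linear (error df = n - 2).
    F_quadratic compares linear vs. quadratic (error df = n - 3).
    """
    n = len(x)
    ones = np.ones(n)
    ss_mean = residual_ss(np.column_stack([ones]), y)
    ss_lin = residual_ss(np.column_stack([ones, x]), y)
    ss_quad = residual_ss(np.column_stack([ones, x, x ** 2]), y)
    f_linear = (ss_mean - ss_lin) / (ss_lin / (n - 2))
    f_quadratic = (ss_lin - ss_quad) / (ss_quad / (n - 3))
    return f_linear, f_quadratic


# Hypothetical data: a curved relationship with no overall linear slope.
rng = np.random.default_rng(1)
gpa = rng.uniform(2.0, 4.0, size=5000)
rating = 4.5 - 0.6 * (gpa - 3.0) ** 2 + rng.normal(scale=0.5, size=5000)

f_linear, f_quadratic = trend_f_tests(gpa, rating)
pearson_r = np.corrcoef(gpa, rating)[0, 1]
print(f"r = {pearson_r:.3f}, F_linear = {f_linear:.2f}, "
      f"F_quadratic = {f_quadratic:.2f}")
```

In data shaped like this, the Pearson correlation and the linear F statistic stay small while the quadratic F statistic is large, which is why a purely linear analysis can miss a real relationship between grades and ratings.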
