Ten-Year Impacts of Burkina Faso’s BRIGHT Program


n?u=RePEc:mpr:mprres:2ecdd42bb503422b802ce20da2bf64b7&r=edu

You also want an ePaper? Increase the reach of your titles

YUMPU automatically turns print PDFs into web optimized ePapers that Google loves.

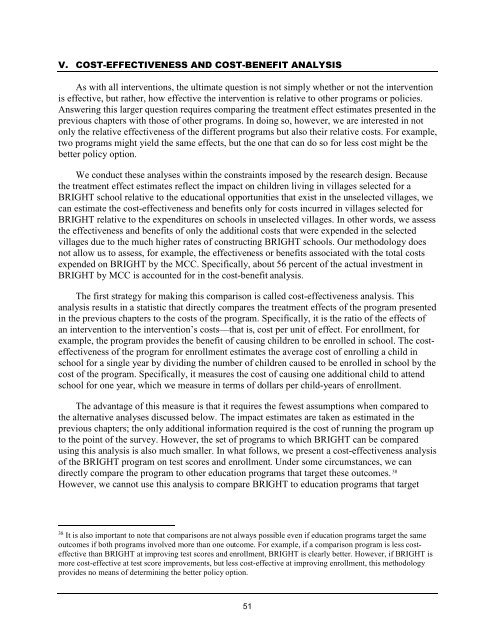

V. COST-EFFECTIVENESS AND COST-BENEFIT ANALYSIS

As with all interventions, the ultimate question is not simply whether the intervention is effective but how effective it is relative to other programs or policies. Answering this larger question requires comparing the treatment effect estimates presented in the previous chapters with those of other programs. In doing so, however, we are interested not only in the relative effectiveness of the different programs but also in their relative costs. For example, two programs might yield the same effects, but the one that achieves them at lower cost would be the better policy option.

We conduct these analyses within the constraints imposed by the research design. Because the treatment effect estimates reflect the impact on children living in villages selected for a BRIGHT school relative to the educational opportunities that exist in unselected villages, we can estimate cost-effectiveness and benefits only for the costs incurred in villages selected for BRIGHT relative to the expenditures on schools in unselected villages. In other words, we assess the effectiveness and benefits of only the additional costs expended in the selected villages because BRIGHT schools were constructed there at much higher rates. Our methodology does not allow us to assess, for example, the effectiveness or benefits associated with the total costs MCC expended on BRIGHT. In practice, this means that about 56 percent of MCC's actual investment in BRIGHT is accounted for in the cost-benefit analysis.
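To make the incremental-cost logic concrete, the following Python sketch computes the extra school spending in selected villages beyond that in unselected villages, and the share of a funder's total outlay that this comparison captures. The function names and every number are hypothetical placeholders chosen for illustration. They are not the report's cost data, and the report's actual costing procedure may differ.

```python
# Hypothetical sketch of incremental (selected-minus-unselected) cost accounting.
# All figures are illustrative placeholders, not data from the report.


def incremental_cost_per_village(spend_selected: float, spend_unselected: float) -> float:
    """Extra school spending per selected village beyond an unselected village."""
    return spend_selected - spend_unselected


def share_of_total_investment(incremental_total: float, total_investment: float) -> float:
    """Fraction of the funder's total outlay captured by the incremental comparison."""
    if total_investment <= 0:
        raise ValueError("total_investment must be positive")
    return incremental_total / total_investment


if __name__ == "__main__":
    # Hypothetical average school spending per village (USD).
    per_village = incremental_cost_per_village(spend_selected=50_000.0, spend_unselected=10_000.0)
    n_selected = 100  # hypothetical number of selected villages
    incremental_total = per_village * n_selected
    # Hypothetical total program investment (USD).
    share = share_of_total_investment(incremental_total, total_investment=8_000_000.0)
    print(f"Incremental cost counted: ${incremental_total:,.0f}")  # $4,000,000
    print(f"Share of total investment counted: {share:.0%}")  # 50%
```

Only the difference between the two village groups enters the analysis. Any spending that would have occurred in unselected villages anyway is netted out, which is why the counted amount can be well below the funder's total investment.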

The first strategy for making this comparison is called cost-effectiveness analysis. This analysis yields a statistic that directly compares the treatment effects of the program presented in the previous chapters to the costs of the program. Specifically, it is the ratio of an intervention's costs to its effects, that is, the cost per unit of effect. For enrollment, for example, the program provides the benefit of causing children to be enrolled in school. The cost-effectiveness of the program for enrollment estimates the average cost of enrolling a child in school for a single year by dividing the cost of the program by the number of child-years of enrollment the program caused. In other words, it measures the cost of causing one additional child to attend school for one year, which we express in dollars per child-year of enrollment.
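The ratio described above can be sketched in Python as follows. The function names, the assumption that the enrollment impact is constant over the period, and all input values are hypothetical. They serve only to show the arithmetic behind a figure in dollars per child-year.

```python
# Hypothetical sketch of a cost-effectiveness ratio (cost per unit of effect).
# All inputs below are illustrative placeholders, not estimates from the report.


def additional_child_years(enrollment_impact: float, n_children: int, years: float) -> float:
    """Child-years of enrollment caused by a program.

    enrollment_impact is the impact on the probability of enrollment
    (0.25 means 25 percentage points), assumed constant over `years`.
    """
    return enrollment_impact * n_children * years


def cost_effectiveness_ratio(program_cost: float, units_of_effect: float) -> float:
    """Cost divided by effect, e.g., dollars per child-year of enrollment."""
    if units_of_effect <= 0:
        raise ValueError("units_of_effect must be positive for a meaningful ratio")
    return program_cost / units_of_effect


if __name__ == "__main__":
    child_years = additional_child_years(enrollment_impact=0.25, n_children=10_000, years=3)
    ratio = cost_effectiveness_ratio(program_cost=1_500_000.0, units_of_effect=child_years)
    print(f"{child_years:,.0f} child-years at ${ratio:,.0f} per child-year")
    # 7,500 child-years at $200 per child-year
```

The same ratio applies to test scores if `units_of_effect` is replaced by the total test-score gain the program caused, in whatever unit is chosen. That variant is likewise an illustration rather than the report's calculation.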

The advantage of this measure is that it requires the fewest assumptions of the analyses discussed below. The impact estimates are used as reported in the previous chapters; the only additional information required is the cost of running the program up to the point of the survey. However, the set of programs to which BRIGHT can be compared using this analysis is also much smaller. In what follows, we present a cost-effectiveness analysis of the BRIGHT program on test scores and enrollment. Under some circumstances, we can directly compare the program to other education programs that target these outcomes.38 However, we cannot use this analysis to compare BRIGHT to education programs that target

38 It is also important to note that comparisons are not always possible, even when education programs target the same outcomes, if both programs affect more than one outcome. For example, if a comparison program is less cost-effective than BRIGHT at improving both test scores and enrollment, BRIGHT is clearly better. However, if BRIGHT is more cost-effective at improving test scores but less cost-effective at improving enrollment, this methodology provides no means of determining the better policy option.
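The dominance logic in the footnote can be written out as a small Python check. Each program is described by its cost per unit of effect on each outcome, where lower is better. The function returns a winner only when one program is at least as cost-effective on every outcome and strictly better on at least one. Otherwise it reports that the comparison is inconclusive. The names and example values are hypothetical.

```python
# Hypothetical sketch of the dominance comparison described in footnote 38.
from typing import Dict, Optional


def dominant_program(a: Dict[str, float], b: Dict[str, float]) -> Optional[str]:
    """Compare two programs by cost per unit of effect on each outcome.

    Lower cost per unit is better. Returns "A" or "B" when that program is
    no worse on every outcome and strictly better on at least one; returns
    None when results are mixed (or identical), i.e., no ranking is possible.
    """
    if set(a) != set(b):
        raise ValueError("programs must be compared on the same outcomes")
    a_no_worse = all(a[k] <= b[k] for k in a)
    b_no_worse = all(b[k] <= a[k] for k in a)
    if a_no_worse and not b_no_worse:
        return "A"
    if b_no_worse and not a_no_worse:
        return "B"
    return None


if __name__ == "__main__":
    # Hypothetical dollars per unit of effect on each outcome.
    bright = {"test_scores": 100.0, "enrollment": 200.0}
    costlier = {"test_scores": 150.0, "enrollment": 250.0}
    mixed = {"test_scores": 150.0, "enrollment": 180.0}
    print(dominant_program(bright, costlier))  # A
    print(dominant_program(bright, mixed))  # None
```

Returning `None` for mixed results mirrors the footnote's point. Without some way to weight one outcome against another, such as the monetized benefits used in a cost-benefit analysis, cost-effectiveness ratios alone cannot rank the two programs.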
