Bernal S D_2010.pdf - University of Plymouth

Bernal S D_2010.pdf - University of Plymouth

Bernal S D_2010.pdf - University of Plymouth

You also want an ePaper? Increase the reach of your titles

YUMPU automatically turns print PDFs into web optimized ePapers that Google loves.

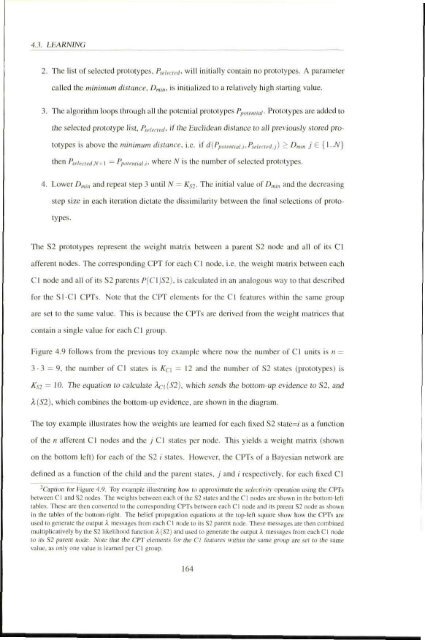

4.3. LEARNING<br />

2. The list <strong>of</strong> selected prototypes. Fjc/rn.'^. will initially contain no prototypes. A parameter<br />

called the minimum distance, Dmin- '^^ initialized to a relatively high starting value.<br />

3. The algorithm loops through all die potential prototypes /*,,n,„v,;. Prototypes are added to<br />

the selected prototype li,^t. l^^hried- if ihe Euclidean distance to all previously stored pro<br />

totypes is above the minimum distance, i.e. if d{Ppo,eni,ai.i- Pseimrdj) i l^mtn j ^ {\--N}<br />

then Psfierredjj+i — Pporeniiai.i' wHcrc N is the number <strong>of</strong> selected prototypes.<br />

4. Lower D,„,„ and repeat step .1 until N - K^i- The initial value <strong>of</strong> D,„i„ and the decreasing<br />

step size in each iteration dictate the dissimilarity between the final selection.'; <strong>of</strong> proto<br />

types,<br />

The S2 prototypes represent the weight matrix between a parent S2 node and all <strong>of</strong> its CI<br />

afferent nodes. The corresponding CI'T for each C1 node, i.e. the weight matrix between each<br />

n node and all <strong>of</strong> its S2 parents P{C\\S2). is calculated in an analogous way lo thai described<br />

for the Sl-Cl CRTs. Note that the CPT elements for the CI features within the same group<br />

are set to the same value. This is because the CPTs are derived from the weight matrices that<br />

contain a single value for each CI group.<br />

Figure 4,9 follows from the previous toy example where now the number <strong>of</strong> CI units is « =<br />

3 • 3 — 9, the number o!' CI slates is Kn = 12 and the number <strong>of</strong> S2 stales (prototypes) is<br />

Ks2 = 10. The equation to calculate Aci(52), which sends the bottom-up evidence to S2, and<br />

A (S2), which combines the botlom-up evidence, are shown in the diagram,<br />

The toy example illustrates how the weighls are learned for each fixed S2 state=( as a function<br />

<strong>of</strong> the n afferent CI ntMJes and the ./ CI .states per node. This yields a weight matrix (shown<br />

on the botlom left) for each <strong>of</strong> the S2 i states. However, the CPTs <strong>of</strong> a Bayesian network are<br />

defined as a function <strong>of</strong> the child and the parent states, j and i respectively, for each fixed CI<br />

*Capiiiin for Figure 4.9, Toy example illusirFiliiiy liow ID Lipprojiimaie ihe sekniiviiy operalion using Ihf CRTs<br />

tviweenC'l and S2 nodes. The weighis between eafh uf the S2 ilaie^ aiidlhe fl rwdts are shown in the boilom-lett<br />

tables. These are ihen eonverted in ihe conespimtlinp CF1\ l>eiween each CI node ami iis pureiil S2 node as shown<br />

in ihe tables <strong>of</strong> ihe bottom-right. The belief propagation equaiions ui ihe lop-lelt square show how the CI^Ts are<br />

u.M'd io generate ilic output A messages from each CI node loiis S2 parem niKie. These messages are then combined<br />

muliiplicaiively by ihe S2 likcliho(xl runciion k{S2) ami used lo generate the output X me-ssases from each CI node<br />

10 its S2 ptireni node, .Note that the Cl'T elemenw for iho CI fcaiures within the same group are sei lu ihc same<br />

value, as only one value is learned per CI ptoup.<br />

164