Subsampling estimates of the Lasso distribution.


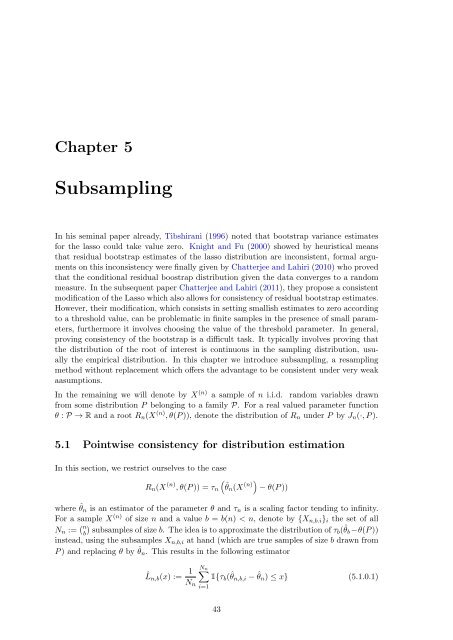

Chapter 5

Subsampling

In his seminal paper, Tibshirani (1996) already noted that bootstrap variance estimates for the Lasso can take the value zero. Knight and Fu (2000) showed by heuristic arguments that residual bootstrap estimates of the Lasso distribution are inconsistent; formal arguments for this inconsistency were finally given by Chatterjee and Lahiri (2010), who proved that the conditional residual bootstrap distribution given the data converges to a random measure. In the subsequent paper Chatterjee and Lahiri (2011), they propose a consistent modification of the Lasso which also allows for consistency of residual bootstrap estimates. However, their modification, which consists in setting small estimates to zero according to a threshold value, can be problematic in finite samples in the presence of small parameters; furthermore, it requires choosing the value of the threshold parameter.

In general, proving consistency of the bootstrap is a difficult task. It typically involves proving that the distribution of the root of interest is continuous in the distribution from which the samples are drawn, which for the bootstrap is usually the empirical distribution. In this chapter we introduce subsampling, a resampling method without replacement which offers the advantage of being consistent under very weak assumptions.

In the remainder, we denote by $X^{(n)}$ a sample of $n$ i.i.d. random variables drawn from some distribution $P$ belonging to a family $\mathcal{P}$. For a real-valued parameter function $\theta : \mathcal{P} \to \mathbb{R}$ and a root $R_n(X^{(n)}, \theta(P))$, denote the distribution of $R_n$ under $P$ by $J_n(\cdot, P)$.
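When $P$ is known, as in a simulation study, $J_n(x, P)$ can be approximated directly by drawing many samples $X^{(n)}$ from $P$ and counting how often the root falls below $x$. The minimal sketch below does this, reading $J_n(x, P)$ as the distribution function $P(R_n \le x)$. The function name `mc_root_cdf`, its parameters, and the exponential example are hypothetical illustrations, not part of the text; in practice $P$ is unknown, which is exactly why a resampling estimate is needed.

```python
import math
import random
from typing import Callable, List


def mc_root_cdf(
    draw: Callable[[random.Random], float],
    root: Callable[[List[float]], float],
    n: int,
    x: float,
    reps: int = 10_000,
    seed: int = 0,
) -> float:
    """Hypothetical helper: Monte Carlo approximation of J_n(x, P) = P(R_n <= x).

    Only feasible when P is known: `draw` returns one observation from P, and
    `root` maps a sample X^(n) to R_n(X^(n), theta(P)) with theta(P) built in.
    """
    rng = random.Random(seed)
    hits = 0
    for _ in range(reps):
        sample = [draw(rng) for _ in range(n)]  # X^(n): n i.i.d. draws from P
        if root(sample) <= x:
            hits += 1
    return hits / reps


# Illustrative choice: P = Exponential(1), theta(P) = mean = 1,
# root R_n = sqrt(n) * (sample mean - 1).
j = mc_root_cdf(
    draw=lambda r: r.expovariate(1.0),
    root=lambda s: math.sqrt(len(s)) * (sum(s) / len(s) - 1.0),
    n=50,
    x=0.0,
)
```

For this skewed $P$ the value at $x = 0$ is close to, but typically somewhat above, $1/2$, reflecting the finite-sample asymmetry of the sample mean.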

5.1 Pointwise consistency for distribution estimation

In this section, we restrict ourselves to the case

$$R_n(X^{(n)}, \theta(P)) = \tau_n\bigl(\hat\theta_n(X^{(n)}) - \theta(P)\bigr),$$

where $\hat\theta_n$ is an estimator of the parameter $\theta$ and $\tau_n$ is a scaling factor tending to infinity.

For a sample $X^{(n)}$ of size $n$ and a value $b = b(n) < n$, denote by $\{X_{n,b,i}\}_{i=1}^{N_n}$ the set of all $N_n := \binom{n}{b}$ subsamples of size $b$. The idea is to approximate the distribution of $\tau_b(\hat\theta_b - \theta(P))$ instead of that of $\tau_n(\hat\theta_n - \theta(P))$, using the subsamples $X_{n,b,i}$ at hand (which are true samples of size $b$ drawn from $P$) and replacing $\theta(P)$ by $\hat\theta_n$. This results in the following estimator:

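$$\hat L_{n,b}(x) := \frac{1}{N_n} \sum_{i=1}^{N_n} \mathbf{1}\bigl\{\tau_b\bigl(\hat\theta_{n,b,i} - \hat\theta_n\bigr) \le x\bigr\}, \tag{5.1.0.1}$$

where $\hat\theta_{n,b,i}$ denotes the estimator $\hat\theta_b$ evaluated on the subsample $X_{n,b,i}$.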
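The estimator (5.1.0.1) translates almost literally into code. The minimal sketch below assumes a scalar estimator and a scaling sequence passed as a function of the sample size; the names `subsampling_cdf`, `max_subsamples` and `seed` are hypothetical. With `max_subsamples=None` it enumerates all $N_n = \binom{n}{b}$ subsamples exactly as in (5.1.0.1); otherwise it draws subsets of size $b$ uniformly at random, a Monte Carlo shortcut assumed here for cases where $N_n$ is too large to enumerate.

```python
import itertools
import math
import random
from typing import Callable, Optional, Sequence


def subsampling_cdf(
    data: Sequence[float],
    b: int,
    estimator: Callable[[Sequence[float]], float],
    tau: Callable[[int], float],
    x: float,
    max_subsamples: Optional[int] = None,
    seed: int = 0,
) -> float:
    """Hypothetical helper: subsampling estimate L_{n,b}(x) from (5.1.0.1).

    max_subsamples=None enumerates all C(n, b) subsamples; otherwise
    max_subsamples random subsets of size b are used (Monte Carlo approximation).
    """
    n = len(data)
    if not 0 < b < n:
        raise ValueError("need 0 < b < n")
    theta_n = estimator(data)  # full-sample estimate replaces theta(P)
    scale = tau(b)             # subsample roots are scaled by tau_b, not tau_n

    if max_subsamples is None:
        subsamples = itertools.combinations(data, b)  # all N_n subsets, no replacement
    else:
        rng = random.Random(seed)
        pool = list(data)
        subsamples = (rng.sample(pool, b) for _ in range(max_subsamples))

    hits = 0
    total = 0
    for sub in subsamples:
        total += 1
        if scale * (estimator(sub) - theta_n) <= x:
            hits += 1
    return hits / total


def mean(s: Sequence[float]) -> float:
    return sum(s) / len(s)


# Sample mean with tau_n = sqrt(n): 4 of the 6 subsamples of size 2 satisfy the event.
est = subsampling_cdf([1.0, 2.0, 3.0, 4.0], 2, mean, math.sqrt, 0.0)
```

Enumerating all subsets keeps the sketch faithful to the definition; since $\binom{n}{b}$ grows combinatorially, drawing a moderate number of random subsets is a common practical substitute.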
