Anaesthetic Agents for Day Surgery - NIHR Health Technology ...

Aanesthetic Agents for Day Surgery - NIHR Health Technology ...

Aanesthetic Agents for Day Surgery - NIHR Health Technology ...

Create successful ePaper yourself

Turn your PDF publications into a flip-book with our unique Google optimized e-Paper software.

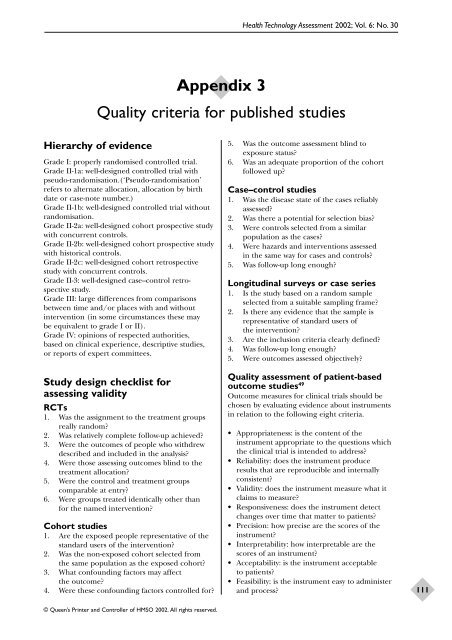

Hierarchy of evidence<br />

Grade I: properly randomised controlled trial.<br />

Grade II-1a: well-designed controlled trial with pseudo-randomisation. (‘Pseudo-randomisation’ refers to alternate allocation, allocation by birth date or case-note number.)<br />

Grade II-1b: well-designed controlled trial without randomisation.<br />

Grade II-2a: well-designed cohort prospective study with concurrent controls.<br />

Grade II-2b: well-designed cohort prospective study with historical controls.<br />

Grade II-2c: well-designed cohort retrospective study with concurrent controls.<br />

Grade II-3: well-designed case–control retrospective study.<br />

Grade III: large differences from comparisons between time and/or places with and without intervention (in some circumstances these may be equivalent to grade I or II).<br />

Grade IV: opinions of respected authorities, based on clinical experience, descriptive studies, or reports of expert committees.<br />
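The grades above form an ordered scale, which can be sketched as a simple lookup for ranking studies by strength of evidence. This is only an illustrative sketch: the grade labels come from the list above, but the function names and the example study labels are hypothetical, and treating the hierarchy as a strict ranking is an assumption (the source notes that grade III may sometimes be equivalent to grade I or II).

```python
# Grade labels copied from the hierarchy above, strongest evidence first.
# Treating them as a strict rank is an illustrative assumption: the source
# notes that grade III may in some circumstances be equivalent to grade I or II.
EVIDENCE_GRADES = [
    "I", "II-1a", "II-1b", "II-2a", "II-2b", "II-2c", "II-3", "III", "IV",
]


def grade_rank(grade: str) -> int:
    """Return the position of a grade in the hierarchy (0 = strongest)."""
    return EVIDENCE_GRADES.index(grade)


def sort_by_evidence(studies: list[tuple[str, str]]) -> list[tuple[str, str]]:
    """Sort (study_label, grade) pairs from strongest to weakest grade."""
    return sorted(studies, key=lambda study: grade_rank(study[1]))


# Hypothetical study labels, for illustration only.
example = [("Study C", "IV"), ("Study A", "II-2a"), ("Study B", "I")]
print(sort_by_evidence(example))
```

An unknown grade label raises `ValueError` from `list.index`, which may be preferable to silently mis-ranking a study.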

Study design checklist for assessing validity<br />

RCTs<br />

1. Was the assignment to the treatment groups really random?<br />

2. Was relatively complete follow-up achieved?<br />

3. Were the outcomes of people who withdrew described and included in the analysis?<br />

4. Were those assessing outcomes blind to the treatment allocation?<br />

5. Were the control and treatment groups comparable at entry?<br />

6. Were groups treated identically other than for the named intervention?<br />
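One way a reviewer might apply the RCT checklist above is to record an answer per question for each trial and list the criteria that were not met. The sketch below assumes simple yes/no answers, which the source does not prescribe; the function name and the example answers are hypothetical.

```python
# RCT checklist questions copied from the list above.
RCT_CHECKLIST = [
    "Was the assignment to the treatment groups really random?",
    "Was relatively complete follow-up achieved?",
    "Were the outcomes of people who withdrew described and included in the analysis?",
    "Were those assessing outcomes blind to the treatment allocation?",
    "Were the control and treatment groups comparable at entry?",
    "Were groups treated identically other than for the named intervention?",
]


def unmet_criteria(answers: list[bool]) -> list[str]:
    """Return the checklist questions answered 'no' for one study.

    Assumes one yes/no answer per question, in checklist order.
    """
    if len(answers) != len(RCT_CHECKLIST):
        raise ValueError("one answer is needed per checklist question")
    return [q for q, ok in zip(RCT_CHECKLIST, answers) if not ok]


# Hypothetical answers for an illustrative trial whose outcome
# assessors were not blinded (question 4).
print(unmet_criteria([True, True, True, False, True, True]))
```

The same pattern could be repeated for the cohort, case–control and longitudinal checklists by swapping in their question lists.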

Cohort studies<br />

1. Are the exposed people representative of the standard users of the intervention?<br />

2. Was the non-exposed cohort selected from the same population as the exposed cohort?<br />

3. What confounding factors may affect the outcome?<br />

4. Were these confounding factors controlled for?<br />

© Queen’s Printer and Controller of HMSO 2002. All rights reserved.<br />

Appendix 3<br />

Health Technology Assessment 2002; Vol. 6: No. 30<br />

Quality criteria for published studies<br />

5. Was the outcome assessment blind to exposure status?<br />

6. Was an adequate proportion of the cohort followed up?<br />

Case–control studies<br />

1. Was the disease state of the cases reliably assessed?<br />

2. Was there a potential for selection bias?<br />

3. Were controls selected from a similar population as the cases?<br />

4. Were hazards and interventions assessed in the same way for cases and controls?<br />

5. Was follow-up long enough?<br />

Longitudinal surveys or case series<br />

1. Is the study based on a random sample selected from a suitable sampling frame?<br />

2. Is there any evidence that the sample is representative of standard users of the intervention?<br />

3. Are the inclusion criteria clearly defined?<br />

4. Was follow-up long enough?<br />

5. Were outcomes assessed objectively?<br />

Quality assessment of patient-based outcome studies<sup>49</sup><br />

Outcome measures for clinical trials should be chosen by evaluating evidence about instruments in relation to the following eight criteria.<br />

• Appropriateness: is the content of the instrument appropriate to the questions which the clinical trial is intended to address?<br />

• Reliability: does the instrument produce results that are reproducible and internally consistent?<br />

• Validity: does the instrument measure what it claims to measure?<br />

• Responsiveness: does the instrument detect changes over time that matter to patients?<br />

• Precision: how precise are the scores of the instrument?<br />

• Interpretability: how interpretable are the scores of an instrument?<br />

• Acceptability: is the instrument acceptable to patients?<br />

• Feasibility: is the instrument easy to administer and process?<br />
